ACM.37: Using CloudTrail Lake to query the actions needed to create zero-trust policies (Zero Trust Policies ~ Part 2)

This is a continuation of my series on Automating Cybersecurity Metrics.

I wrote in my last post about an unsuccessful attempt to use AWS Athena to query CloudTrail due to my Control Tower setup.

https://medium.com/@2ndsightlab/how-to-create-zero-trust-aws-policies-45f9e778562b

In this post I’ll try out a newer option called CloudTrail Lake. I’m going to walk through this demo and see if it works with my Control Tower setup.

Let’s start with my test account. I’m an admin in that account but I really haven’t looked at what SCPs Control Tower has applied to my use of CloudTrail. Let’s find out.

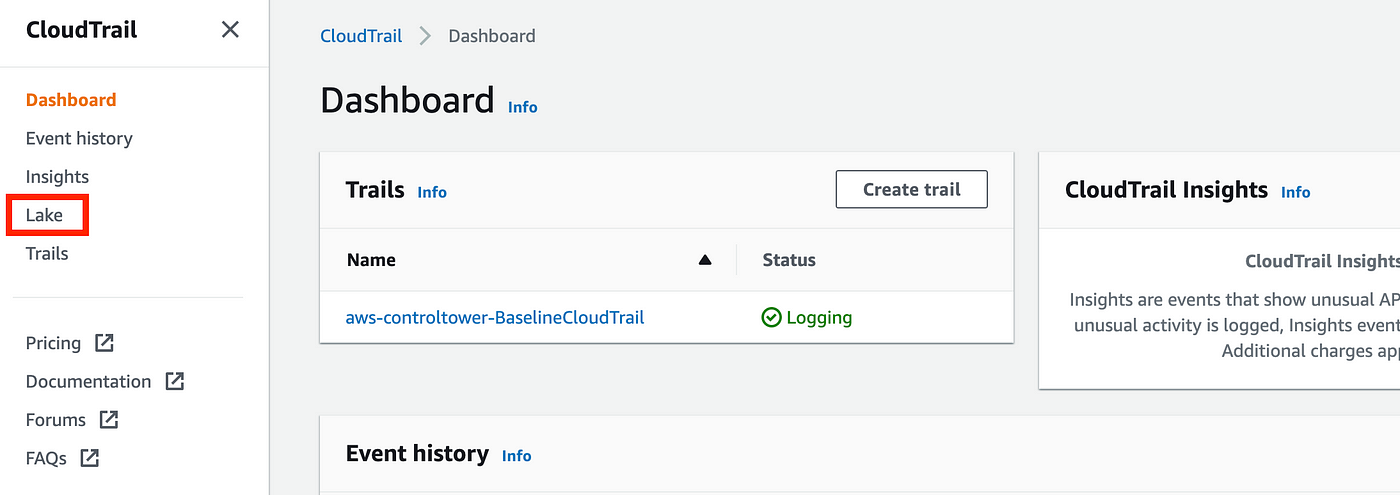

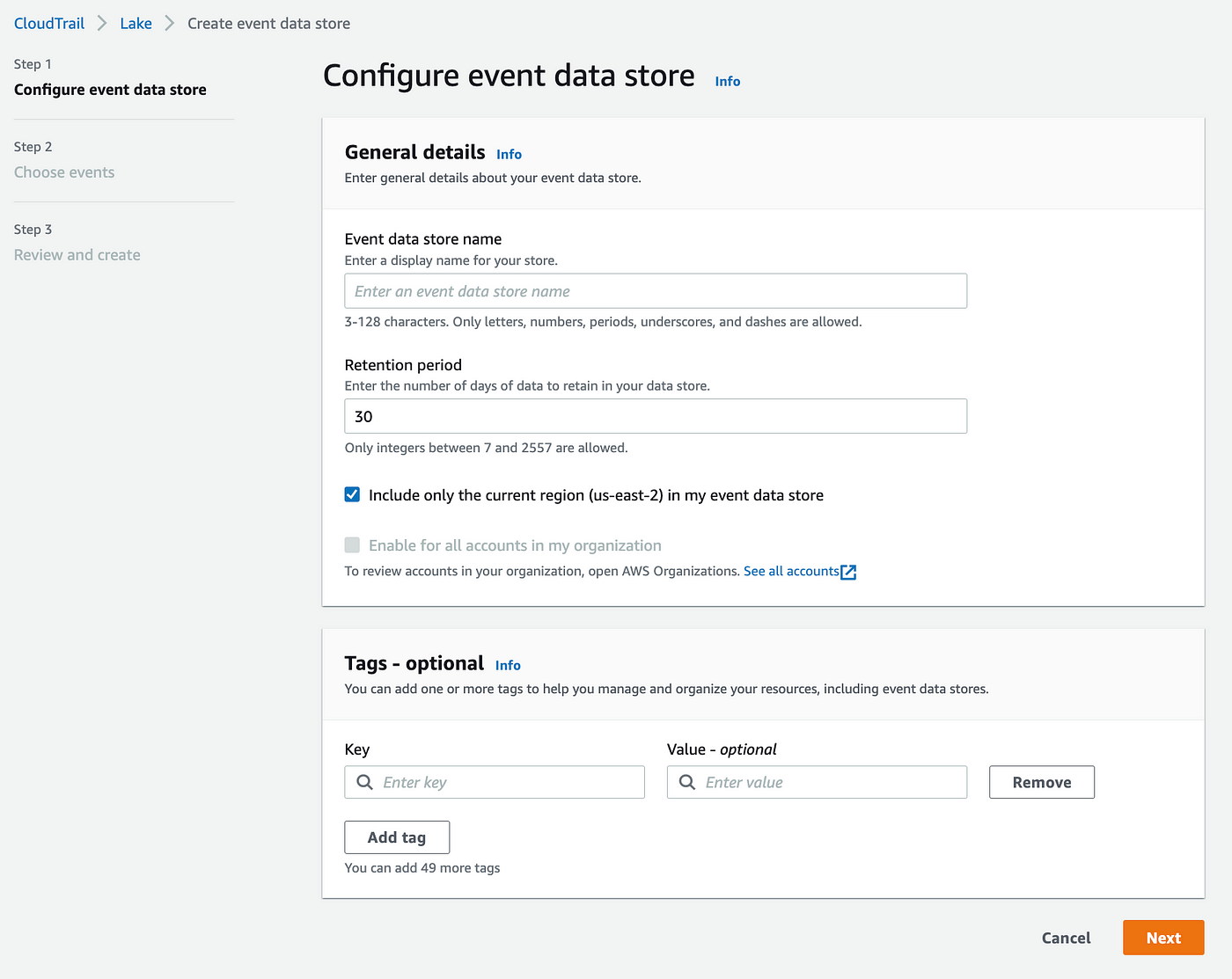

Navigate to CloudTrail and click on Lake.

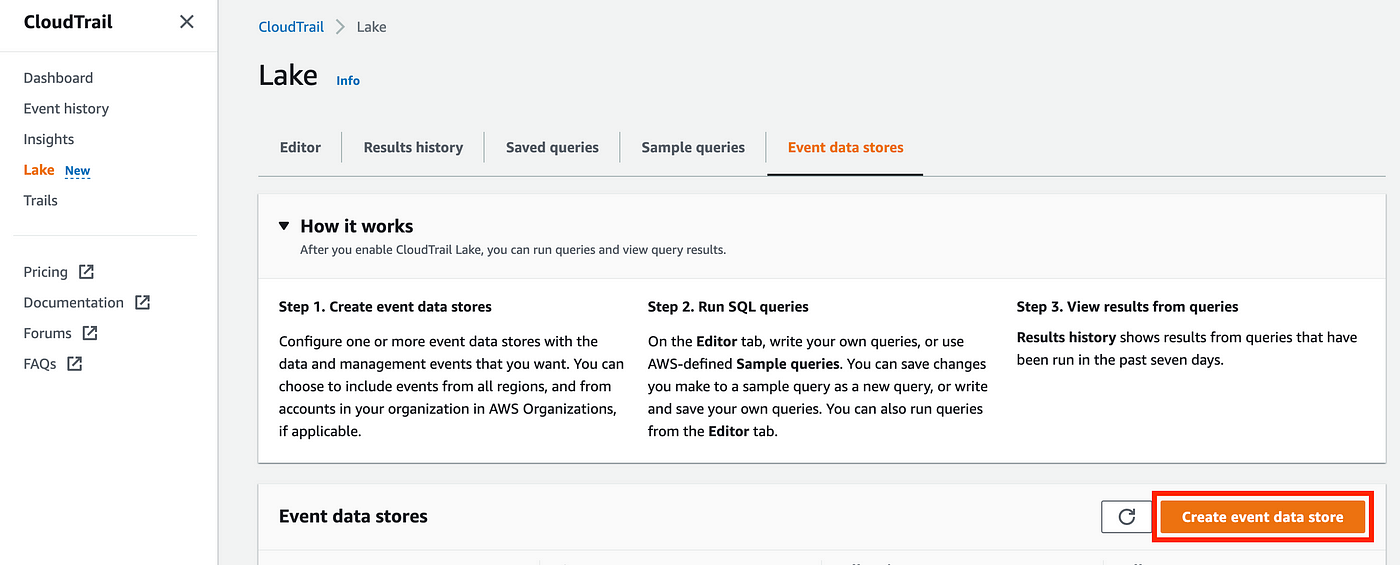

Click Create event data store.

On the next screen I have to choose how long to keep the data store. Let’s check out the price of this service:

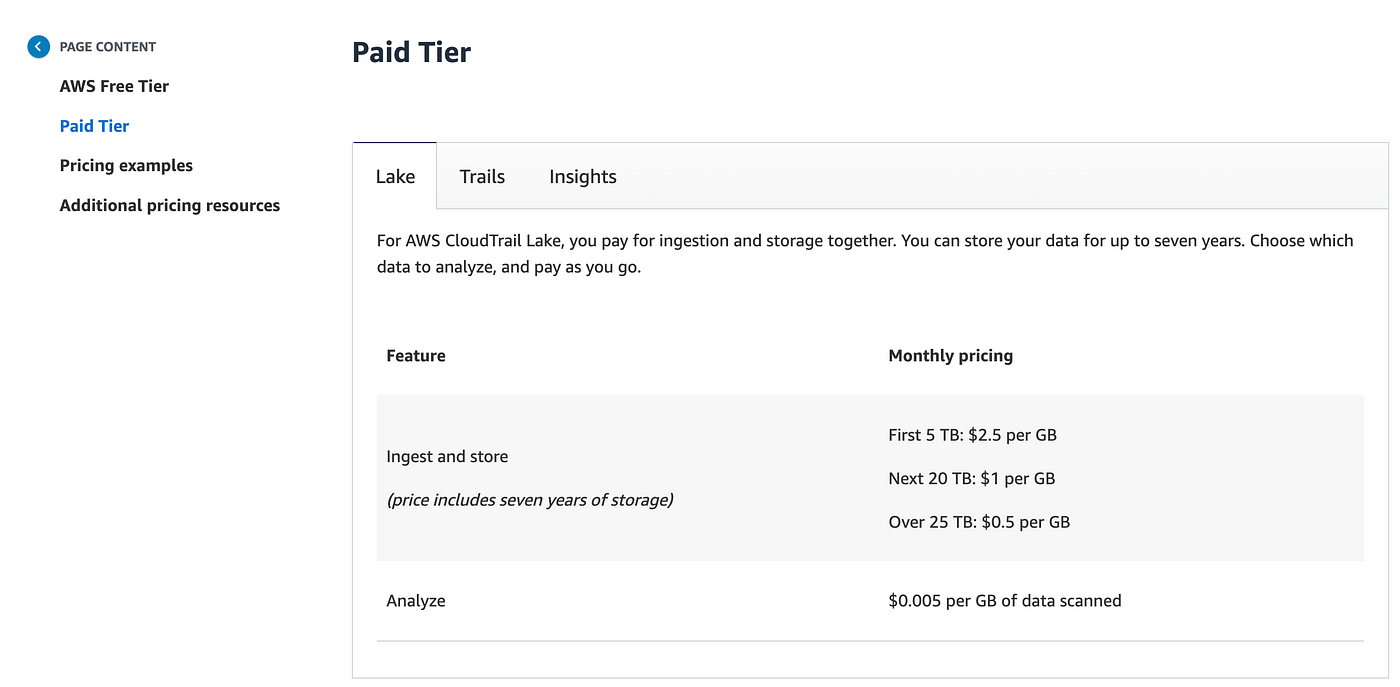

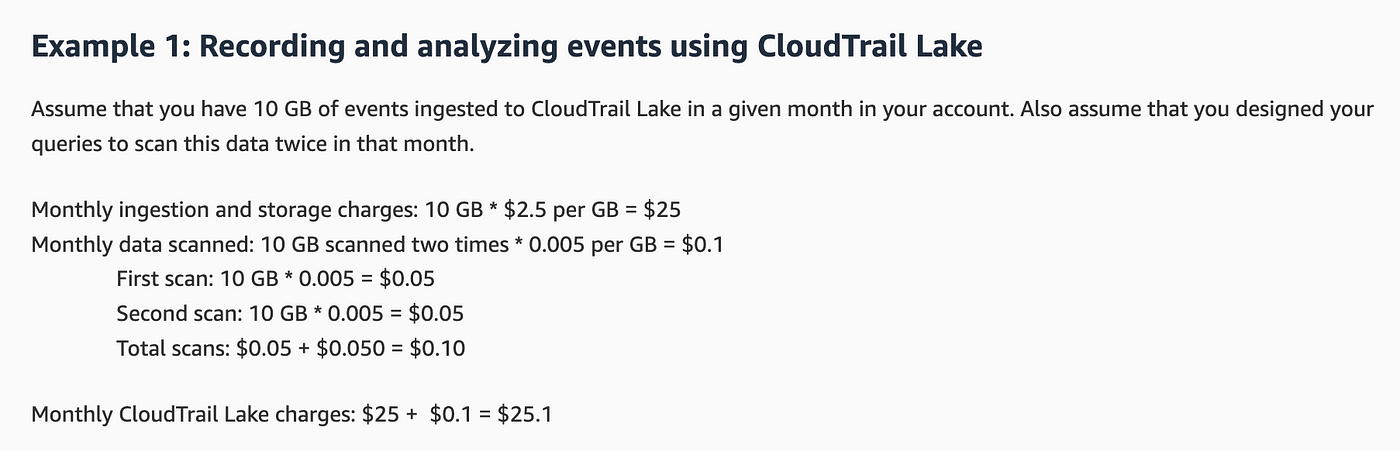

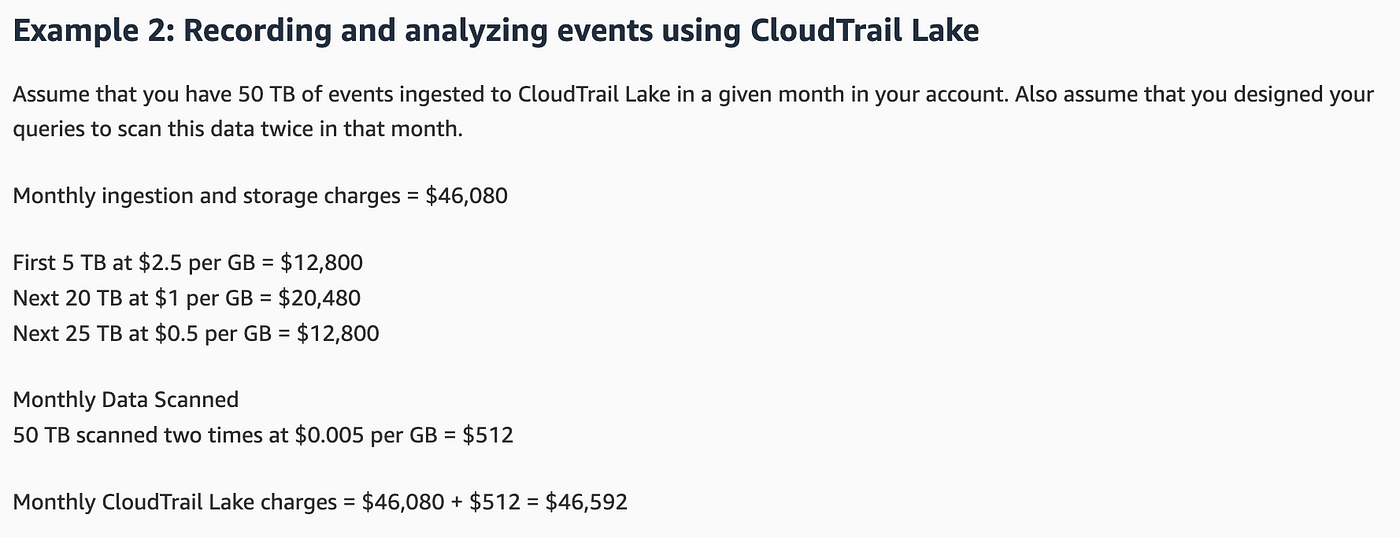

It looks like if I were to ingest the data over and over I would pay repeatedly for that ingestion, so I probably want to keep the data around as long as I think I’ll need it. How many times do I need to analyze it though? Is every query considered an “analysis”? Let’s look at the pricing example:

Although I don’t want to ingest the same data over and over, I’ll pay for continued ingestion of new data.

Clearly the price goes up based on the amount of data. 50 TB of data. Almost $47K in this example. Wow.

How can I tell how much data I have in CloudTrail right now? I presume the amount of storage would match the size of the data in the S3 bucket we couldn’t access in the last post, further detailed in this post:

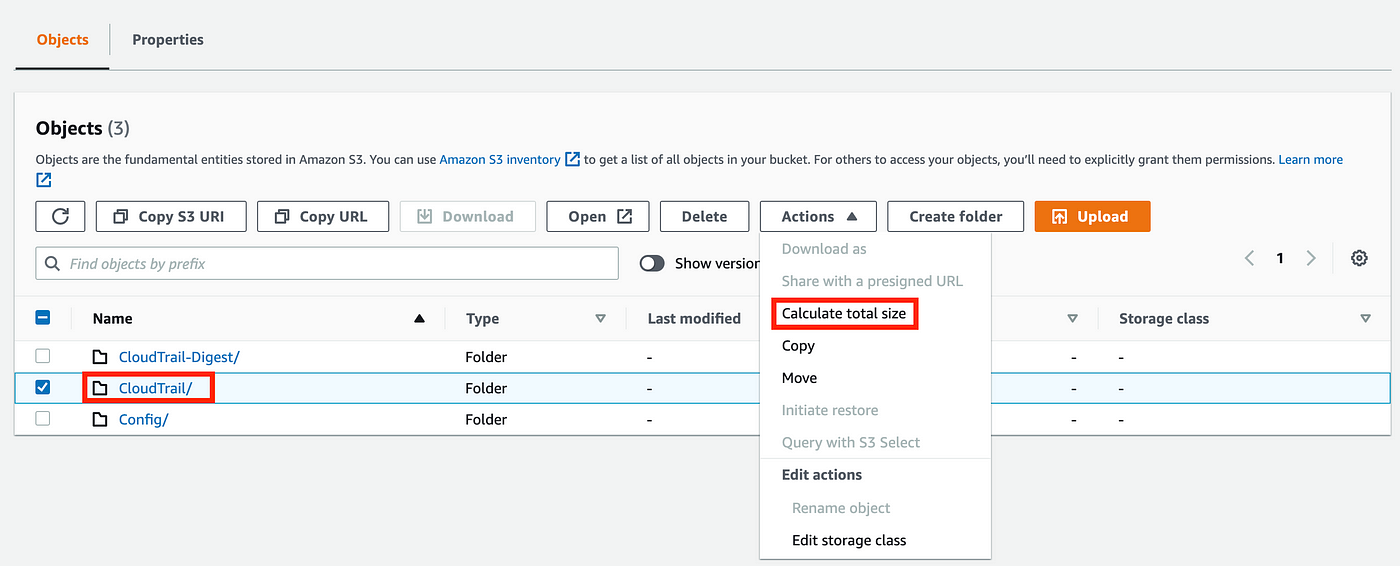

In my case the data is stored in the Control Tower log archive bucket, so I’ll navigate over there and specifically to the bucket containing the CloudTrail logs for the account where I’m working. Check the box for that account. Choose Actions > Calculate total size.

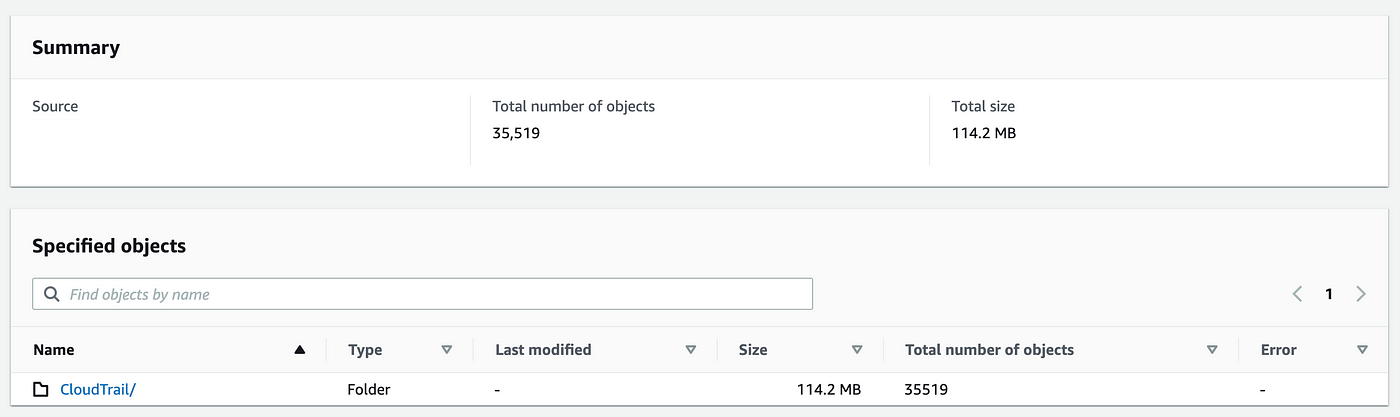

I created this account structure a while back for testing out Control Tower. Looks like my test account has stored 114.2 MB of CloudTrail logs.

If I can limit my queries to only this account then it looks like my cost to ingest will be $2.50 and $0.0005 to scan. That’s not too bad.
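A quick way to sanity-check figures like these is to do the arithmetic in code. This is only a sketch: `estimate_lake_cost` is a hypothetical helper, not an AWS API, and the per-GB rates are assumptions passed in as parameters, so take the real ones from the pricing page. It also assumes cost scales linearly with size, which may not match how AWS tiers or rounds usage.

```python
def estimate_lake_cost(size_mb, ingest_per_gb, scan_per_gb, scans=1):
    """Return (ingest_cost, scan_cost) in dollars for size_mb of logs."""
    size_gb = size_mb / 1024
    ingest_cost = size_gb * ingest_per_gb        # paid once when the data is ingested
    scan_cost = size_gb * scan_per_gb * scans    # paid each time a query scans the data
    return ingest_cost, scan_cost


# Assumed rates (not authoritative): $2.50 per GB ingested, $0.005 per GB scanned.
ingest, scan = estimate_lake_cost(114.2, ingest_per_gb=2.50, scan_per_gb=0.005)
print(f"ingest ~ ${ingest:.2f}, one full scan ~ ${scan:.4f}")
```

Under those assumptions, ingesting a fraction of a GB stays below the full per-GB rate, and the scan cost only matters if the same data is queried many times.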

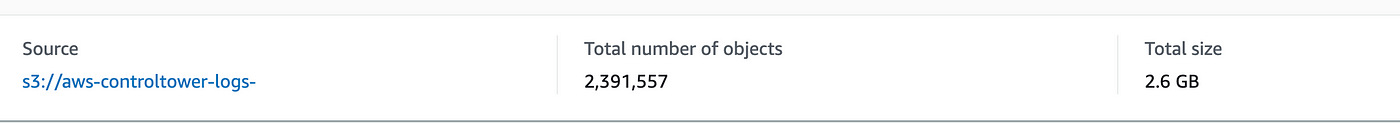

What if I have to run it on all the CloudTrail logs for my organization? It’s harder to get that because I have to go into each account separately, get the size, and exclude the CloudWatch metrics. Someone could write a query for that, but to get a rough idea I’ll just check the size of the entire organizational folder.
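The per-account tally could be scripted instead of clicked through. Here is a minimal sketch that takes a list of (key, size) pairs, such as you might get from an S3 object listing, and sums the CloudTrail objects per account. The key layout (an account ID folder directly above a `CloudTrail` folder) is an assumption about how the log archive bucket is organized, and the sample keys and account IDs are made up.

```python
from collections import defaultdict


def cloudtrail_bytes_by_account(objects):
    """Sum object sizes per account for keys shaped like .../<account-id>/CloudTrail/..."""
    totals = defaultdict(int)
    for key, size in objects:
        parts = key.split("/")
        if "CloudTrail" not in parts:
            continue  # skip anything that is not a CloudTrail log object
        idx = parts.index("CloudTrail")
        if idx == 0:
            continue  # no account folder above it, so the key doesn't fit the assumed layout
        totals[parts[idx - 1]] += size
    return dict(totals)


# Made-up listing for illustration only.
sample = [
    ("o-example/AWSLogs/o-example/111111111111/CloudTrail/us-east-2/2022/08/14/a.json.gz", 1200),
    ("o-example/AWSLogs/o-example/111111111111/CloudTrail/us-east-1/2022/08/14/b.json.gz", 800),
    ("o-example/AWSLogs/o-example/222222222222/CloudTrail/us-east-2/2022/08/14/c.json.gz", 500),
    ("o-example/AWSLogs/o-example/222222222222/Config/us-east-2/2022/8/14/d.json.gz", 999),
]
totals = cloudtrail_bytes_by_account(sample)
print(totals)
```

Anything outside a `CloudTrail` folder is ignored, which is one way to leave out other log types stored in the same bucket.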

Ouch. That’s a much bigger folder. I’ve been running this Control Tower configuration for a few months and the costs are definitely adding up compared to my other little test account as well. I’ll be exploring all that later.

I would love to just spend all my time writing about all this stuff for you but I don’t make enough money from my blog or book, so I’ll probably have to jump over to some other work at some point. But I would love to explore cost-effective solutions and write about them. We’ll see.

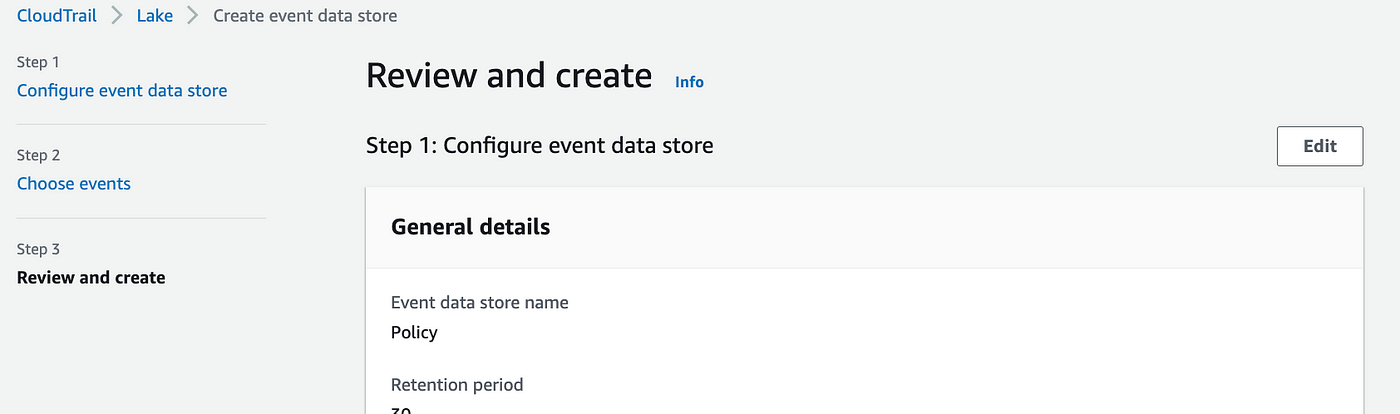

For now, if I can get this working on one account I’ll try it. Let’s continue with the steps for our single account, and I’ll set the storage to 30 days for this test of the CloudTrail Lake service. I’m also going to limit the logs to the current region.

I lost my place since I had to switch accounts, so back to my test account to repeat the steps above.

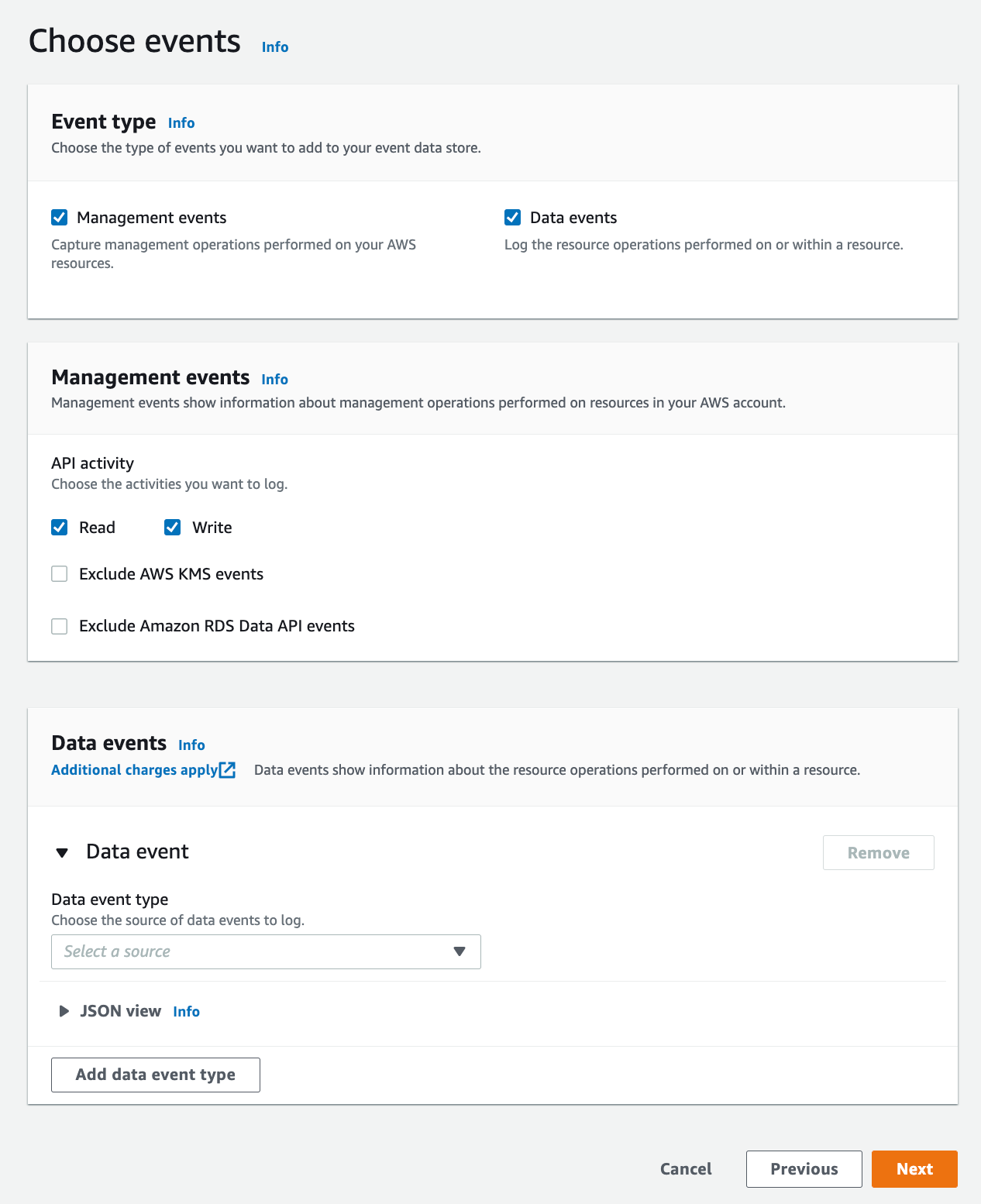

On the next screen there are a few more choices to make.

We can include Management Events:

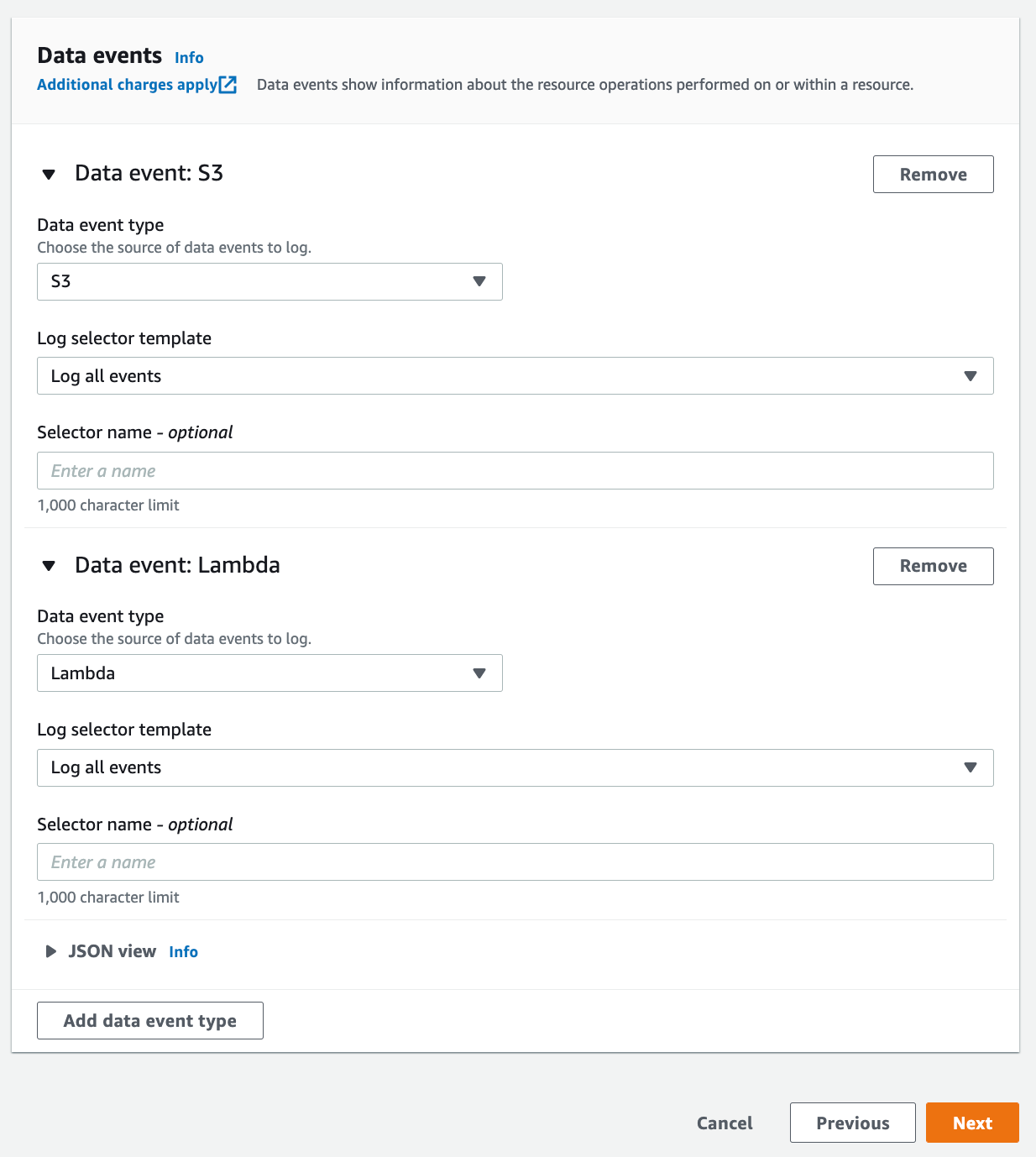

and Data Events:

The documentation doesn’t give a concrete list of what’s considered a “data event”:

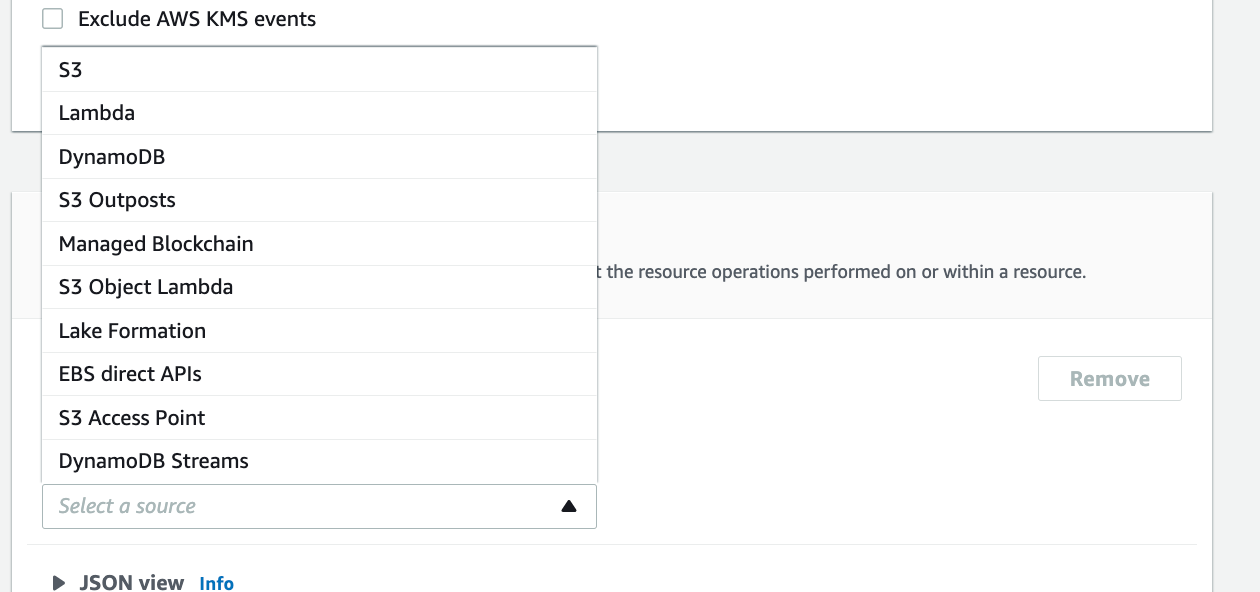

However, there’s a list of types of events you can add on this screen:

And “Additional charges apply”.

That link just takes you to the pricing page above, so I assume the charges are related to the additional logs that will need to be scanned.

Should you include data events in your logs?

These logs will cost you more. However, without them you cannot tell what an attacker accessed in the event of a data breach. A particular company I know that had one of the most notable AWS data breaches to date was about to turn off their data event logging before the breach. Without it, the organization would not have been able to define the exact data the attacker accessed. When you have a data breach you can be fined for each record exposed. Your logs help you reduce those fines by being very specific about what attackers accessed. By having the data event logs, the company was able to show the exact S3 objects the attacker accessed with a get action and avoid fines for every object with sensitive data in their S3 buckets.

So do you need data events? It depends on whether you store sensitive data and whether you want to see exactly what an attacker accessed in the event of a security incident. If you have a breach involving PII, PCI, HIPAA or other sensitive data, your detailed logs and a good security analyst can reduce the cost of a breach, provided you stopped the breach before the attacker got to all the data.

At this point, I’m not using the above services, but I expect to use S3 and Lambda at some point. I’ll add those for this test but I don’t know if I’ll need or use them.

Click Next. Review.

Click Create event data store.

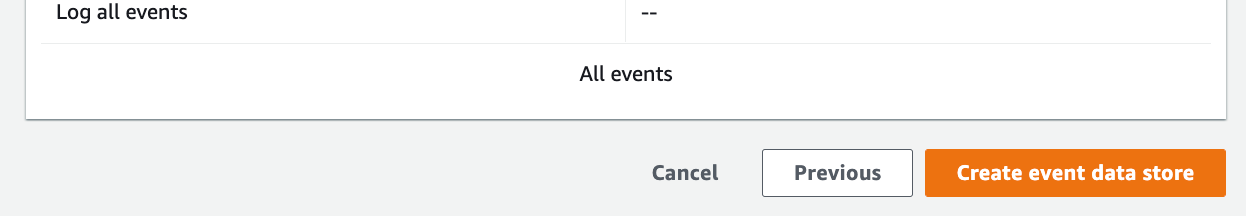

Seemed to work. Click on the link for your data store. Note the value of the ARN. Then return to the previous page.

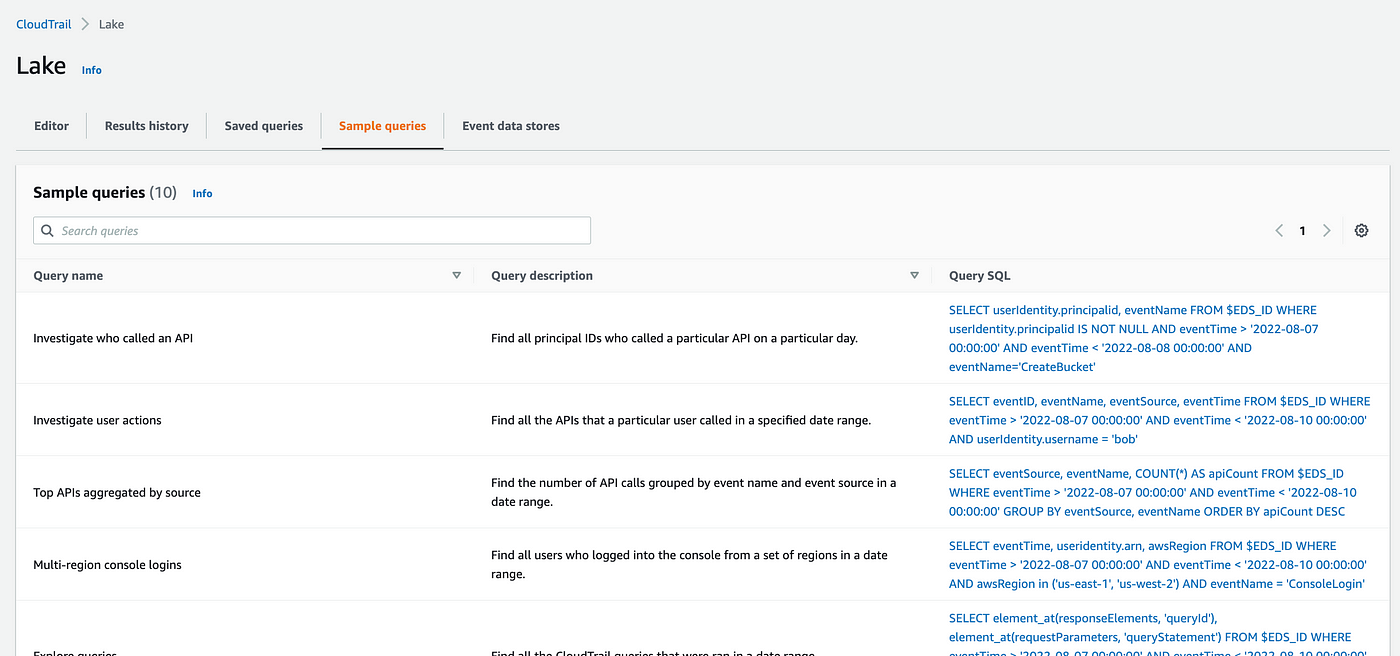

Click on on Pattern queries to get quite a few queries you’ll be able to check out:

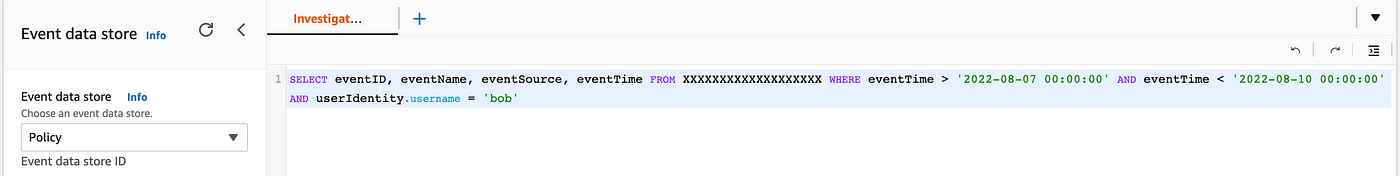

Click on on the hyperlink for the second: Examine consumer actions. Observe that the worth I’ve x’d out within the question under is the worth on the finish of your information retailer ARN. Observe that it’s additionally querying userIdentity.username for the consumer bob. We have to change that to the function that we’re making an attempt to create a zero-trust coverage for if we will.

Let’s re-run our check script for the batch job we have been engaged on once we launched into this mission to create a zero belief coverage. That manner we will get some contemporary occasions in our CloudTrail logs.

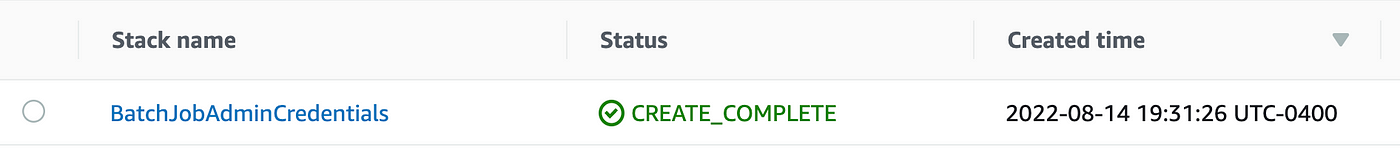

First I have to delete this CloudFormation stack: BatchJobAdminCredentials

Now I can re-run the test script for our batch job:

jobs/iam/DeployBatchJobCredentials/test.sh

Once it is successfully created I can review CloudTrail logs for the activity (it might take a few minutes for it to show up). Go to the full event history as we did in a prior post with the link at the bottom of the screen.

From here you can find the user name that took the actions that just occurred. I would personally rather search for all actions taken by a particular role, but we can get at it from the user session, which is more helpful if you are working in an account where multiple people are using the same role. Copy the value from the username column below. Your username will have some numbers after it to identify the unique session.

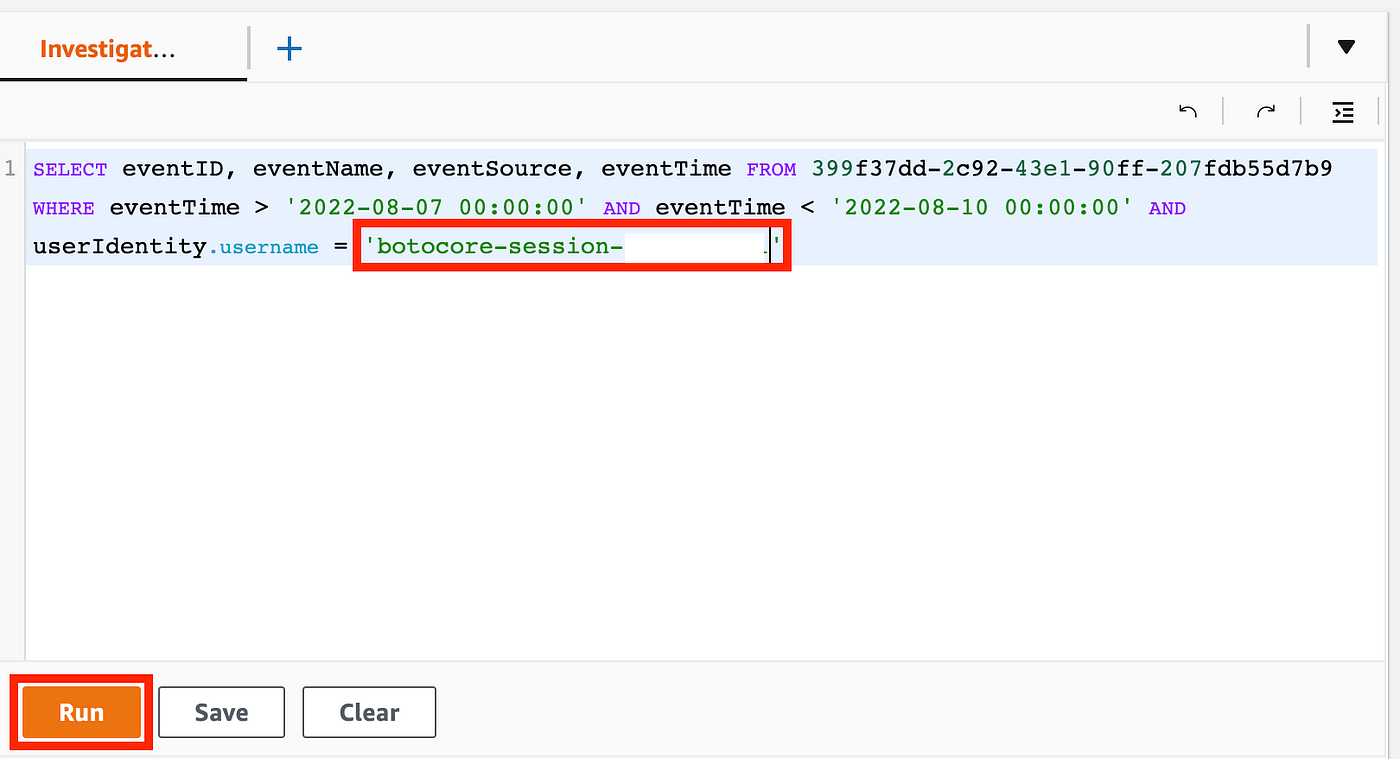

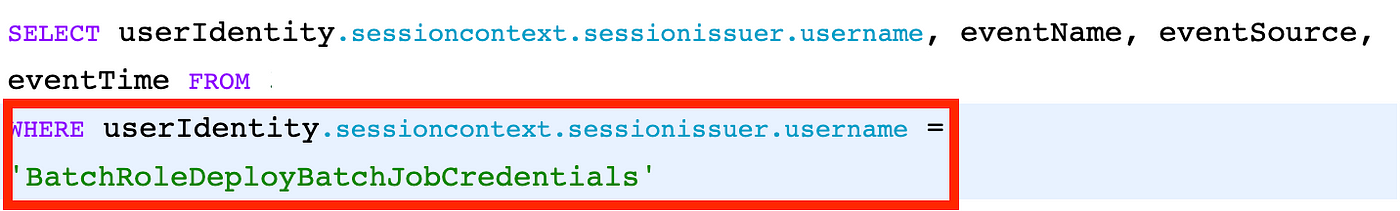

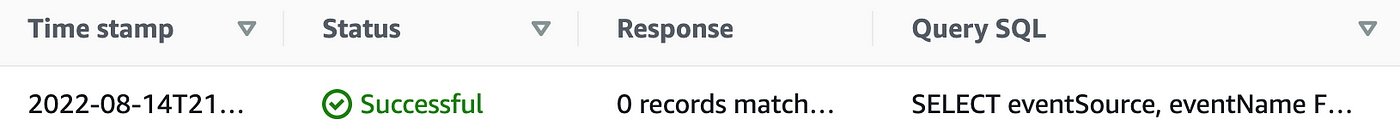

Return to CloudTrail Lake and the sample query we looked at above. Replace the username bob with the name you just copied above. Make sure you leave the single quotes. Click Run.

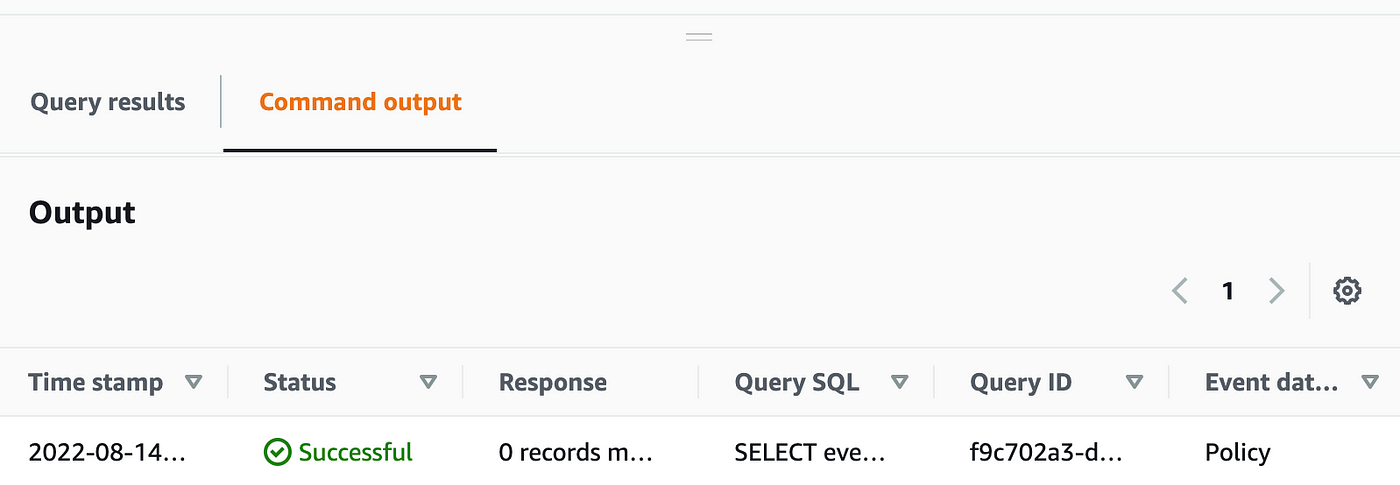

Scroll down to see that your query was successful:

Click query results. ?? I got no results.

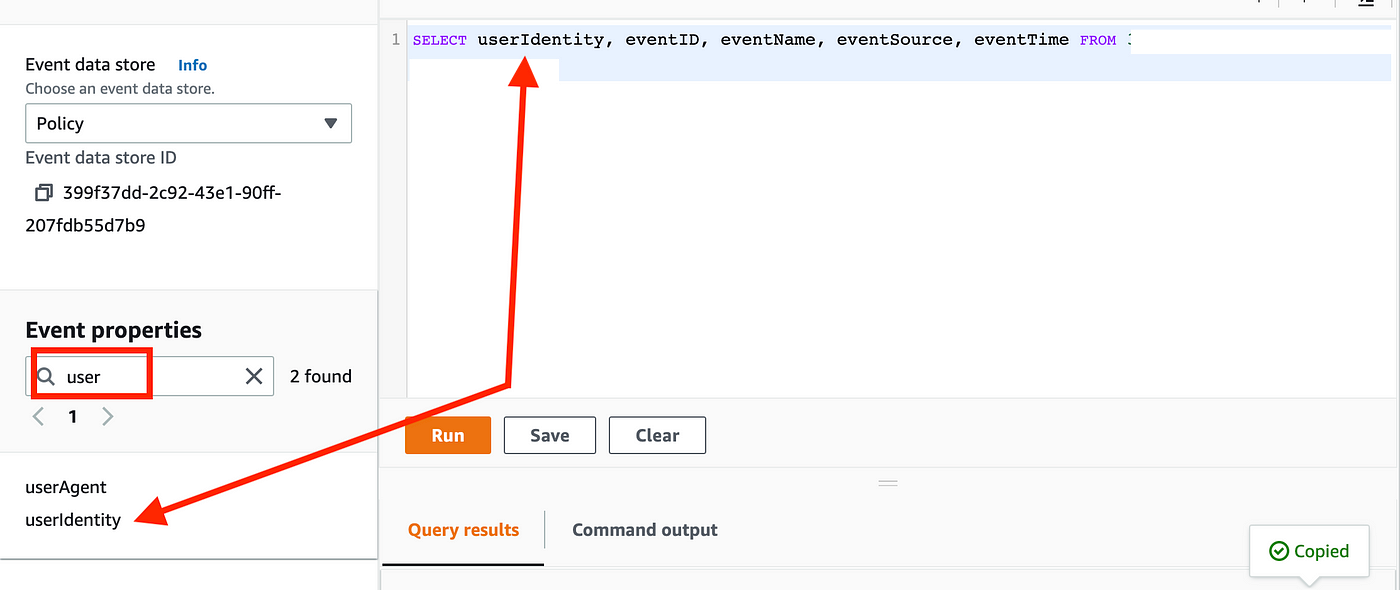

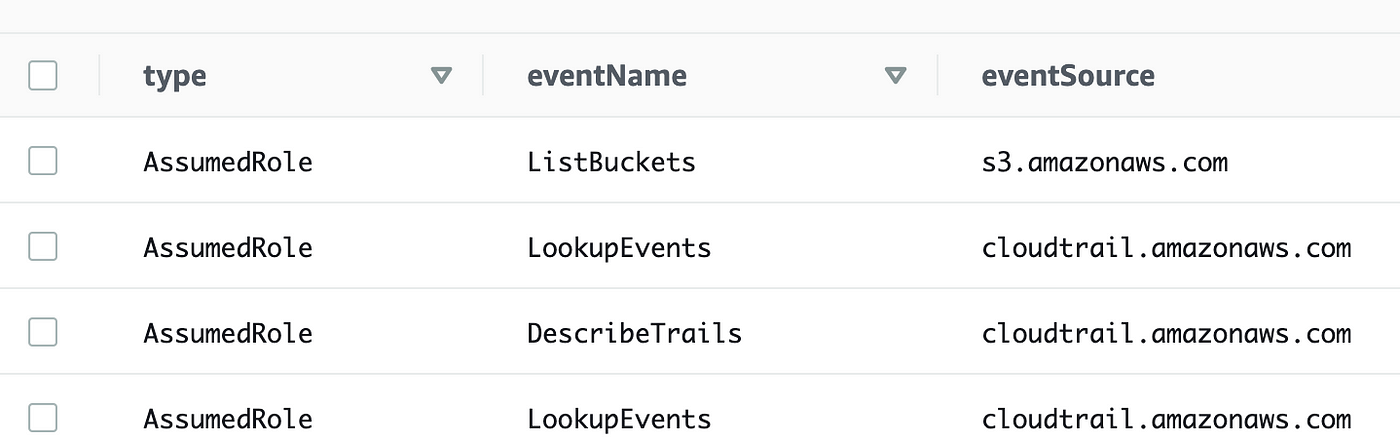

Let’s go back and change our query. Remove the time from the query. Still no luck. OK, remove the entire WHERE clause, but we want to add the user identity information.

Add userIdentity to the data our query returns.

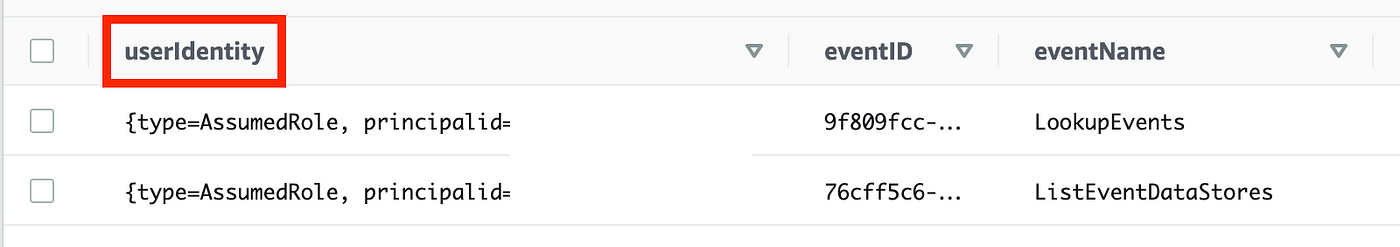

Now we get back a list of events with userIdentity in the first column. The value is a JSON blob with a number of key-value pairs in it, with information about the identity that took the action in CloudTrail.

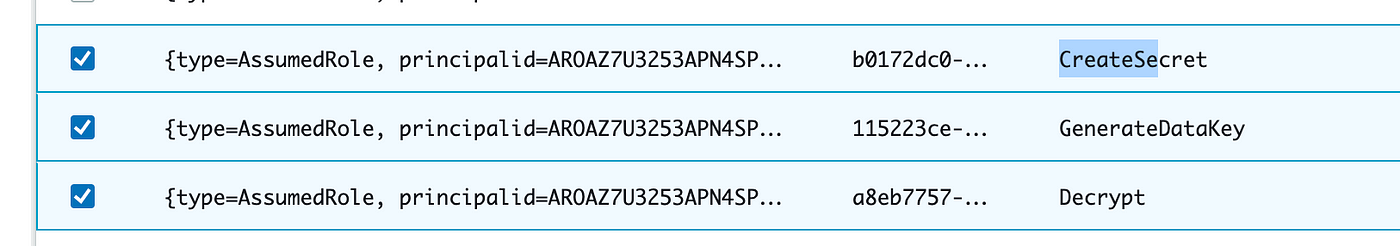

Scroll down to find our CreateSecret action. You can also type Cmd-F on a Mac (Ctrl-F elsewhere) and search for “CreateSecret”. I’m going to check the rows of three actions I think were taken by the batch job role.

Scroll back up and click Copy.

Now paste that information into a terminal window. There’s too much to redact in that blob of text so I’m not going to paste it here, but it shows all the key-value pairs in the output. You can use these keys and their values in your query. I see that username is null, which is why our query didn’t work.

What was that value in the CloudTrail UI? I see that principalid has that value but preceded by another value and a colon. That might work.

Scrolling down I also see the session context, which shows mfaauthenticated=false as this is a role, not a user.

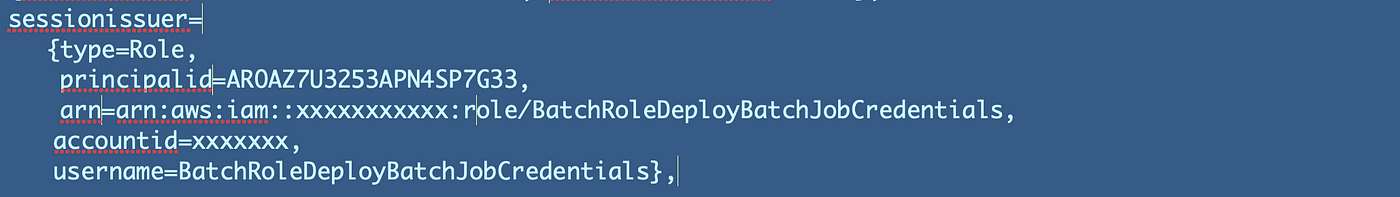

I also see the following within this blob:

sessionissuer={type=Role, principalid=xxxxxxxxx, arn=arn:aws:iam::xxxxxxx:role/BatchRoleDeployBatchJobCredentials, accountid=xxxxxx, username=BatchRoleDeployBatchJobCredentials},

What if I wanted to get at that username “BatchRoleDeployBatchJobCredentials”? I’d have to add a few things to identify that particular value. It gets a bit tricky to figure out the path to the value we want from that big blob, so let’s take it a step at a time.

The beginning of the blob looks like this:

[{"userIdentity":"{type=AssumedRole, principalid=

We can add a dot (.) instead of the { to get the next value. I’m also going to remove eventID because that’s not helping me at the moment. Make sure you have our data source after FROM as shown above.

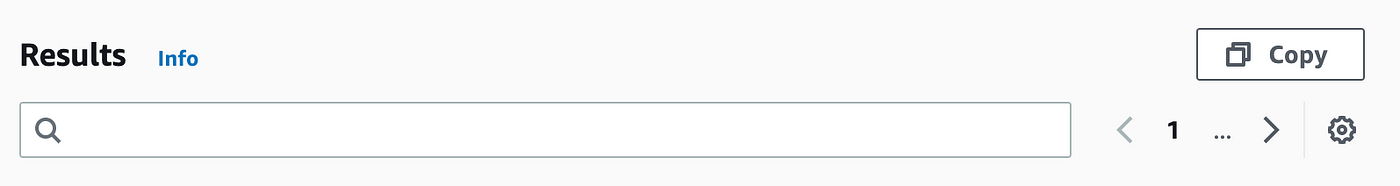

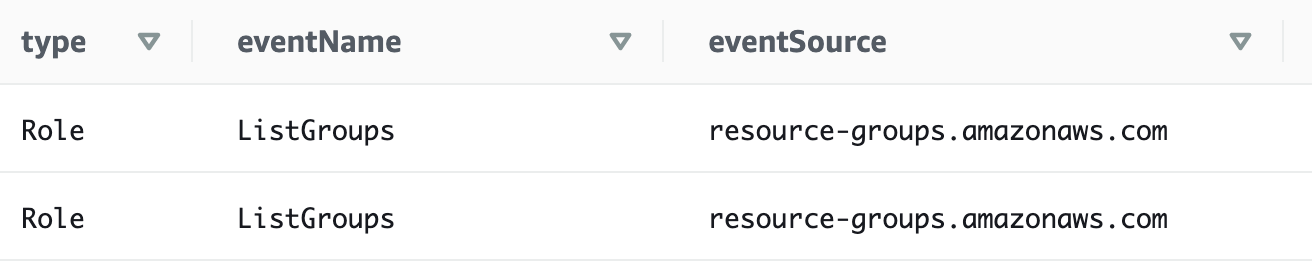

Run that query and it works. You get the type which is AssumedRole for these events:

We want something deeper down the stack. Let’s organize the JSON so it’s easier to understand the structure by putting each curly brace ( { or } ) on its own line and a line break after each comma. Indent the value after each curly brace.

I removed a few values but we end up with the structure below.

    [
      {
        "userIdentity":
        "{
          type=AssumedRole,
          principalid=xxxx:botocore-session-xxxx,
          ...
          sessioncontext=
          {
            attributes=
            {
              creationdate=2022-08-14 17:38:28.000,
              mfaauthenticated=false
            },
            sessionissuer=
            {
              type=Role,

Here’s how to construct the path to the value that we want to retrieve.

- We want the value of an attribute of userIdentity.

- sessioncontext is an attribute of userIdentity

- sessionissuer is an attribute of sessioncontext

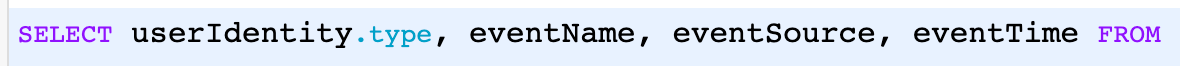

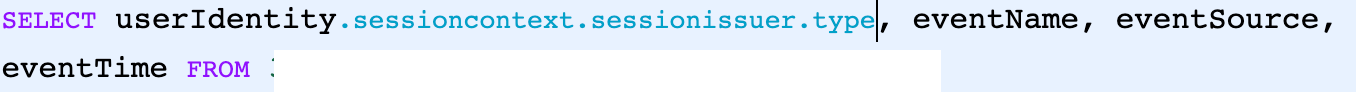

- type is in sessionissuer

Try: userIdentity.sessioncontext.sessionissuer.type

Appears to work:

But we want a different value. Let’s look at sessionissuer in more detail:

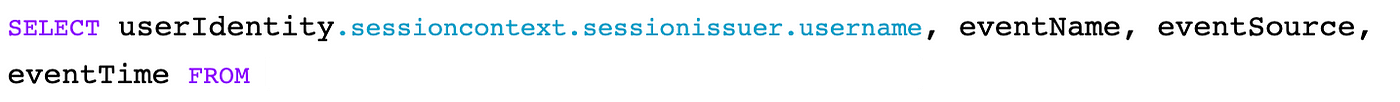

There are more fields than that but the username is the one we’re after. Change type to username.

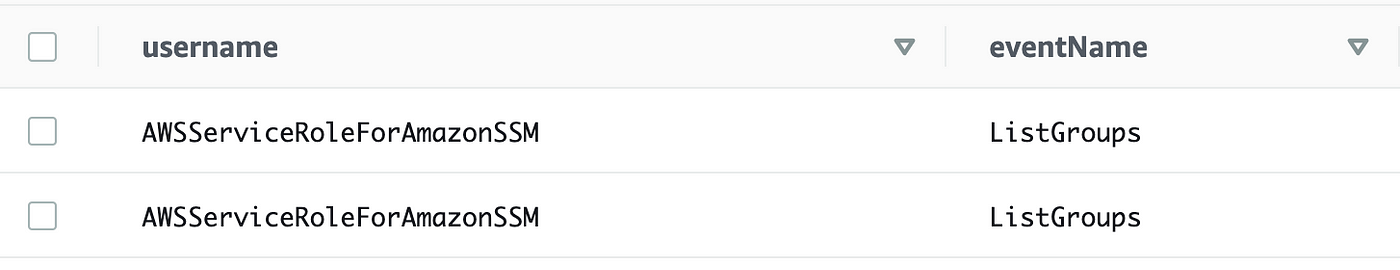

There we go. Now we can see what roles are performing what actions.
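If you want to double-check a dotted path like this offline, you can parse the copied blob and walk it. The sketch below is a hypothetical helper, not part of any AWS tooling. It assumes the blob uses the `key=value` and `{...}` layout shown above and that values never contain commas or braces. The sample blob is a trimmed version of the structure above.

```python
def parse_blob(text):
    """Parse '{k=v, k={...}}' text into nested dicts (values are kept as strings)."""
    value, _ = _parse_value(text.strip(), 0)
    return value


def _parse_value(s, i):
    if s[i] == "{":
        return _parse_struct(s, i)
    j = i
    while j < len(s) and s[j] not in ",}":
        j += 1  # a scalar runs until the next comma or closing brace
    return s[i:j].strip(), j


def _parse_struct(s, i):
    result = {}
    i += 1  # skip the opening "{"
    while True:
        while s[i] in " ,":
            i += 1  # skip separators between pairs
        if s[i] == "}":
            return result, i + 1
        eq = s.index("=", i)
        key = s[i:eq].strip()
        result[key], i = _parse_value(s, eq + 1)


def get_path(data, dotted):
    """Follow a dotted path such as 'sessioncontext.sessionissuer.username'."""
    for part in dotted.split("."):
        data = data[part]
    return data


blob = (
    "{type=AssumedRole, principalid=xxxx:botocore-session-xxxx, "
    "sessioncontext={attributes={creationdate=2022-08-14 17:38:28.000, "
    "mfaauthenticated=false}, sessionissuer={type=Role, "
    "username=BatchRoleDeployBatchJobCredentials}}}"
)
identity = parse_blob(blob)
print(get_path(identity, "sessioncontext.sessionissuer.username"))
```

Each dot in the path corresponds to stepping into one more pair of curly braces, which is the same rule used to build the path in the query.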

But we want to restrict the query to our batch job role. Copy the username that we can see in the above JSON output:

Add a WHERE clause to the query where the attribute we are querying equals that value:
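As a rough sketch of what that query text looks like, here is a small helper that assembles it. The function name and `EXAMPLE_DATA_STORE_ID` are placeholders of mine, and the selected columns are just one reasonable choice. Use the ID at the end of your own data store ARN.

```python
def role_actions_query(data_store_id, role_name):
    """Build a CloudTrail Lake query for events issued under one role's sessions."""
    safe_role = role_name.replace("'", "''")  # keep the value inside the SQL string literal
    return (
        "SELECT eventTime, eventSource, eventName, "
        "userIdentity.sessioncontext.sessionissuer.username "
        f"FROM {data_store_id} "
        "WHERE userIdentity.sessioncontext.sessionissuer.username = "
        f"'{safe_role}'"
    )


# "EXAMPLE_DATA_STORE_ID" is a placeholder, not a real data store.
query = role_actions_query("EXAMPLE_DATA_STORE_ID", "BatchRoleDeployBatchJobCredentials")
print(query)
```

Paste the printed text into the CloudTrail Lake editor and run it there.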

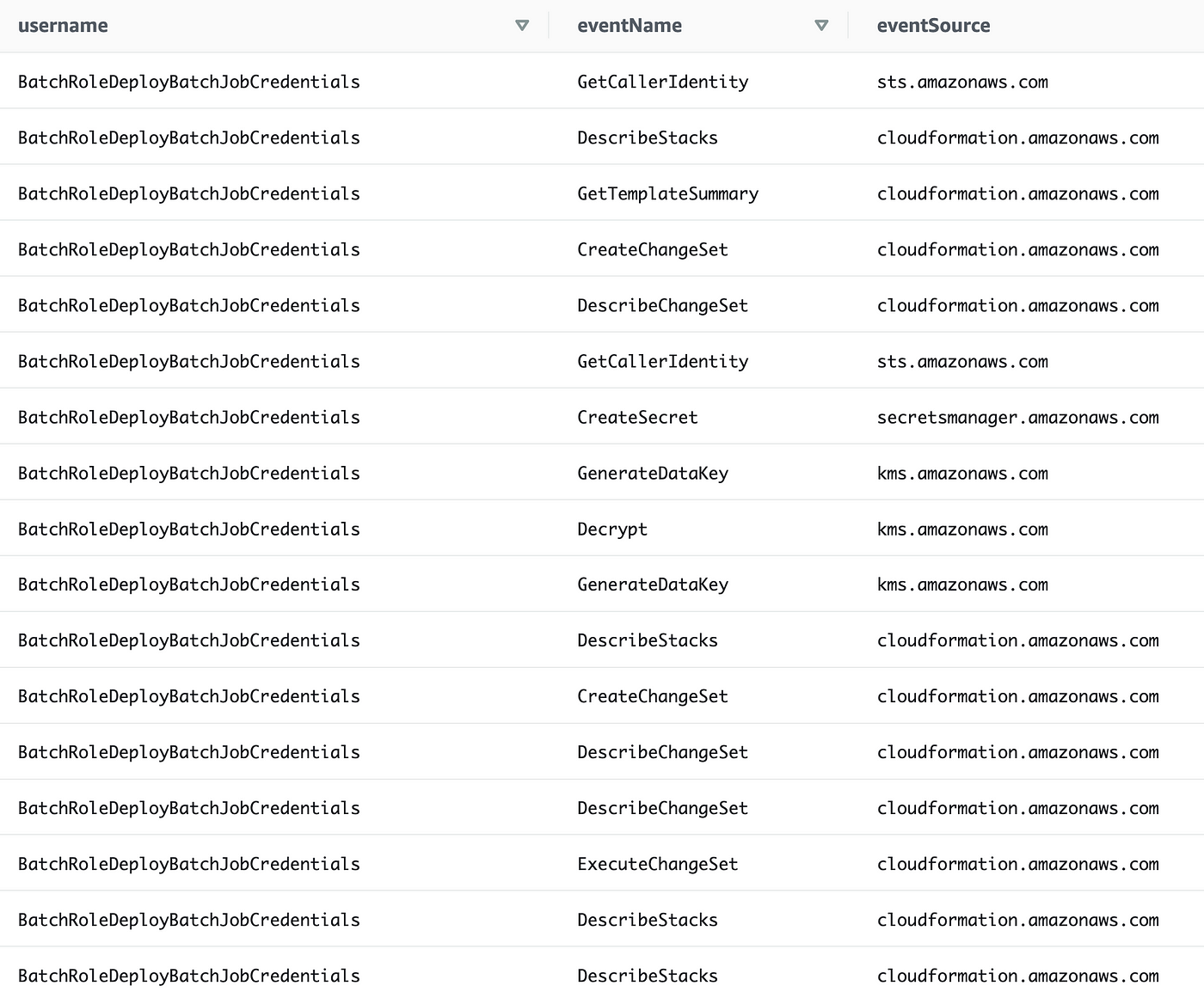

Finally. We can see all the actions taken by our batch job role:

We can now easily add each one of these to our policy document, and presuming our batch job only takes these actions going forward and that CloudTrail is logging every action, this should work.

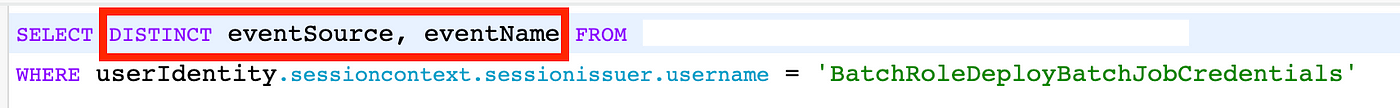

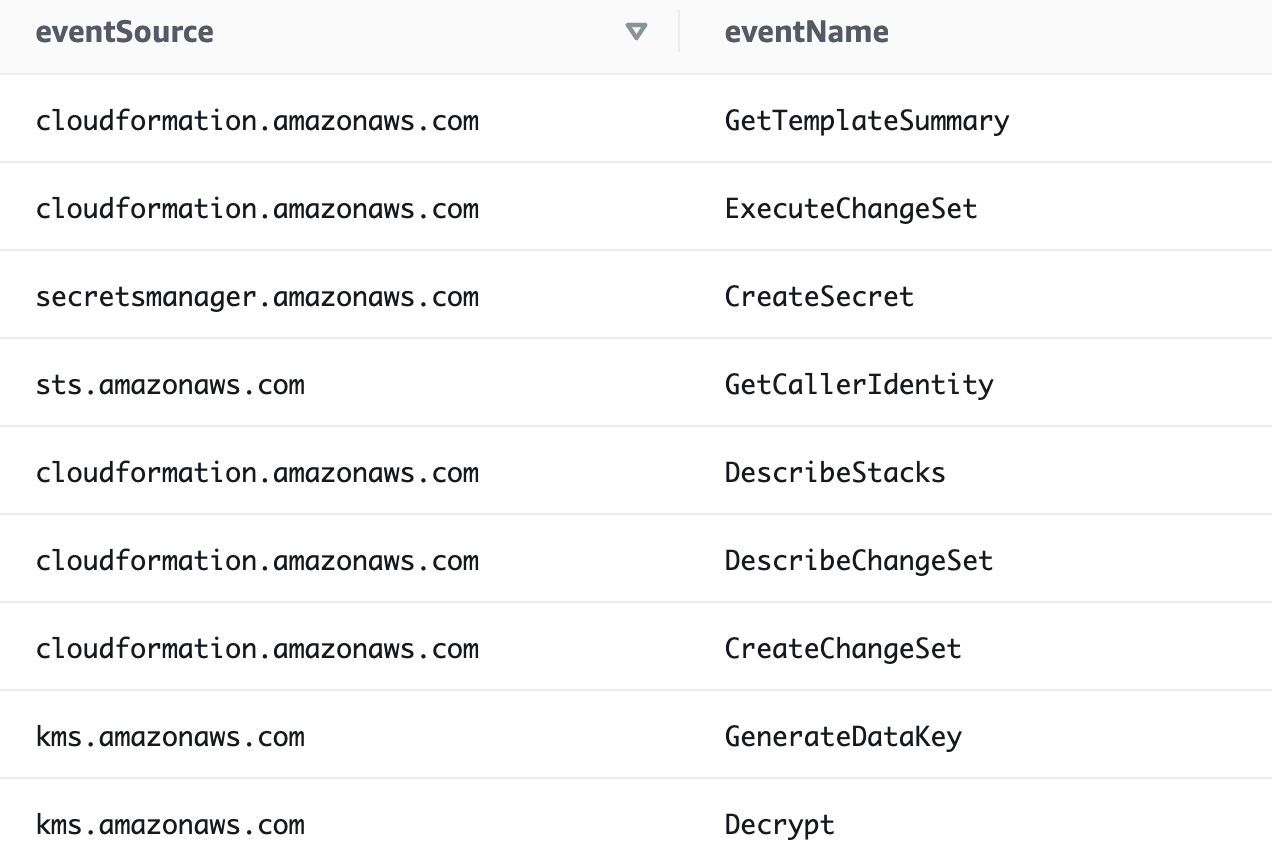

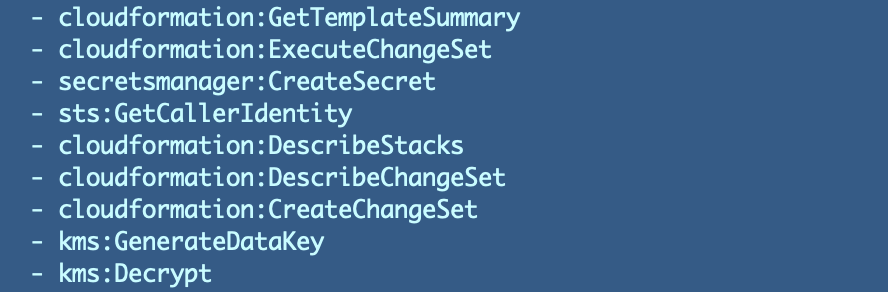

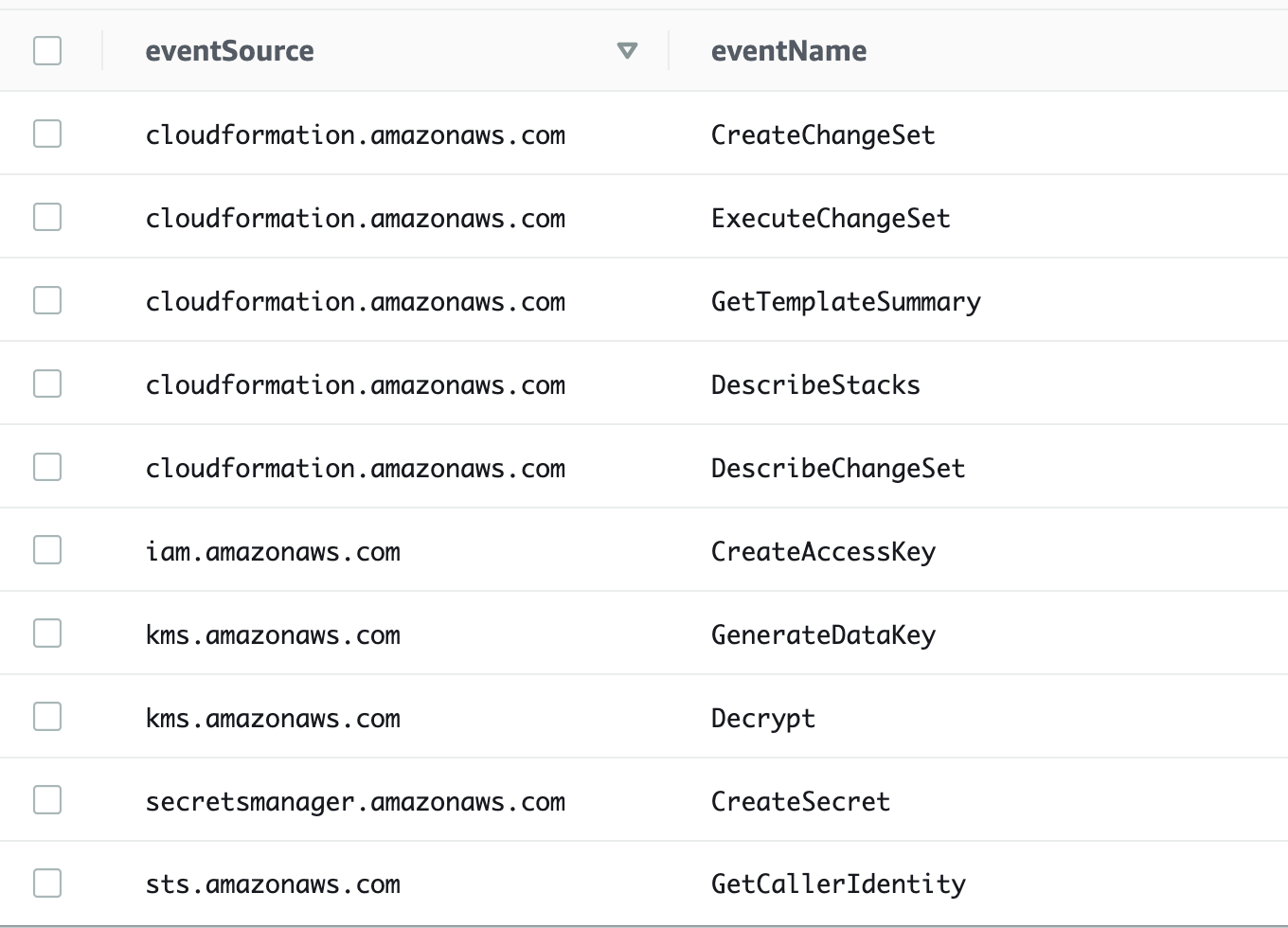

We can do one more thing to make it a bit easier to update our role. Remove all the extraneous fields, put the service before the action in the list, and add the DISTINCT keyword to only show a list of unique results.
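A sketch of the trimmed-down query, written as a Python string so the placeholders are easy to swap. `EXAMPLE_DATA_STORE_ID` is a placeholder for your own data store ID, and the exact text may differ slightly from what I ran in the console.

```python
DATA_STORE_ID = "EXAMPLE_DATA_STORE_ID"  # placeholder: the ID at the end of your data store ARN
ROLE_NAME = "BatchRoleDeployBatchJobCredentials"

# Service first, then action, with DISTINCT so each pair appears only once.
distinct_actions_query = f"""
SELECT DISTINCT eventSource, eventName
FROM {DATA_STORE_ID}
WHERE userIdentity.sessioncontext.sessionissuer.username = '{ROLE_NAME}'
""".strip()

print(distinct_actions_query)
```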

That query results in the following which are the actions we should need:

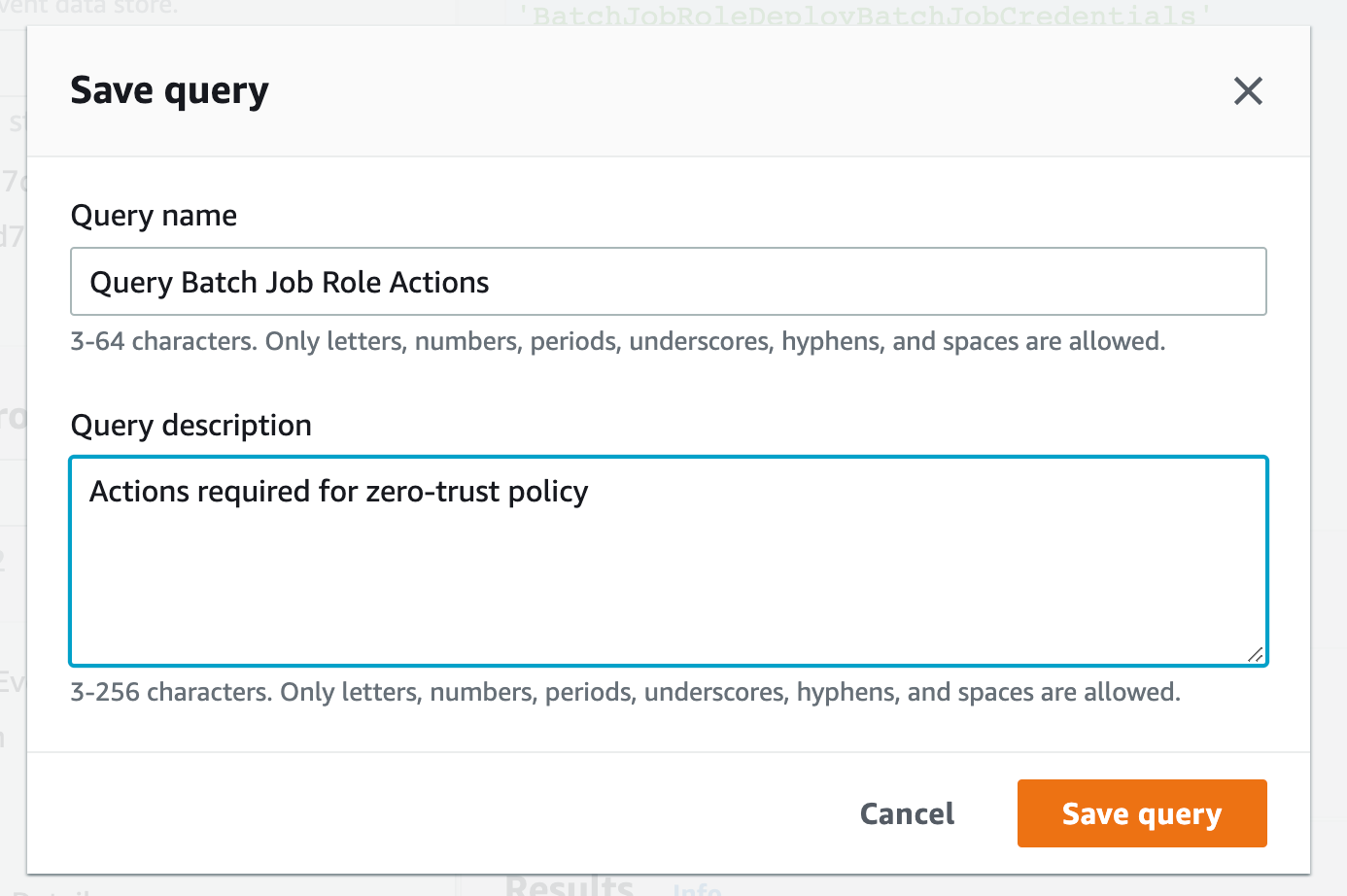

Now before you do anything else, save this query. I didn’t when I was writing this post, navigated away from the screen, and had to re-create it.

Click Save at the bottom of the screen.

Give your query a name and description.

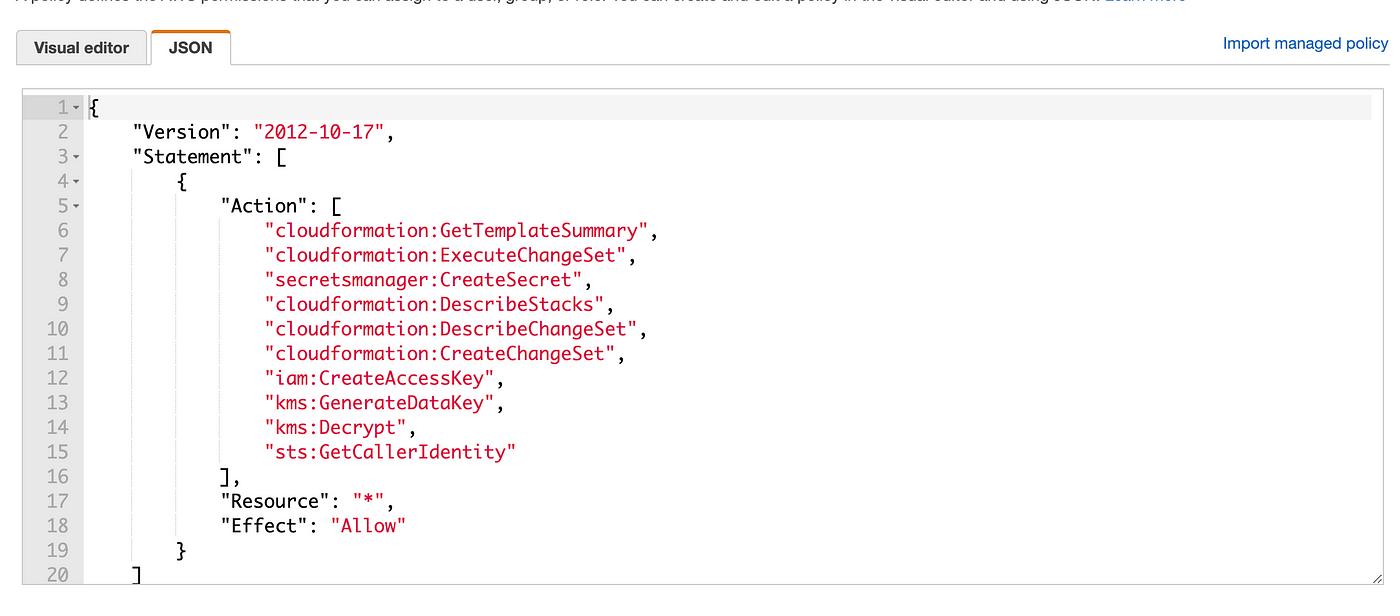

You can essentially copy and paste that list into your policy document and edit it a bit. Remove “.amazonaws.com” and put a colon between the two values. If I ran this on the command line I could easily construct the policy. (So could AWS with their policy generator.)
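That edit is mechanical enough to script. Here is a minimal sketch, assuming the query results are available as (eventSource, eventName) pairs. The rows below are illustrative, not my actual output, and `to_iam_actions` is a hypothetical helper. Keep in mind that CloudTrail event names don’t always match IAM action names exactly, and `"Resource": "*"` is only a stand-in that you should narrow.

```python
import json

SUFFIX = ".amazonaws.com"


def to_iam_actions(rows):
    """Turn (eventSource, eventName) rows into sorted, de-duplicated 'service:Action' strings."""
    actions = set()
    for event_source, event_name in rows:
        service = event_source[: -len(SUFFIX)] if event_source.endswith(SUFFIX) else event_source
        actions.add(f"{service}:{event_name}")
    return sorted(actions)


# Illustrative rows only.
rows = [
    ("secretsmanager.amazonaws.com", "CreateSecret"),
    ("kms.amazonaws.com", "Decrypt"),
    ("iam.amazonaws.com", "CreateAccessKey"),
    ("kms.amazonaws.com", "Decrypt"),
]
actions = to_iam_actions(rows)

policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": actions, "Resource": "*"}  # narrow Resource before real use
    ],
}
policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

Review the generated list by hand before it goes anywhere near a real role.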

Do you notice anything strange about the above list?

Decrypt but not Encrypt?

First of all, we initially wanted to give this policy ENCRYPT permissions only to create our new credentials and store them in Secrets Manager. We don’t want to allow it to decrypt the values it is creating and storing in Secrets Manager later. Encrypt is never called, and yet Decrypt is. This seems like a bug in my opinion and I wrote about it here:

This implementation prevents companies from creating separation of duties between encrypt and decrypt capabilities.

However, at least this role can only perform the action CreateSecret on Secrets Manager. It cannot retrieve a secret. Even though this role has decrypt permissions, it cannot retrieve the secret, so as long as we don’t give it that permission we’re OK. It would be clearer to segregate encrypt and decrypt permissions.

But what if we need to update the secret? What if the secret needs to be deleted and recreated? For now we will assume that is a batch job override that happens outside the batch job. This role doesn’t get those permissions. Its sole purpose is to create and store the credentials.

IAM actions are missing?

There’s one curious thing about this list. I don’t see the IAM actions to create the Access Key and Secret Key that we already added to the policy earlier in this post:

https://medium.com/@2ndsightlab/how-to-create-zero-trust-aws-policies-45f9e778562b

Why aren’t they showing up here?

Let’s make sure those actions were actually taken by our batch job role.

First, I deleted my CloudFormation stack and verified that the access key does not exist. Then I re-ran the stack to recreate it. Back to CloudTrail Lake to find those entries.

The IAM entries for this username definitely do not exist. In fact, querying on any IAM events shows that the IAM events do not exist at all:

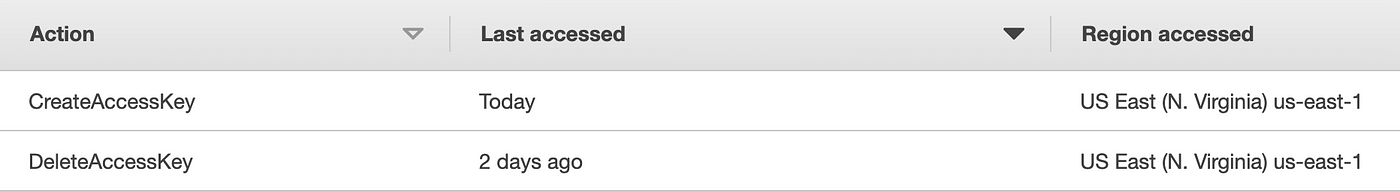

Well, I already showed you how to find those actions in the last post so let’s review the IAM Access Advisor for this role:

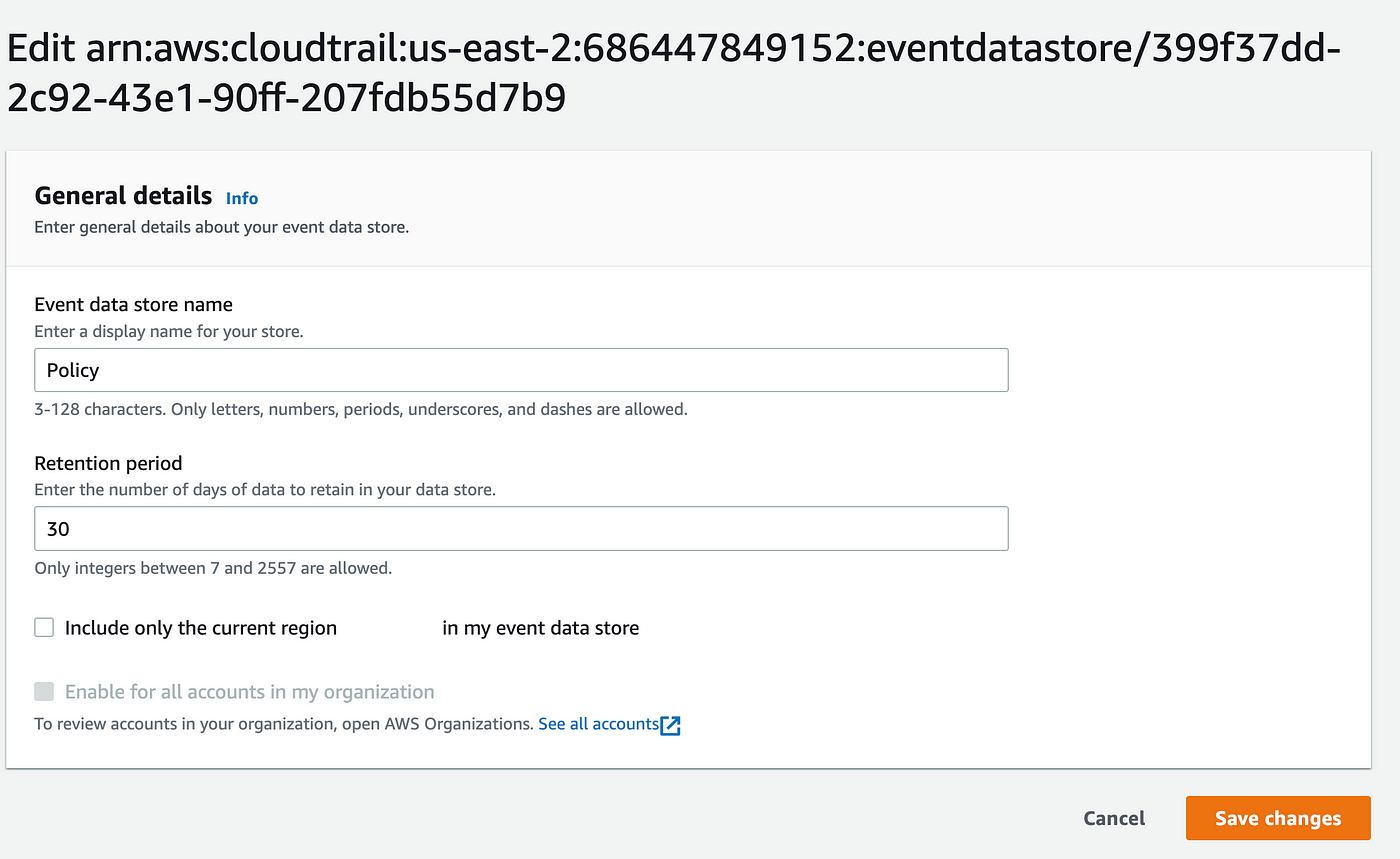

Turns out these IAM actions are logged in us-east-1 so we’d have to include that in our CloudTrail Lake query if we want to see those actions as well. We can switch over to that region and repeat the whole process again, or we can add all regions to our data store. I don’t expect I have too much data in the other regions so let’s see if we can add that to the existing store or if we have to create a new one.
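One way to confirm where the IAM events land, once a data store covers more than one region, is to ask for the region alongside the event name. This is a sketch with a placeholder data store ID and a helper name of my own. `awsRegion` and `eventSource` are standard CloudTrail record fields.

```python
def iam_events_by_region_query(data_store_id):
    """Build a query listing which regions IAM events were recorded in."""
    return (
        "SELECT DISTINCT awsRegion, eventName "
        f"FROM {data_store_id} "
        "WHERE eventSource = 'iam.amazonaws.com'"
    )


region_query = iam_events_by_region_query("EXAMPLE_DATA_STORE_ID")  # placeholder ID
print(region_query)
```

If the data store is limited to a single region other than us-east-1, this query would be expected to come back empty.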

If we return to our event data store like we did above and edit it, we can uncheck the box to only include a single region:

I’m not sure how long it takes for the data to show up but let’s try to run our last query again.

When I run my query again I do not get any IAM events.

Perhaps I need to run batch job actions again to get them to appear in these logs.

No. I can see the actions in the us-east-1 region but I cannot get them in my CloudTrail Lake query.

To fix the problem maybe I have to create a new CloudTrail Lake.

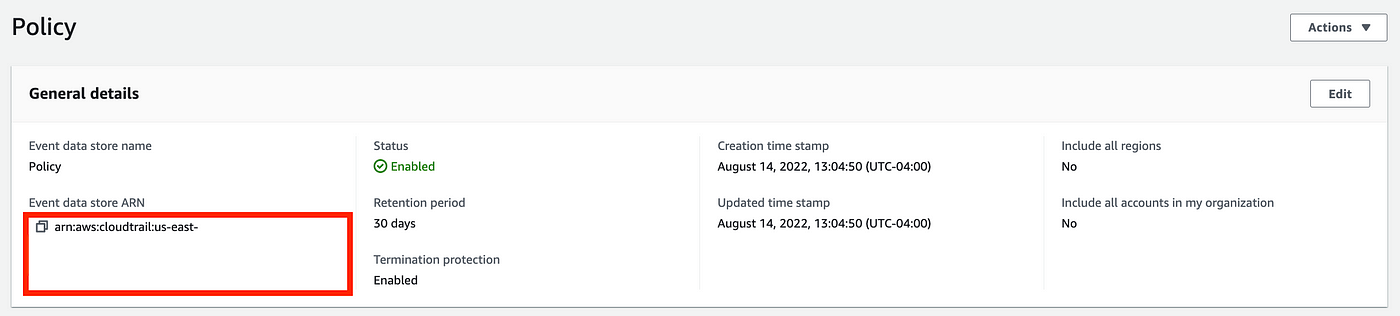

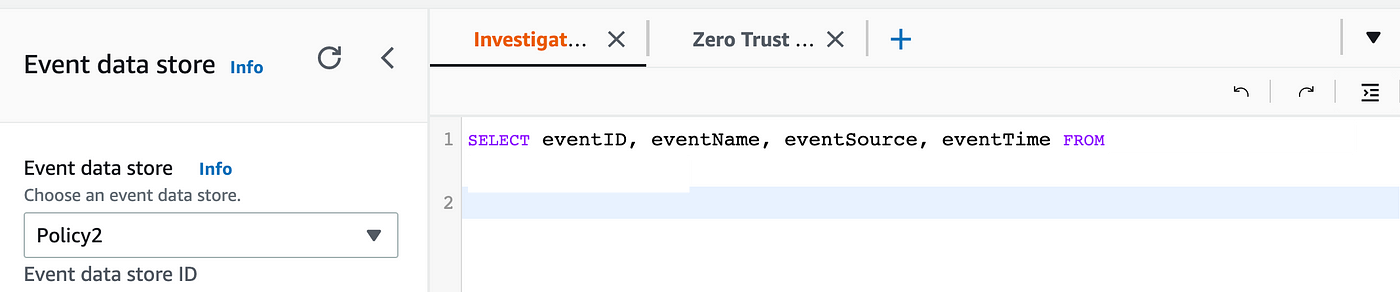

I gave my new data store the name Policy 2 and used all the same settings as before except I unchecked the box to limit the logs to the current region.

Then I chose Policy 2 on the left hand side. I made sure my query referenced the new data store after the “FROM” keyword. I ran the query again.

No dice. In fact, I was getting no logs at all from CloudTrail Lake. I fiddled around with this and my old data store for more than a few minutes, and then my SSO session timed out. I don’t know if that was the root cause of the issue but after starting a new session I could query all the regions and all the data showed up. I also hit a hidden character bug when switching between data stores. I wrote about them here if you are interested but I hope they are fixed by the time you read this.

You can select your saved query, change the data store, and run it again and you’ll see more results this time:

The only other odd thing I notice now is that there is no DeleteAccessKey action even though that showed up in IAM access advisor for this role. I don’t recall ever using this role outside of this batch job script so I’m not sure why the Delete Key action is listed in the console, but I’m going to go ahead and leave it out of my role policy and test.

Test our new zero-trust policy

The first thing we need to do is re-deploy the batch job policy. That is not included in the test script in this folder because there is a delay before IAM changes take effect after a policy gets updated.

Run the deploy.sh script in the root folder of the batch job:

./deploy.sh

Of course we want to double check that our policy got updated and it did:

Next we need to delete the CloudFormation stack for our job otherwise there will be no changes and the actions we are trying to test won’t execute. Delete this stack:

BatchJobAdminCredentials

Now you might want to wait a bit or else just run the job and then run it again later to make sure all the IAM permissions have kicked in.
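The wait-and-retry idea can be sketched as a small loop. This is not part of my scripts. `retry_until_allowed` is a hypothetical helper, and `PermissionError` stands in for whatever access-denied error your job actually raises. The demo uses a fake job and skips the real sleeping.

```python
import time


def retry_until_allowed(run_job, attempts=5, delay_seconds=10, sleep=time.sleep):
    """Call run_job() until it stops raising PermissionError or attempts run out."""
    for attempt in range(1, attempts + 1):
        try:
            return run_job()
        except PermissionError:
            if attempt == attempts:
                raise  # still denied after the last attempt
            sleep(delay_seconds)  # give the policy change time to take effect


# Demo with a fake job that is denied twice before the policy "kicks in".
attempts_seen = []


def fake_job():
    attempts_seen.append(1)
    if len(attempts_seen) < 3:
        raise PermissionError("AccessDenied (simulated)")
    return "deployed"


result = retry_until_allowed(fake_job, sleep=lambda seconds: None)  # no real waiting in the demo
print(result, "after", len(attempts_seen), "attempts")
```

Only permission errors are retried, so a genuine bug in the job still fails immediately.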

Yes! It worked!

Finally after all those prior blog posts and trial and error we have a way to create a zero trust policy that works. We could take this a step further to automate the process.

But what we do *NOT* want to do is fully automate it to the point where batch jobs are creating their own permissions in a production environment. You could create a tool for developers to generate a zero trust policy but then the developer reviews it, the QA team tests it, perhaps it goes through some security checks, and then it goes to production.

For my purposes at this moment it is faster for me to edit the query and I’m more interested in some other automation I want to get done so I’m going to move on.

Teri Radichel


© 2nd Sight Lab 2022
