Whether you have fully migrated from Twitter to Mastodon, are just trying out the "fediverse," or have been a longtime Mastodon user, you might miss being able to search through the full text of "toots" (also known as posts). In Mastodon, hashtags are searchable but other, non-hashtag text is not. The unavailability of full-text search lets users control how much of their content is easily discoverable by strangers. But what if you want to be able to search your own posts?

Some Mastodon instances allow users to do full-text searches of their own toots but others don't, depending on the admin. Fortunately, it's easy to full-text search your own Mastodon posts, thanks to R and the rtoot package developed by David Schoch. That's what this article is about.

Set up a full-text search

First, install the rtoot package if it's not already on your system with install.packages("rtoot"). I'll also be using the dplyr and DT packages. All three can be loaded with the following commands:

# install.packages("rtoot") # if needed

library(rtoot)

library(dplyr)

library(DT)

Next, you'll need your Mastodon ID, which isn't the same as your user name and instance. The rtoot package includes a way to search across the fediverse for accounts. That's a useful tool if you want to see whether someone has an account anywhere on Mastodon. But since it also returns account IDs, you can use it to find your own ID, too.

To search for my own ID, I'd use:

accounts <- search_accounts("smach@fosstodon.org")

That will likely bring back a data frame with only one result. If you search for just a user name with no instance, such as search_accounts("posit") to see whether Posit (formerly RStudio) is active on Mastodon, there could be more results.

My search had only one result, so my ID is the first (as well as only) item in the id column:

my_id <- accounts$id[1]
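When a search such as search_accounts("posit") returns several rows, taking row 1 blindly could grab the wrong account. Below is a minimal sketch that picks the row whose handle matches exactly. It assumes the results include an `acct` column, as the Mastodon API's Account entity does (for accounts on your own instance, acct is just the user name); `pick_account_id()` is a hypothetical helper, not part of rtoot, and the data frame is mock data.

```r
# Hypothetical helper, not part of rtoot: return the ID of the single row
# whose `acct` (assumed column name) matches exactly, and fail loudly otherwise.
pick_account_id <- function(accounts, acct) {
  hits <- accounts[accounts$acct %in% acct, , drop = FALSE]
  if (nrow(hits) != 1L) {
    stop("Expected exactly one account matching '", acct, "', found ", nrow(hits))
  }
  hits$id[1]
}

# Mock search results standing in for search_accounts("posit")
accounts <- data.frame(
  id = c("109000000000000001", "109000000000000002"),
  acct = c("posit@fosstodon.org", "posit@mastodon.social"),
  stringsAsFactors = FALSE
)

posit_id <- pick_account_id(accounts, "posit@fosstodon.org")
```

Stopping on zero or multiple matches is deliberate: a silent wrong ID would only surface later as someone else's posts in your table.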

I can now retrieve my posts with rtoot's get_account_statuses() function.

Pull and save your data

The default returns 20 results, at least for now, although the limit appears to be quite a bit higher if you set it manually with the limit argument. Do be kind about taking advantage of this setting, however, since most Mastodon instances are run by volunteers who have been facing vastly increased hosting costs recently.

The first time you try to pull your own data, you'll be asked to authenticate. I ran the following to get my 50 most recent posts (note the use of verbose = TRUE to see any messages that might be returned):

smach_statuses <- get_account_statuses(my_id, limit = 50, verbose = TRUE)

Next, I was asked whether I wanted to authenticate. After choosing yes, I got the following query:

On which instance do you want to authenticate (e.g., "mastodon.social")?

Next, I was asked:

What type of token do you want?

1: public

2: user

Since I want the authority to see all activity in my own account, I chose user. The package then saved an authentication token for me, and I could then run get_account_statuses().

The resulting data frame (actually a tibble, a special type of data frame used by tidyverse packages) includes 29 columns. A few are list-columns, such as account and media_attachments, with non-atomic results, meaning the results aren't in a strict two-dimensional format.

I suggest saving this result before going further, so you don't need to re-ping the server if something goes awry with your R session or code. I usually use saveRDS, like so:

saveRDS(smach_statuses, "smach_statuses.Rds")

Trying to save the results as a parquet file doesn't work because of the complex list-columns. Using the vroom package to save as a CSV file works and includes the full text of the list-columns. However, I'd rather save as a native .Rds or .Rdata file.
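To check which columns are list-columns before picking a file format, and to confirm that .Rds keeps them intact, here's a small base R sketch. The column names mimic rtoot output but the values are invented; dropping list-columns before write.csv() is just one option for a flat file, not what vroom does.

```r
# Mock of a statuses table: two atomic columns plus one list-column
statuses <- data.frame(
  id = c("109000000000000001", "109000000000000002"),
  content = c("<p>First post</p>", "<p>Second post</p>"),
  stringsAsFactors = FALSE
)
# A list-column holds one (possibly empty) list per row
statuses$media_attachments <- list(list(), list(list(type = "image")))

# Which columns are list-columns?
list_cols <- names(statuses)[vapply(statuses, is.list, logical(1))]

# saveRDS()/readRDS() round-trips list-columns unchanged
rds_path <- tempfile(fileext = ".Rds")
saveRDS(statuses, rds_path)
restored <- readRDS(rds_path)

# For a flat CSV, one option is to drop the list-columns first
flat <- statuses[, !names(statuses) %in% list_cols, drop = FALSE]
csv_path <- tempfile(fileext = ".csv")
write.csv(flat, csv_path, row.names = FALSE)
```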

Create a searchable table with your results

If all you want is a searchable table for full-text searching, you need only a few of those 29 columns. You'll definitely want created_at, url, spoiler_text (if you use content warnings and want those in your table), and content. If you miss seeing engagement metrics on your posts, add reblogs_count, favourites_count, and replies_count.

Below is the code I use to create data for a searchable table for my own viewing. I added a URL column to create a clickable >> link to each post, which I then append to the end of the post's content. That makes it easy to click through to the original version:

tabledata <- smach_statuses |>

filter(content != "") |>

# filter(visibility == "public") |> # Uncomment if you want to make this public somewhere. By default, results include direct messages.

mutate(

url = paste0("<a target='_blank' href='", uri, "'><strong> >></strong></a>"),

content = paste(content, url),

created_at = as.character(as.POSIXct(created_at, format = "%Y-%m-%d %H:%M UTC"))

) |>

select(CreatedAt = created_at, Post = content, Replies = replies_count, Favorites = favourites_count, Boosts = reblogs_count)

If I were sharing this table publicly, I'd be sure to uncomment filter(visibility == "public") so only my public posts were available. The data returned by get_account_statuses() for your own account includes posts that are unlisted (available to anyone who finds them but not shown on public timelines by default) as well as those set for followers only or sent as direct messages.
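To make the public/private choice harder to forget, you could wrap the steps above in a function with a switch. The sketch below is hypothetical (make_table_data() is not part of rtoot), trims the output to two columns for brevity, and runs on a small mock tibble that uses only columns the code above already relies on: created_at, uri, content, and visibility.

```r
library(dplyr)

# Hypothetical helper, not part of rtoot: turn a statuses tibble into
# table data, optionally keeping only public posts.
make_table_data <- function(statuses, public_only = FALSE) {
  if (public_only) {
    statuses <- filter(statuses, visibility == "public")
  }
  statuses |>
    filter(content != "") |>
    mutate(
      link = paste0("<a target='_blank' href='", uri, "'><strong> >></strong></a>"),
      content = paste(content, link)
    ) |>
    select(CreatedAt = created_at, Post = content)
}

# Mock rows standing in for get_account_statuses() output
statuses <- tibble(
  created_at = c("2022-12-01 14:05", "2022-12-02 09:00", "2022-12-03 18:30"),
  uri = c("https://example.social/1", "https://example.social/2", "https://example.social/3"),
  content = c("<p>Public post</p>", "<p>A DM</p>", ""),
  visibility = c("public", "direct", "public")
)

private_view <- make_table_data(statuses)                     # public post and DM
public_view <- make_table_data(statuses, public_only = TRUE)  # public post only
```

Defaulting public_only to FALSE mirrors the commented-out filter above; flipping the default to TRUE would be the safer choice if the table is mostly destined for sharing.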

There are a number of ways to turn this data into a searchable table. One is the DT package. The code below creates an interactive HTML table with search filter boxes that can use regular expressions. (See Do more with R: Quick interactive HTML tables to learn more about using DT.)

DT::datatable(tabledata, filter = "top", escape = FALSE, rownames = FALSE,

options = list(

search = list(regex = TRUE, caseInsensitive = TRUE),

pageLength = 20,

lengthMenu = c(20, 50, 100),

autoWidth = TRUE,

columnDefs = list(list(width = "80%", targets = 1)) # Post column (0-based index, no row names)

))

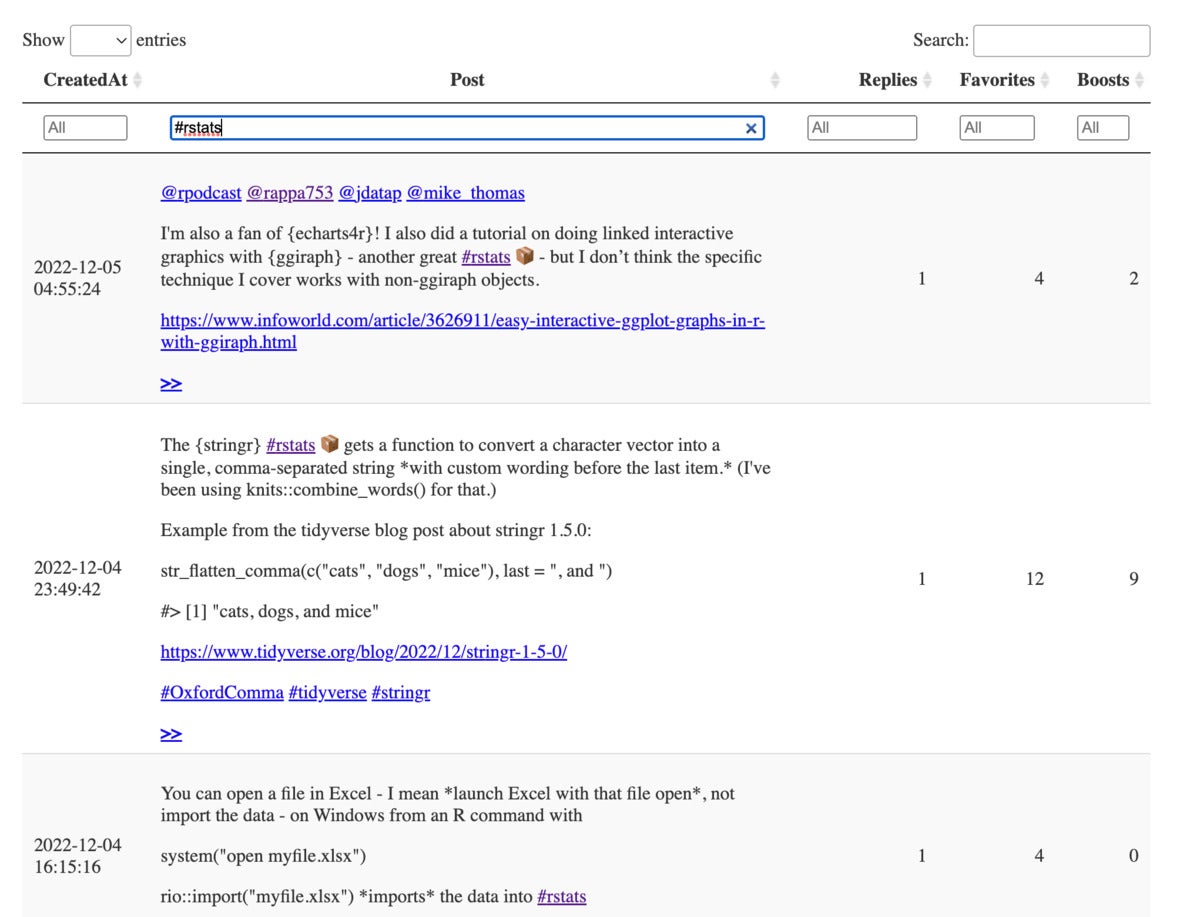

Here is a screenshot of the ensuing desk:

Sharon Machlis

Sharon MachlisAn interactive desk of my Mastodon posts. This desk was created with the DT R package deal utilizing rtoot.

How to pull in new Mastodon posts

It's easy to update your data with new posts, because the get_account_statuses() function includes a since_id argument. To start, find the maximum ID in the existing data:

max_id <- max(smach_statuses$id)

Next, request an update with all the posts since max_id:

new_statuses <- get_account_statuses(my_id, since_id = max_id,

limit = 10, verbose = TRUE)

all_statuses <- bind_rows(new_statuses, smach_statuses)
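One caveat on max(): assuming the id column holds IDs as character strings (which is how the Mastodon API returns them), max() compares them as text, which is only correct when all IDs have the same number of digits. Converting to numeric isn't a safe fix either, since modern 18-digit IDs exceed the 2^53 range that doubles represent exactly. Mixed lengths are unlikely among recent posts, but here's a small hypothetical helper that guards against them anyway:

```r
# Hypothetical helper: the largest ID in a vector of digit-only strings.
# Longer strings are larger numbers; among equal lengths, sorting the
# text in C-locale (radix) order matches numeric order.
max_status_id <- function(ids) {
  ids <- ids[!is.na(ids)]
  ids[order(nchar(ids), ids, method = "radix")][length(ids)]
}

ids <- c("99999", "109331234567890123", "109331234567890124")
max(ids)            # "99999", because "9" sorts after "1" as text
max_status_id(ids)  # "109331234567890124"
```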

If you want to see updated engagement metrics for recent posts already in your data, I'd suggest getting your last 10 or 20 posts overall instead of using since_id. You can then combine that with the existing data and dedupe by keeping the first item. Here's one way to do that:

new_statuses <- get_account_statuses(my_id, restrict = 25, verbose = TRUE)

all_statuses <- bind_rows(new_statuses, smach_statuses) |>

distinct(id, .keep_all = TRUE)
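Why does the order inside bind_rows() matter? distinct() keeps the first row for each id, so whichever data comes first wins. This sketch on mock data (invented IDs and counts) shows that putting the fresh pull first keeps its updated favourites_count, while reversing the order keeps the stale value:

```r
library(dplyr)

# Mock data: existing rows with stale counts, plus a fresh pull that
# overlaps on id "3" with an updated favourites_count
smach_old <- tibble(id = c("3", "2", "1"), favourites_count = c(0, 4, 7))
fresh <- tibble(id = c("4", "3"), favourites_count = c(1, 5))

# Fresh rows first: their updated counts survive deduplication
combined <- bind_rows(fresh, smach_old) |>
  distinct(id, .keep_all = TRUE)

# Old rows first: the stale count for id "3" survives instead
stale <- bind_rows(smach_old, fresh) |>
  distinct(id, .keep_all = TRUE)

combined$favourites_count[combined$id == "3"]  # 5
stale$favourites_count[stale$id == "3"]        # 0
```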

How to read your downloaded Mastodon archive

There is another way to get all your posts, which is especially useful if you've been on Mastodon for a while and have a lot of activity over that period: You can download your Mastodon archive from the website.

In the Mastodon web interface, click the little gear icon above the left column for Settings, then Import and export > Data export. You should see an option to download an archive of your posts and media. You can only request an archive once every seven days, though, and it will not include any engagement metrics.

Once you download the archive, you can unpack it manually or, as I prefer, use the archive package (available on CRAN) to extract the files. I'll also load the jsonlite, stringr, and tidyr packages before extracting files from the archive:

library(archive)

library(jsonlite)

library(stringr)

library(tidyr)

archive_extract("name-of-your-archive-file.tar.gz")

Next, you'll want to look at outbox.json's orderedItems. Here's how I imported that into R:

my_outbox <- fromJSON("outbox.json")[["orderedItems"]]

my_posts <- my_outbox |>

unnest_wider(object, names_sep = "_")
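If unnest_wider() gives you trouble (a boost stores object as a plain URL string rather than a nested object, so the object column can mix types), one alternative is to parse without simplification and pull out fields item by item. This is a sketch I haven't run against a real archive: the JSON below is invented, and it relies only on the fields the code in this section already uses (type, published, object content, object url).

```r
library(jsonlite)

# Made-up stand-in for outbox.json: one original post ("Create") and one
# boost ("Announce"), where the boost's object is only a URL string.
outbox_txt <- '{
  "orderedItems": [
    {"type": "Create", "published": "2022-12-01T14:05:00Z",
     "object": {"url": "https://example.social/@me/1",
                "content": "<p>Hello fediverse</p>"}},
    {"type": "Announce", "published": "2022-12-02T09:00:00Z",
     "object": "https://example.social/@someone/2"}
  ]
}'

# Parse without simplification so every item stays a plain named list
items <- fromJSON(outbox_txt, simplifyVector = FALSE)[["orderedItems"]]

# Keep only original posts whose object is a list that has content
is_post <- vapply(items, function(x) {
  identical(x[["type"]], "Create") && is.list(x[["object"]]) &&
    !is.null(x[["object"]][["content"]])
}, logical(1))
posts <- items[is_post]

# One row per post, with the fields the searchable table needs
posts_df <- data.frame(
  CreatedAt = vapply(posts, function(x) x[["published"]], character(1)),
  Post = vapply(posts, function(x) x[["object"]][["content"]], character(1)),
  object_url = vapply(posts, function(x) x[["object"]][["url"]], character(1)),
  stringsAsFactors = FALSE
)
```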

From there, I created a data set for a searchable table similar to the one from the rtoot results. This archive includes other activity, such as boosting another post, which is why I'm filtering both for type Create and to make sure object_content has a value. As before, I add a clickable >> URL to the post content and tweak how dates are displayed:

search_table_data <- my_posts |>

filter(type == "Create") |>

filter(!is.na(object_content)) |>

mutate(

url = paste0("<a target='_blank' href='", object_url, "'><strong> >></strong></a>")

) |>

rename(CreatedAt = published, Post = object_content) |>

mutate(CreatedAt = str_replace_all(CreatedAt, "T", " "),

CreatedAt = str_replace_all(CreatedAt, "Z", " "),

Post = str_replace(Post, "</p>$", " "),

Post = paste0(Post, " ", url, "</p>")

) |>

select(CreatedAt, Post) |>

arrange(desc(CreatedAt))
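The str_replace_all() calls above keep CreatedAt as text, which still sorts correctly because ISO 8601 timestamps sort lexically. If you'd rather have real date-times (for example, to filter by date range), one alternative is to parse them in base R. This assumes the published values look like 2022-12-01T14:05:00Z with no fractional seconds; the sample values are invented.

```r
published <- c("2022-11-05T08:15:30Z", "2022-12-01T14:05:00Z")

# Parse UTC timestamps of the assumed form into POSIXct date-times
created <- as.POSIXct(published, format = "%Y-%m-%dT%H:%M:%SZ", tz = "UTC")

# Display format similar to the table above, without a trailing space
format(created, "%Y-%m-%d %H:%M")
# "2022-11-05 08:15" "2022-12-01 14:05"
```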

Then, it is one other straightforward single perform to make a searchable desk with DT:

datatable(search_table_data, rownames = FALSE, escape = FALSE,

filter = "top", options = list(search = list(regex = TRUE)))

That's handy for your own use, but I wouldn't use archive results to share publicly, since it's less obvious which of these might have been private messages (you'd need to do some filtering on the to column).
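Here's a minimal sketch of that kind of filtering on the to field. It assumes Mastodon's ActivityPub conventions: public posts list the ActivityStreams Public collection in to, unlisted posts put it in cc instead, and followers-only or direct posts omit it. The items are mock lists shaped like what jsonlite::fromJSON(..., simplifyVector = FALSE) returns, and is_public() is a hypothetical helper.

```r
# Assumption: public posts address this collection in `to`
public_uri <- "https://www.w3.org/ns/activitystreams#Public"

# Hypothetical helper: TRUE when an outbox item is addressed to Public
is_public <- function(item) {
  public_uri %in% unlist(item[["to"]])
}

# Mock outbox items: public, unlisted, and direct
items <- list(
  list(type = "Create", to = list(public_uri)),
  list(type = "Create", to = list("https://example.social/users/me/followers"),
       cc = list(public_uri)),
  list(type = "Create", to = list("https://other.example/users/friend"))
)

public_items <- Filter(is_public, items)
```

Checking only to is deliberately strict: it also leaves out unlisted posts, which are not private but were not meant for public timelines either.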

When you’ve got any questions or feedback about this text, yow will discover me on Mastodon at smach@fosstodon.org in addition to often nonetheless on Twitter at @sharon000 (though I am undecided for the way for much longer). I am additionally on LinkedIn.

For more R tips, head to InfoWorld's Do More With R page.

Copyright © 2022 IDG Communications, Inc.