Build a robust Feature Selection workflow for any supervised problem

Learn to face one of the biggest challenges of machine learning with the best of Sklearn feature selectors.

Introduction

Today, it’s common for datasets to have hundreds if not thousands of features. On the surface, this might seem like a good thing: more features give more information about each sample. But more often than not, these additional features don’t provide much value and introduce complexity.

The biggest challenge of Machine Learning is to create models that have robust predictive power by using as few features as possible. But given the massive sizes of today’s datasets, it’s easy to lose track of which features are important and which ones aren’t.

That’s why there is an entire skill to be learned in the ML field: feature selection. Feature selection is the process of choosing a subset of the most important features while trying to retain as much information as possible (an excerpt from the first article in this series).

As feature selection is such a pressing issue, there is a myriad of solutions you can select from🤦♂️🤦♂️. To spare you some pain, I’ll teach you 3 feature selection techniques that, when used together, can supercharge any model’s performance.

This article will give you an overview of these techniques and how to use them without wondering too much about the internals. For a deeper understanding, I’ve written separate posts for each with the nitty-gritty explained. Let’s get started!

Intro to the dataset and the problem statement

We will be working with the Ansur Male dataset, which contains more than 100 different US Army personnel body measurements. I’ve been using this dataset extensively throughout this feature selection series because it contains 98 numeric features, which makes it a perfect dataset to teach feature selection.

We will be trying to predict the weight in pounds, so it’s a regression problem. Let’s establish a base performance with simple Linear Regression. LR is a good candidate for this problem because we can expect body measurements to be linearly correlated:

For the base performance, we got an impressive R-squared of 0.956. However, this might be because there is also a weight in kilograms column among the features, giving the algorithm all it needs (we are trying to predict weight in pounds). So, let’s try without it:

Now, we have 0.945, but we managed to reduce model complexity.
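The listings for these two runs are not shown here. As a minimal sketch, assuming a small synthetic stand-in for the ANSUR data (the column names and coefficients below are made up for illustration), the comparison might look like this. The scores it produces will differ from the 0.956 and 0.945 reported above.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the real data (hypothetical columns and coefficients).
rng = np.random.default_rng(0)
n = 500
stature = rng.normal(175, 7, n)
waist = rng.normal(90, 10, n)
weight_kg = 0.5 * stature + 0.8 * waist - 80 + rng.normal(0, 3, n)
df = pd.DataFrame({
    "stature": stature,
    "waist_circumference": waist,
    "weight_kg": weight_kg,
    "weight_lbs": weight_kg * 2.20462,  # the target, derived from weight_kg
})

X = df.drop(columns="weight_lbs")
y = df["weight_lbs"]
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Baseline: all features, including the leaking kilograms column.
baseline_r2 = LinearRegression().fit(X_train, y_train).score(X_test, y_test)

# Same model after dropping the kilograms column.
X_train_nk = X_train.drop(columns="weight_kg")
X_test_nk = X_test.drop(columns="weight_kg")
r2_no_kg = LinearRegression().fit(X_train_nk, y_train).score(X_test_nk, y_test)

print(f"with weight_kg: {baseline_r2:.3f}, without: {r2_no_kg:.3f}")
```

In this toy setup the target is an exact multiple of the kilograms column, so the first score is essentially perfect. That is the kind of leakage the paragraph above warns about.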

Step I: Variance Thresholding

The first technique targets the individual properties of each feature. The idea behind Variance Thresholding is that features with low variance don’t contribute much to overall predictions. These features have distributions with too few unique values or variances so low that they hardly matter. VT helps us remove them using Sklearn.

One concern before applying VT is the scale of features. As the values in a feature get bigger, the variance grows with the square of the scale. This means that features measured on different scales have variances that cannot be safely compared. So, we must apply some form of normalization to bring all features to the same scale and then apply VT. Here is the code:
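A minimal sketch of this step, assuming a toy DataFrame with made-up columns and a threshold picked only for this toy data (not the value used on the real dataset):

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import VarianceThreshold

# Toy data (hypothetical columns): two informative features, one near-constant.
rng = np.random.default_rng(0)
n = 300
X = pd.DataFrame({
    "stature": rng.normal(1750, 70, n),
    "foot_length": rng.normal(270, 13, n),
    "near_constant": rng.normal(100, 0.1, n),
})

# Divide every value by its feature's mean so variances become comparable.
X_norm = X / X.mean()

# Threshold is an assumption chosen for this toy data.
vt = VarianceThreshold(threshold=0.0005)
vt.fit(X_norm)

# Boolean mask: True for features whose normalized variance exceeds the threshold.
mask = vt.get_support()

# Subset the original (unnormalized) data, keeping the column names.
X_reduced = X.loc[:, mask]
print(X_reduced.columns.tolist())
```

Subsetting with the mask rather than calling `.transform()` keeps the result a DataFrame with its original column names.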

After normalization (here, we are dividing each value by its feature’s mean), you should choose a threshold between 0 and 1. Instead of using the .transform() method of the VT estimator, we are using get_support(), which gives a boolean mask (True values for features that should be kept). The mask can then be used to subset the data while preserving the column names.

This is a simple technique, but it can go a long way in eliminating useless features. For deeper insight and more explanation of the code, you can head over to my separate article on Variance Thresholding.

Step II: Pairwise Correlation

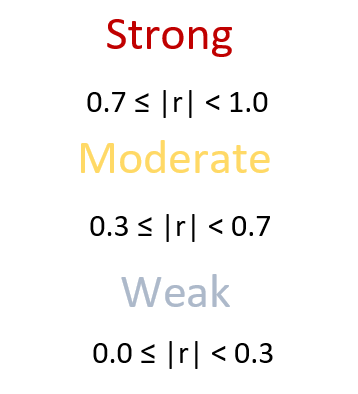

We will further trim our dataset by focusing on the relationships between features. One of the best metrics that show a linear connection is Pearson’s correlation coefficient (denoted r). The logic behind using r for feature selection is simple. If the correlation between features A and B is 0.9, a straight-line fit on A explains about 81% (r squared) of the variance in B, so B carries little information that A does not already provide. In other words, in a dataset where A is present, you can discard B or vice versa.
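One way to read a correlation of about 0.9 is through its square, which is the share of variance a straight-line fit explains. The sketch below checks this on synthetic data. The variables `a` and `b` are made up for illustration.

```python
import numpy as np

# Two synthetic features built to have a correlation of roughly 0.9.
rng = np.random.default_rng(0)
a = rng.normal(size=1000)
b = 0.9 * a + np.sqrt(1 - 0.9**2) * rng.normal(size=1000)

# Pearson's correlation coefficient between a and b.
r = np.corrcoef(a, b)[0, 1]

# Fit b = slope * a + intercept and compute the share of variance explained.
slope, intercept = np.polyfit(a, b, 1)
residuals = b - (slope * a + intercept)
r2 = 1 - residuals.var() / b.var()

print(f"r = {r:.3f}, r squared = {r**2:.3f}, variance explained = {r2:.3f}")
```

The last two printed numbers agree, which is why a high absolute r between two features suggests that one of them is largely redundant.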

There is no Sklearn estimator that implements feature selection based on correlation. So, we will do it on our own:
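The original listing is not shown here. The sketch below is one way to write such a helper. The function name `identify_correlated` and the toy columns are assumptions for illustration.

```python
import numpy as np
import pandas as pd


def identify_correlated(df, threshold):
    """Return names of columns to drop because of high pairwise correlation."""
    # Absolute correlations, so strong negative relationships count too.
    matrix = df.corr().abs()

    # True on the diagonal and above it; those cells get hidden.
    upper = np.triu(np.ones_like(matrix, dtype=bool))

    # Keep only the correlations below the diagonal (the rest become NaN).
    reduced = matrix.mask(upper)

    # A column is flagged if any remaining correlation exceeds the threshold.
    return [col for col in reduced.columns if (reduced[col] > threshold).any()]


# Toy usage: "b" is almost a copy of "a", "c" is independent noise.
rng = np.random.default_rng(0)
a = rng.normal(size=200)
toy = pd.DataFrame({
    "a": a,
    "b": a + 0.1 * rng.normal(size=200),
    "c": rng.normal(size=200),
})
to_drop = identify_correlated(toy, threshold=0.9)
print(to_drop)
```

Because only the lower triangle is inspected, each correlated pair flags a single column (here the one that appears first), so the other member of the pair stays in the data.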

This function is a shorthand that returns the names of columns that should be dropped based on a custom correlation threshold. Usually, the threshold will be over 0.8 to be safe.

In the function, we first create a correlation matrix using .corr(). Next, we create a boolean mask to only include correlations below the correlation matrix’s diagonal. We use this mask to subset the matrix. Finally, in a list comprehension, we find the names of features that should be dropped and return them.

There is a lot I didn’t explain about the code. Even though this function works well, I suggest reading my separate article on feature selection based on the correlation coefficient. There, I fully explain the concept of correlation and how it differs from causation. There is also a separate section on plotting the perfect correlation matrix as a heatmap and, of course, an explanation of the above function.

For our dataset, we will choose a threshold of 0.9:

The function tells us to drop 13 features:

Now, only 35 features are remaining.

Step III: Recursive Feature Elimination with Cross-Validation (RFECV)

Finally, we will choose the final set of features based on how they affect model performance. Most Sklearn models have either a .coef_ (linear models) or a .feature_importances_ (tree-based and ensemble models) attribute that shows the importance of each feature. For example, let’s fit the Linear Regression model to the current set of features and see the computed coefficients:
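A minimal sketch of inspecting the coefficients, assuming synthetic features named `f1` to `f4` (made up for illustration) that already share the same scale. Coefficients of features on different scales are not directly comparable.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

# Synthetic data: f1 and f2 matter, f3 barely matters, f4 not at all.
rng = np.random.default_rng(0)
X = pd.DataFrame(rng.normal(size=(300, 4)), columns=["f1", "f2", "f3", "f4"])
y = 5 * X["f1"] + 2 * X["f2"] + 0.01 * X["f3"] + rng.normal(0, 0.1, 300)

lr = LinearRegression().fit(X, y)

# One row per feature, sorted so the smallest absolute coefficients come first.
coefs = (
    pd.DataFrame({"feature": X.columns, "coef": lr.coef_})
    .assign(abs_coef=lambda d: d["coef"].abs())
    .sort_values("abs_coef")
)
print(coefs.head())
```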

The above DataFrame shows the features with the smallest coefficients. The smaller the weight or the coefficient of a feature is, the less it contributes to the model’s predictive power. With this idea in mind, Recursive Feature Elimination removes the least important features one by one and uses cross-validation to find the smallest set of features that still performs best.

Sklearn implements this technique under the RFECV class, which takes an arbitrary estimator and several other arguments:

After fitting the estimator to the data, we can get a boolean mask with True values encoding the features that should be kept. We can finally use it to subset the original data one last time:
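Both steps can be sketched as follows, assuming synthetic data with made-up column names and argument values chosen for illustration rather than taken from the original listing:

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import RFECV
from sklearn.linear_model import LinearRegression

# Synthetic data: only f0, f1 and f2 influence the target.
rng = np.random.default_rng(0)
n = 300
X = pd.DataFrame(rng.normal(size=(n, 6)), columns=[f"f{i}" for i in range(6)])
y = 3 * X["f0"] - 2 * X["f1"] + 1.5 * X["f2"] + rng.normal(0, 0.5, n)

# Drop one feature per round and score each feature count with 5-fold CV.
rfecv = RFECV(
    estimator=LinearRegression(),
    step=1,
    cv=5,
    scoring="r2",
    min_features_to_select=1,
)
rfecv.fit(X, y)

# support_ is the boolean mask: True for the features RFECV decided to keep.
X_selected = X.loc[:, rfecv.support_]
print(rfecv.n_features_, X_selected.columns.tolist())
```

The exact number of features kept depends on the cross-validation scores, so uninformative columns can occasionally survive. The informative ones should always be among those selected.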

After applying RFECV, we managed to discard 5 more features. Let’s evaluate a final GradientBoostingRegressor model on this feature-selected dataset and see its performance:
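As a rough sketch of this final check, assuming a synthetic stand-in for the feature-selected data (column names and coefficients are made up, so the score will not match the article’s):

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the data that survived all three selection steps.
rng = np.random.default_rng(0)
n = 400
X_selected = pd.DataFrame(rng.normal(size=(n, 3)), columns=["f0", "f1", "f2"])
y = (
    3 * X_selected["f0"]
    - 2 * X_selected["f1"]
    + 1.5 * X_selected["f2"]
    + rng.normal(0, 0.5, n)
)

X_train, X_test, y_train, y_test = train_test_split(
    X_selected, y, test_size=0.3, random_state=0
)

# Default hyperparameters; random_state only fixes reproducibility.
gbr = GradientBoostingRegressor(random_state=0).fit(X_train, y_train)

# R-squared on the held-out test set.
test_r2 = gbr.score(X_test, y_test)
print(f"test R-squared: {test_r2:.3f}")
```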

Even though we got a slight drop in performance, we managed to remove almost 70 features, reducing model complexity significantly.

In a separate article, I further discuss the .coef_ and .feature_importances_ attributes as well as additional details of what happens in each elimination round of RFE.

Summary

Feature selection should not be taken lightly. Besides reducing model complexity, it can even increase the performance of some algorithms because there are fewer distracting features in the dataset. It is also not wise to rely on a single method. Instead, approach the problem from different angles using various techniques.

Today, we saw how to apply feature selection to a dataset in three stages:

- Based on the properties of each feature using Variance Thresholding.

- Based on the relationships between features using Pairwise Correlation.

- Based on how features affect a model’s performance.

Using these techniques in succession should give you reliable results for any supervised problem you face.