This publish discusses the deficiencies of phrase embeddings and the way current approaches have tried to resolve them.

Desk of contents:

The word2vec technique based mostly on skip-gram with adverse sampling (Mikolov et al., 2013) was revealed in 2013 and had a big impression on the sphere, primarily by way of its accompanying software program package deal, which enabled environment friendly coaching of dense phrase representations and a simple integration into downstream fashions. In some respects, we have now come far since then: Phrase embeddings have established themselves as an integral a part of Pure Language Processing (NLP) fashions. In different points, we’d as effectively be in 2013 as we have now not discovered methods to pre-train phrase embeddings which have managed to supersede the unique word2vec.

This publish will deal with the deficiencies of phrase embeddings and the way current approaches have tried to resolve them. If not in any other case said, this publish discusses pre-trained phrase embeddings, i.e. phrase representations which were discovered on a big corpus utilizing word2vec and its variants. Pre-trained phrase embeddings are simplest if not hundreds of thousands of coaching examples can be found (and thus transferring information from a big unlabelled corpus is beneficial), which is true for many duties in NLP. For an introduction to phrase embeddings, seek advice from this weblog publish.

Subword-level embeddings

Phrase embeddings have been augmented with subword-level info for a lot of functions reminiscent of named entity recognition (Lample et al., 2016) , part-of-speech tagging (Plank et al., 2016) , dependency parsing (Ballesteros et al., 2015; Yu & Vu, 2017) , , and language modelling (Kim et al., 2016) . Most of those fashions make use of a CNN or a BiLSTM that takes as enter the characters of a phrase and outputs a character-based phrase illustration.

For incorporating character info into pre-trained embeddings, nonetheless, character n-grams options have been proven to be extra highly effective than composition capabilities over particular person characters (Wieting et al., 2016; Bojanowski et al., 2017) , . Character n-grams — by far not a novel characteristic for textual content categorization (Cavnar et al., 1994) — are significantly environment friendly and in addition type the idea of Fb’s fastText classifier (Joulin et al., 2016) . Embeddings discovered utilizing fastText are obtainable in 294 languages.

Subword items based mostly on byte-pair encoding have been discovered to be significantly helpful for machine translation (Sennrich et al., 2016) the place they’ve changed phrases as the usual enter items. They’re additionally helpful for duties with many unknown phrases reminiscent of entity typing (Heinzerling & Strube, 2017) , however haven’t been proven to be useful but for normal NLP duties, the place this isn’t a serious concern. Whereas they are often discovered simply, it’s tough to see their benefit over character-based representations for many duties (Vania & Lopez, 2017) .

One other alternative for utilizing pre-trained embeddings that combine character info is to leverage a state-of-the-art language mannequin (Jozefowicz et al., 2016) educated on a big in-domain corpus, e.g. the 1 Billion Phrase Benchmark (a pre-trained Tensorflow mannequin could be discovered right here). Whereas language modelling has been discovered to be helpful for various duties as auxiliary goal (Rei, 2017) , pre-trained language mannequin embeddings have additionally been used to reinforce phrase embeddings (Peters et al., 2017) . As we begin to higher perceive the right way to pre-train and initialize our fashions, pre-trained language mannequin embeddings are poised to change into simpler. They could even supersede word2vec because the go-to alternative for initializing phrase embeddings by advantage of getting change into extra expressive and simpler to coach on account of higher frameworks and extra computational sources during the last years.

OOV dealing with

One of many predominant issues of utilizing pre-trained phrase embeddings is that they’re unable to cope with out-of-vocabulary (OOV) phrases, i.e. phrases that haven’t been seen throughout coaching. Sometimes, such phrases are set to the UNK token and are assigned the identical vector, which is an ineffective alternative if the variety of OOV phrases is giant. Subword-level embeddings as mentioned within the final part are one technique to mitigate this challenge. One other approach, which is efficient for studying comprehension (Dhingra et al., 2017) is to assign OOV phrases their pre-trained phrase embedding, if one is on the market.

Not too long ago, completely different approaches have been proposed for producing embeddings for OOV phrases on-the-fly. Herbelot and Baroni (2017) initialize the embedding of OOV phrases because the sum of their context phrases after which quickly refine solely the OOV embedding with a excessive studying fee. Their strategy is profitable for a dataset that explicitly requires to mannequin nonce phrases, however it’s unclear if it may be scaled as much as work reliably for extra typical NLP duties. One other attention-grabbing strategy for producing OOV phrase embeddings is to coach a character-based mannequin to explicitly re-create pre-trained embeddings (Pinter et al., 2017) . That is significantly helpful in low-resource situations, the place a big corpus is inaccessible and solely pre-trained embeddings can be found.

Analysis

Analysis of pre-trained embeddings has been a contentious challenge since their inception because the generally used analysis through phrase similarity or analogy datasets has been proven to solely correlate weakly with downstream efficiency (Tsvetkov et al., 2015) . The RepEval Workshop at ACL 2016 solely centered on higher methods to guage pre-trained embeddings. Because it stands, the consensus appears to be that — whereas pre-trained embeddings could be evaluated on intrinsic duties reminiscent of phrase similarity for comparability in opposition to earlier approaches — the easiest way to guage them is extrinsic analysis on downstream duties.

Multi-sense embeddings

A generally cited criticism of phrase embeddings is that they’re unable to seize polysemy. A tutorial at ACL 2016 outlined the work lately that centered on studying separate embeddings for a number of senses of a phrase (Neelakantan et al., 2014; Iacobacci et al., 2015; Pilehvar & Collier, 2016) , , . Nevertheless, most present approaches for studying multi-sense embeddings solely consider on phrase similarity. Pilehvar et al. (2017) are one of many first to indicate outcomes on matter categorization as a downstream job; whereas multi-sense embeddings outperform randomly initialized phrase embeddings of their experiments, they’re outperformed by pre-trained phrase embeddings.

Given the stellar outcomes Neural Machine Translation methods utilizing phrase embeddings have achieved lately (Johnson et al., 2016) , it appears that evidently the present technology of fashions is expressive sufficient to contextualize and disambiguate phrases in context with out having to depend on a devoted disambiguation pipeline or multi-sense embeddings. Nevertheless, we nonetheless want higher methods to know whether or not our fashions are literally capable of sufficiently disambiguate phrases and the right way to enhance this disambiguation behaviour if obligatory.

Past phrases as factors

Whereas we’d not want separate embeddings for each sense of every phrase for good downstream efficiency, lowering every phrase to some extent in a vector house is unarguably overly simplistic and causes us to overlook out on nuances that could be helpful for downstream duties. An attention-grabbing route is thus to make use of different representations which can be higher capable of seize these aspects. Vilnis & McCallum (2015) suggest to mannequin every phrase as a chance distribution reasonably than a degree vector, which permits us to signify chance mass and uncertainty throughout sure dimensions. Athiwaratkun & Wilson (2017) prolong this strategy to a multimodal distribution that enables to cope with polysemy, entailment, uncertainty, and enhances interpretability.

Slightly than altering the illustration, the embedding house can be modified to raised signify sure options. Nickel and Kiela (2017) , as an example, embed phrases in a hyperbolic house, to study hierarchical representations. Discovering different methods to signify phrases that incorporate linguistic assumptions or higher cope with the traits of downstream duties is a compelling analysis route.

Phrases and multi-word expressions

Along with not with the ability to seize a number of senses of phrases, phrase embeddings additionally fail to seize the meanings of phrases and multi-word expressions, which is usually a perform of the which means of their constituent phrases, or have a completely new which means. Phrase embeddings have been proposed already within the unique word2vec paper (Mikolov et al., 2013) and there was constant work on studying higher compositional and non-compositional phrase embeddings (Yu & Dredze, 2015; Hashimoto & Tsuruoka, 2016) , . Nevertheless, just like multi-sense embeddings, explicitly modelling phrases has up to now not proven vital enhancements on downstream duties that will justify the extra complexity. Analogously, a greater understanding of how phrases are modelled in neural networks would pave the best way to strategies that increase the capabilities of our fashions to seize compositionality and non-compositionality of expressions.

Bias

Bias in our fashions is turning into a bigger challenge and we’re solely beginning to perceive its implications for coaching and evaluating our fashions. Even phrase embeddings educated on Google Information articles exhibit feminine/male gender stereotypes to a disturbing extent (Bolukbasi et al., 2016) . Understanding what different biases phrase embeddings seize and discovering higher methods to take away theses biases will probably be key to creating truthful algorithms for pure language processing.

Temporal dimension

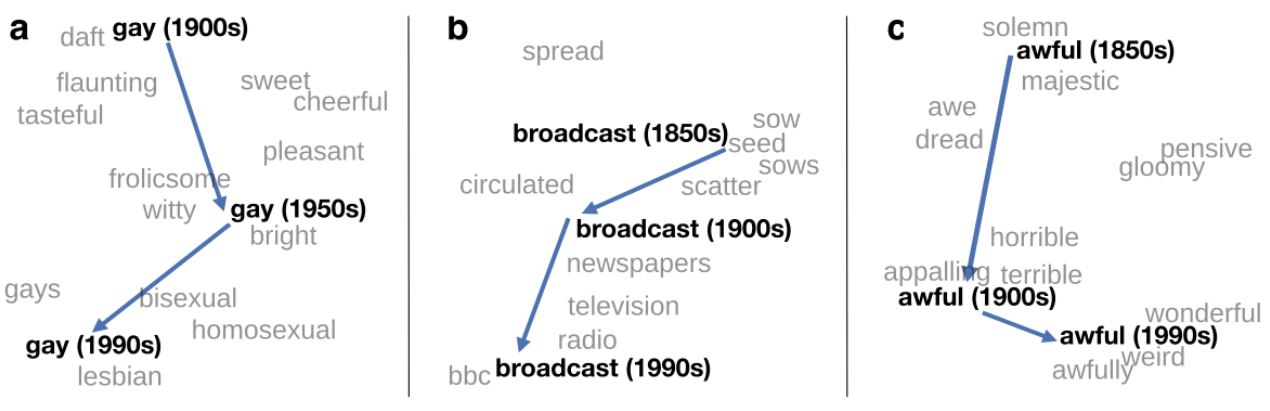

Phrases are a mirror of the zeitgeist and their meanings are topic to steady change; present representations of phrases may differ considerably from the best way these phrases the place used up to now and will probably be used sooner or later. An attention-grabbing route is thus to keep in mind the temporal dimension and the diachronic nature of phrases. This could permits us to disclose legal guidelines of semantic change (Hamilton et al., 2016; Bamler & Mandt, 2017; Dubossarsky et al., 2017) , , , to mannequin temporal phrase analogy or relatedness (Szymanski, 2017; Rosin et al., 2017) , , or to seize the dynamics of semantic relations (Kutuzov et al., 2017) .

Lack of theoretical understanding

In addition to the perception that word2vec with skip-gram adverse sampling implicitly factorizes a PMI matrix (Levy & Goldberg, 2014) , there was comparatively little work on gaining a greater theoretical understanding of the phrase embedding house and its properties, e.g. that summation captures analogy relations. Arora et al. (2016) suggest a brand new generative mannequin for phrase embeddings, which treats corpus technology as a random stroll of a discourse vector and establishes some theoretical motivations concerning the analogy behaviour. Gittens et al. (2017) present a extra thorough theoretical justification of additive compositionality and present that skip-gram phrase vectors are optimum in an information-theoretic sense. Mimno & Thompson (2017) moreover reveal an attention-grabbing relation between phrase embeddings and the embeddings of context phrases, i.e. that they don’t seem to be evenly dispersed throughout the vector house, however occupy a slim cone that’s diametrically reverse to the context phrase embeddings. Regardless of these further insights, our understanding concerning the situation and properties of phrase embeddings continues to be missing and extra theoretical work is critical.

Process and domain-specific embeddings

One of many main downsides of utilizing pre-trained embeddings is that the information information used for coaching them is commonly very completely different from the information on which we want to use them. Most often, nonetheless, we do not need entry to hundreds of thousands of unlabelled paperwork in our goal area that will permit for pre-training good embeddings from scratch. We’d thus like to have the ability to adapt embeddings pre-trained on giant information corpora, in order that they seize the traits of our goal area, however nonetheless retain all related present information. Lu & Zheng (2017) proposed a regularized skip-gram mannequin for studying such cross-domain embeddings. Sooner or later, we’ll want even higher methods to adapt pre-trained embeddings to new domains or to include the information from a number of related domains.

Slightly than adapting to a brand new area, we will additionally use present information encoded in semantic lexicons to reinforce pre-trained embeddings with info that’s related for our job. An efficient technique to inject such relations into the embedding house is retro-fitting (Faruqui et al., 2015) , which has been expanded to different sources reminiscent of ConceptNet (Speer et al., 2017) and prolonged with an clever collection of optimistic and adverse examples (Mrkšić et al., 2017) . Injecting further prior information into phrase embeddings reminiscent of monotonicity (You et al., 2017) , phrase similarity (Niebler et al., 2017) , task-related grading or depth, or logical relations is a crucial analysis route that can permit to make our fashions extra sturdy.

Phrase embeddings are helpful for all kinds of functions past NLP reminiscent of info retrieval, suggestion, and hyperlink prediction in information bases, which all have their very own task-specific approaches. Wu et al. (2017) suggest a general-purpose mannequin that’s appropriate with many of those functions and might function a robust baseline.

Switch studying

Slightly than adapting phrase embeddings to any specific job, current work has sought to create contextualized phrase vectors by augmenting phrase embeddings with embeddings based mostly on the hidden states of fashions pre-trained for sure duties, reminiscent of machine translation (McCann et al., 2017) or language modelling (Peters et al., 2018) . Along with fine-tuning pre-trained fashions (Howard and Ruder, 2018) , this is without doubt one of the most promising analysis instructions.

Embeddings for a number of languages

As NLP fashions are being more and more employed and evaluated on a number of languages, creating multilingual phrase embeddings is turning into a extra necessary challenge and has acquired elevated curiosity over current years. A promising route is to develop strategies that study cross-lingual representations with as few parallel information as attainable, in order that they are often simply utilized to study representations even for low-resource languages. For a current survey on this space, seek advice from Ruder et al. (2017) .

Embeddings based mostly on different contexts

Phrase embeddings are sometimes discovered solely based mostly on the window of surrounding context phrases. Levy & Goldberg (2014) have proven that dependency buildings can be utilized as context to seize extra syntactic phrase relations; Köhn (2015) finds that such dependency-based embeddings carry out greatest for a specific multilingual analysis technique that clusters embeddings alongside completely different syntactic options.

Melamud et al. (2016) observe that completely different context varieties work effectively for various downstream duties and that easy concatenation of phrase embeddings discovered with completely different context varieties can yield additional efficiency good points. Given the current success of incorporating graph buildings into neural fashions for various duties as — as an example — exhibited by graph-convolutional neural networks (Bastings et al., 2017; Marcheggiani & Titov, 2017) , we will conjecture that incorporating such buildings for studying embeddings for downstream duties might also be useful.

In addition to deciding on context phrases otherwise, further context might also be utilized in different methods: Tissier et al. (2017) incorporate co-occurrence info from dictionary definitions into the adverse sampling course of to maneuver associated works nearer collectively and forestall them from getting used as adverse samples. We will consider topical or relatedness info derived from different contexts reminiscent of article headlines or Wikipedia intro paragraphs that would equally be used to make the representations extra relevant to a specific downstream job.

Conclusion

It’s good to see that as a group we’re progressing from making use of phrase embeddings to each attainable drawback to gaining a extra principled, nuanced, and sensible understanding of them. This publish was meant to spotlight among the present traits and future instructions for studying phrase embeddings that I discovered most compelling. I’ve undoubtedly failed to say many different areas which can be equally necessary and noteworthy. Please let me know within the feedback beneath what I missed, the place I made a mistake or misrepresented a technique, or simply which facet of phrase embeddings you discover significantly thrilling or unexplored.

Quotation

For attribution in tutorial contexts or books, please cite this work as:

Sebastian Ruder, "Phrase embeddings in 2017: Traits and future instructions". http://ruder.io/word-embeddings-2017/, 2017.

BibTeX quotation:

@misc{ruder2017wordembeddings2017,

writer = {Ruder, Sebastian},

title = {{Phrase embeddings in 2017: Traits and future instructions}},

12 months = {2017},

howpublished = {url{http://ruder.io/word-embeddings-2017/}},

}

Hacker Information

Consult with the dialogue on Hacker Information for some extra insights on phrase embeddings.

Different weblog posts on phrase embeddings

If you wish to study extra about phrase embeddings, these different weblog posts on phrase embeddings are additionally obtainable:

References

Cowl picture credit score: Hamilton et al. (2016)