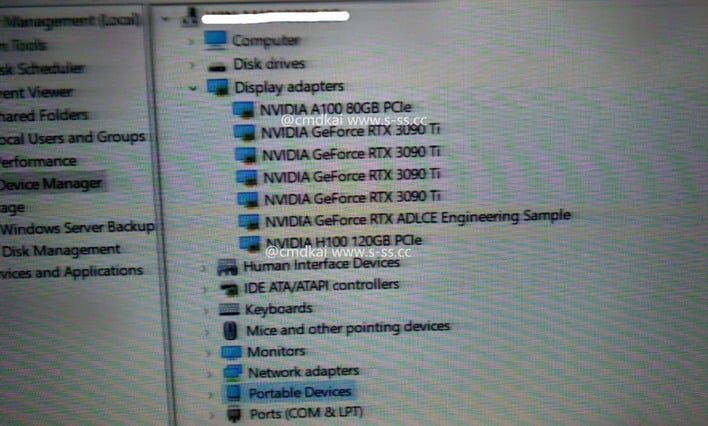

This screenshot was posted to s-ss.cc by the blog's author, Kaixin (@cmdkai on Twitter). The author says it came from a "mysterious friend," and it is quite a striking screenshot. It appears to show a Windows Device Manager window for a system with at least seven NVIDIA GPUs: four GeForce RTX 3090 Ti cards, an 80GB A100 accelerator, an "RTX ADLCE Engineering Sample", and finally, an "NVIDIA H100 120GB PCIe".

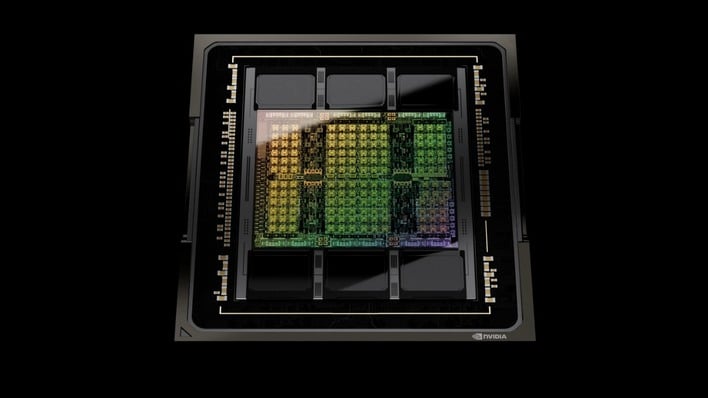

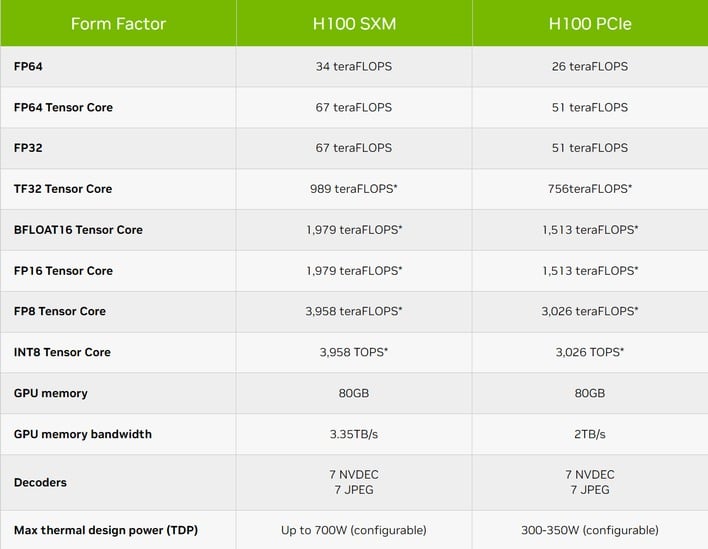

The more interesting part is the "H100 120GB" card. NVIDIA did announce Hopper H100 as a PCI Express add-in card, but that version is considerably slower than the SXM mezzanine version. Neither version was announced with 120GB of onboard memory; both carry 80GB. Hopper comes as a multi-chip module (MCM), with the Hopper GPU surrounded by five stacks of HBM3 memory (plus one dummy stack for physical stability).

A 120GB card would require each stack to hold 24GB rather than the 16GB used on the announced parts. That is not impossible: when Hynix announced its HBM3 memory, its flagship product had 24GB of capacity and transfer rates up to 6.4 Gbps. Hynix's RAM is the memory NVIDIA is using on Hopper, so it is entirely possible that a product with 50% more onboard memory could exist.
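The capacity arithmetic is easy to check. This minimal sketch assumes, as described above, five active HBM3 stacks (the dummy stack contributes no usable memory) and splits each card's total capacity evenly across them; the helper name `per_stack_gb` is hypothetical, chosen for illustration.

```python
# Per-stack HBM3 capacity implied by a card's total memory.
# Assumption: five active HBM3 stacks share the capacity evenly;
# the sixth, dummy stack holds no usable memory.
ACTIVE_STACKS = 5


def per_stack_gb(total_gb: int, stacks: int = ACTIVE_STACKS) -> float:
    """Capacity (GB) each active stack must provide to reach total_gb."""
    return total_gb / stacks


announced = per_stack_gb(80)   # announced 80GB parts
leaked = per_stack_gb(120)     # rumored 120GB card
increase = (120 - 80) / 80     # fractional capacity increase

print(f"80GB card: {announced:.0f} GB/stack")   # 80GB card: 16 GB/stack
print(f"120GB card: {leaked:.0f} GB/stack")     # 120GB card: 24 GB/stack
print(f"Capacity increase: {increase:.0%}")     # Capacity increase: 50%
```

The 24GB-per-stack result lines up with the capacity of Hynix's flagship HBM3 part.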

Kaixin's "mysterious friend" also claims that this H100 120GB PCIe card actually uses the same version of the H100 chip found on the SXM mezzanine H100 cards. He lists some specs: 16,896 CUDA cores and 3TB/s of memory bandwidth, both matching the specs of the faster SXM H100 card.
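The claimed 3TB/s can be sanity-checked against Hynix's 6.4 Gbps rating in the same way. This is a rough sketch under stated assumptions: each HBM3 stack exposes a 1024-bit interface, all five active stacks contribute bandwidth, and "3TB/s" means 3,000 GB/s. The function names are hypothetical.

```python
# Peak HBM bandwidth (GB/s) = stacks * interface width (bits) * pin rate (Gbps) / 8.
# Assumptions: 1024-bit interface per HBM3 stack, five active stacks,
# and decimal units (3 TB/s == 3000 GB/s).
BUS_BITS_PER_STACK = 1024
ACTIVE_STACKS = 5


def peak_bandwidth_gbs(pin_rate_gbps: float, stacks: int = ACTIVE_STACKS) -> float:
    """Peak memory bandwidth in GB/s at the given per-pin data rate."""
    return stacks * BUS_BITS_PER_STACK * pin_rate_gbps / 8


def implied_pin_rate_gbps(bandwidth_gbs: float, stacks: int = ACTIVE_STACKS) -> float:
    """Per-pin data rate (Gbps) needed to reach bandwidth_gbs."""
    return bandwidth_gbs * 8 / (stacks * BUS_BITS_PER_STACK)


print(f"Ceiling at 6.4 Gbps: {peak_bandwidth_gbs(6.4):.0f} GB/s")
print(f"Pin rate needed for 3 TB/s: {implied_pin_rate_gbps(3000):.4f} Gbps")
```

Under these assumptions, five stacks at 6.4 Gbps top out near 4.1TB/s, and 3TB/s needs only about 4.7 Gbps per pin, so the claimed bandwidth sits well within what Hynix rates its parts for.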

Now, that GPU is designed for enterprise enclosures with high airflow, and as a result it carries an extremely high 700-watt TDP. We doubt this PCIe card has the same power budget, so its performance still wouldn't match. It does supposedly hit about 60 TFLOPS in single precision, though. That falls between NVIDIA's specs for the H100 SXM and the H100 PCIe, and it makes sense if this is a slightly wider GPU running at the same clocks as the standard PCIe card.
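To see what clock the leaked numbers imply, peak single-precision throughput can be estimated as CUDA cores × 2 FLOPs per clock × clock rate. This sketch assumes each CUDA core completes one FP32 fused multiply-add (two FLOPs) per cycle, the usual convention behind peak FP32 figures. It says nothing about sustained performance under a PCIe card's power limit.

```python
# Peak FP32 TFLOPS = cores * 2 FLOPs per clock (one FMA) * clock (GHz) / 1000.
# Assumption: each CUDA core retires one FP32 fused multiply-add per cycle.
CUDA_CORES = 16896        # core count claimed for the leaked card
CLAIMED_TFLOPS = 60.0     # claimed single-precision throughput


def peak_tflops(cores: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS for a given core count and clock."""
    return cores * 2 * clock_ghz / 1000


def implied_clock_ghz(tflops: float, cores: int) -> float:
    """Clock (GHz) needed for `cores` to reach `tflops` at peak."""
    return tflops * 1000 / (cores * 2)


clock = implied_clock_ghz(CLAIMED_TFLOPS, CUDA_CORES)
print(f"Implied clock: {clock:.3f} GHz")  # Implied clock: 1.776 GHz
```

Under that assumption, 16,896 cores reaching 60 TFLOPS implies a clock of roughly 1.78 GHz, which readers can compare against NVIDIA's published clocks for the PCIe and SXM parts.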

Of course, we have no way to verify the leak's veracity; this could simply be an attention-seeking Photoshop. There may be merit to it, though. NVIDIA's enterprise customers always want more memory capacity, and a 120GB H100 GPU would surely be just what those customers are looking for. NVIDIA's enterprise customers are supposed to start selling systems with H100s any time now; we'll see whether one of them starts shipping 120GB GPUs soon.