This post discusses highlights of NAACL-HLT 2018.

This post originally appeared on the AYLIEN blog.

I attended NAACL-HLT 2018 in New Orleans last week. I didn’t manage to catch as many talks and posters this time around (there were just too many inspiring people to talk to!), so my highlights and the trends I observed mainly focus on invited talks and workshops.

In particular, my highlights concentrate on three topics, which were prominent throughout the conference: Generalization, the Test-of-Time awards, and Dialogue Systems. For more information about other topics, you can check out the conference handbook and the proceedings.

First of all, there were four quotes from the conference that particularly resonated with me (some of them are paraphrased):

People worked on MT before the BLEU score. – Kevin Knight

It’s natural to work on tasks where evaluation is easy. Instead, we should encourage more people to tackle hard problems that are not easy to evaluate. These are often the most rewarding to work on.

BLEU is an understudy. It was never meant to replace human judgement and never expected to last this long. – Kishore Papineni, co-creator of BLEU, the most commonly used metric for machine translation.

No approach is perfect. Even the authors of landmark papers were aware that their methods had flaws. The best we can do is provide a fair evaluation of our technique.

We decided to sample an equal number of positive and negative reviews. Was that a good idea? – Bo Pang, first author of one of the first papers on sentiment analysis (7k+ citations).

In addition to being aware of the flaws of our method, we should be explicit about the assumptions we make. Future work can then either build upon them or discard them if they prove unhelpful or turn out to be false.

I pose the following challenge to the community: we should evaluate on out-of-distribution data or on a new task. – Percy Liang

We never know how well our model truly generalizes if we only test it on data from the same distribution. In order to develop models that can be applied to the real world, we need to evaluate on out-of-distribution data or on a new task. Percy Liang’s quote ties into one of the topics that received increasing attention at the conference: how can we train models that are less brittle and that generalize better?

Generalization

Over the last years, much of the research within the NLP community focused on improving LSTM-based models on benchmark tasks. At NAACL, it seemed that more and more people were thinking about how to get models to generalize beyond the conditions seen during training. This reflects a similar sentiment in the Deep Learning community in general (a paper on generalization won the best paper award at ICLR 2017).

One aspect is generalizing from few examples, which is difficult with the current generation of neural network-based methods. Charles Yang, professor of Linguistics, Computer Science and Psychology at the University of Pennsylvania, put this in a cognitive science context.

Machine learning and NLP researchers in the neural network era frequently like to motivate their work by referencing the remarkable generalization ability of young children. One piece of information that is often omitted, however, is that generalization in young children is not without its errors either, because it requires learning a rule and accounting for exceptions. For instance, when learning to count, young children still frequently make mistakes. They have to balance rule-based generalization (for regular numbers such as sixteen, seventeen, eighteen, etc.) with memorizing exceptions (numbers such as fifteen, twenty, thirty, etc.).

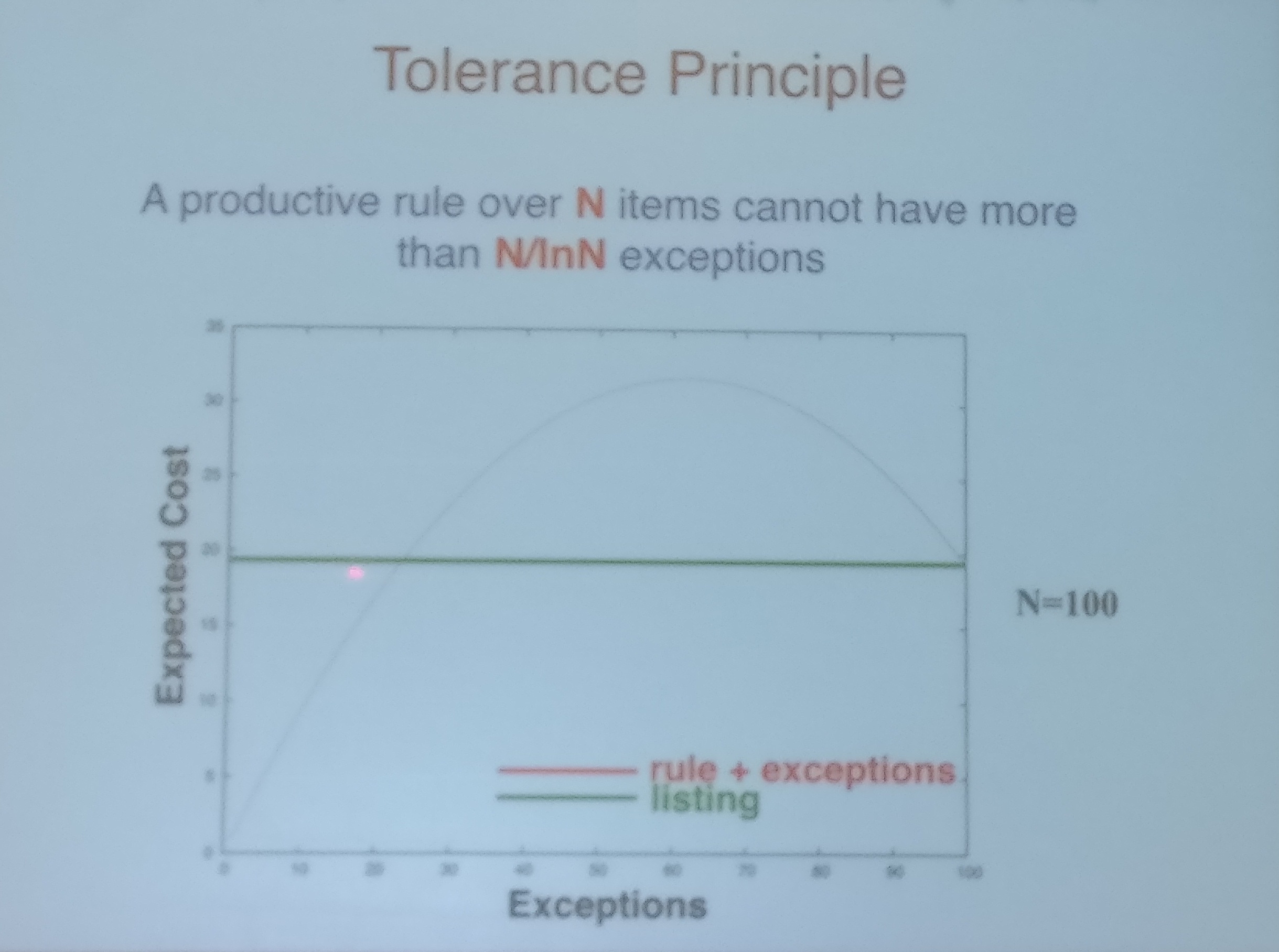

However, once a child can flawlessly count to 72, it can generalize to any new number. This magic number, 72, is given by the so-called tolerance principle. It states that a generalization rule is productive only if it has at most N/ln(N) exceptions, where N is the total number of examples, as can be seen in the Figure below. For counting, 72/ln(72) ≈ 17, which is exactly the number of exceptions up to 72.

Fans of The Hitchhiker’s Guide to the Galaxy, however, need not be disappointed: in Chinese, the magic number is 42. Chinese has only 11 exceptions. As 42/ln(42) ≈ 11, Chinese children typically only need to learn to count up to 42 in order to generalize, which explains why Chinese children usually learn to count faster.
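The arithmetic behind both magic numbers can be checked in a few lines of Python. This is a minimal sketch. The exception counts (17 for English, 11 for Chinese) are the figures quoted in the talk, not values the code derives.

```python
import math

def tolerance_threshold(n: int) -> float:
    """Tolerance principle: a rule over n items stays productive
    as long as it has at most n / ln(n) exceptions."""
    return n / math.log(n)

# (language, count needed to generalize N, exceptions up to N), as quoted in the talk
examples = [("English", 72, 17), ("Chinese", 42, 11)]

for language, n, exceptions in examples:
    threshold = tolerance_threshold(n)
    print(f"{language}: {n}/ln({n}) = {threshold:.2f} "
          f"(rounds to {round(threshold)}); exceptions = {exceptions}")
```

The unrounded English threshold is about 16.8, so the match with 17 exceptions relies on the rounding used in the text.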

It is also interesting to note that even though young children can count up to a certain number, they can’t tell you, for instance, which number is larger than 24. Only after they have learned the rule can they actually apply it productively.

The tolerance principle implies that the fewer examples a rule covers, the larger the share of exceptions it can absorb. Children can productively learn language from few examples. This suggests that for few-shot learning (at least in the cognitive process of language acquisition), big data may actually be harmful. Insights from cognitive science may thus help us develop models that generalize better.
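Dividing the threshold N/ln(N) by N makes this implication explicit. The share of items that may be exceptions is 1/ln(N), which shrinks as N grows. A small sketch:

```python
import math

def tolerated_fraction(n: int) -> float:
    """Share of n items that may be exceptions: (n / ln n) / n = 1 / ln n."""
    return 1.0 / math.log(n)

for n in [10, 100, 1_000, 10_000]:
    print(f"N = {n:>6}: up to {tolerated_fraction(n):.1%} of items may be exceptions")
```

The output ranges from roughly 43% at N = 10 down to about 11% at N = 10,000.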

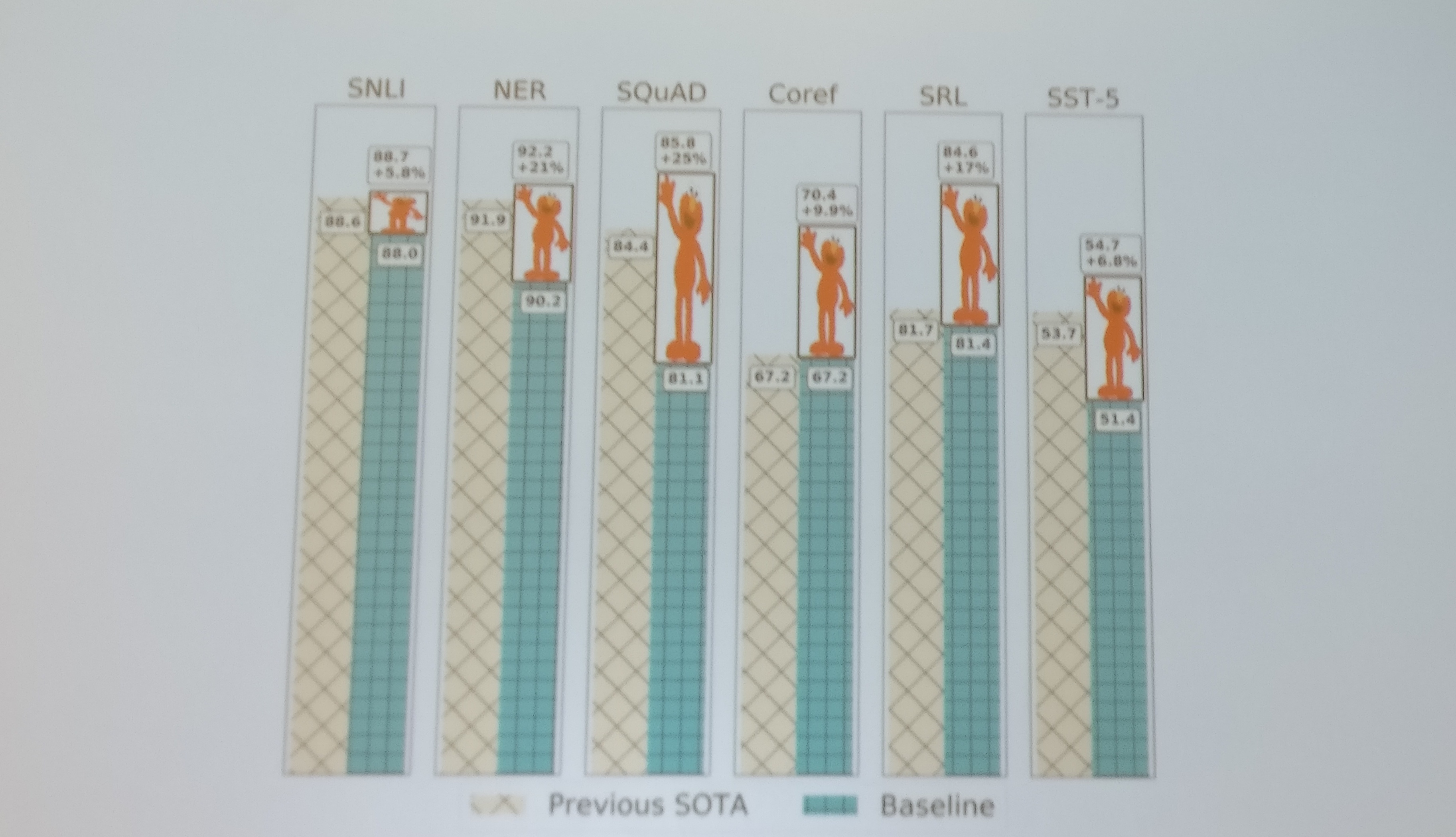

The choice of the best paper of the conference, Deep contextualized word representations, also demonstrates an increasing interest in generalization. Embeddings from Language Models (ELMo) showed significant improvements over the state of the art on a wide range of tasks, as can be seen below. This, together with better ways to fine-tune pre-trained language models, reveals the potential of transfer learning for NLP.
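ELMo represents each token as a task-specific weighted sum of the biLM’s layers, ELMo_k = γ Σ_j s_j h_{k,j}. Here the s_j are softmax-normalized weights and γ is a scalar, both learned for the downstream task. The pure-Python sketch below shows only this mixing step. The layer vectors are toy values, not real biLM outputs.

```python
import math
from typing import List

Vector = List[float]

def softmax(weights: List[float]) -> List[float]:
    m = max(weights)  # subtract the max for numerical stability
    exps = [math.exp(w - m) for w in weights]
    total = sum(exps)
    return [e / total for e in exps]

def elmo_mix(layers: List[Vector], weights: List[float], gamma: float) -> Vector:
    """Collapse one token's biLM layer vectors into a single vector:
    gamma * sum_j softmax(weights)_j * layers[j]."""
    s = softmax(weights)
    dim = len(layers[0])
    return [gamma * sum(s_j * layer[d] for s_j, layer in zip(s, layers)) for d in range(dim)]

# Toy token: 3 layers (token layer + 2 biLSTM layers), 4-dimensional vectors.
layers = [[1.0, 0.0, 0.0, 0.0],
          [0.0, 1.0, 0.0, 0.0],
          [0.0, 0.0, 1.0, 0.0]]
print(elmo_mix(layers, weights=[0.0, 0.0, 0.0], gamma=1.0))  # equal mix of all layers
print(elmo_mix(layers, weights=[0.0, 0.0, 5.0], gamma=1.0))  # dominated by the top layer
```

Because the weights are learned per task, different downstream tasks can draw on different biLM layers.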

Generalization was also the topic of the Workshop on New Forms of Generalization in Deep Learning and Natural Language Processing. The workshop sought to analyze the failings of brittle models and to propose new evaluation methods and new models that enable better generalization. Throughout the workshop, the room (seen below) was packed most of the time, a testament both to the prestige of the speakers and to the community’s interest in the topic.

In the first talk of the workshop, Yejin Choi argued that natural language understanding (NLU) does not generalize to natural language generation (NLG). While pre-Deep Learning NLG models often started with NLU output, post-DL NLG seems less dependent on NLU. However, current neural NLG depends heavily on language models and can be brittle; in many cases, template-based baselines actually work better.

Despite advances in NLG, generating a coherent paragraph still does not work well. Models end up producing generic, contentless, bland text full of repetitions and contradictions. But if we feed our models natural language input, why do they produce unnatural language output?

Yejin identified two limitations of language models in this context. First, language models are passive learners: in the real world, one can’t learn to write just by reading, and similarly, she argued, even RNNs need to “practice” writing. Second, language models are surface learners: they need “world” models and need to be sensitive to the “latent process” behind language. In reality, people don’t write to maximize the probability of the next token, but rather seek to fulfill certain communicative goals.

To address this, Yejin and collaborators proposed in an upcoming ACL 2018 paper to augment the generator with multiple discriminative models that grade the output along different dimensions inspired by Grice’s maxims.
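One way to combine a generator with such discriminators is to rerank candidate continuations. Each candidate is scored by its language-model log-probability plus a weighted sum of discriminator scores. The sketch below illustrates only that idea and is not the paper’s implementation. The two scoring functions are hypothetical, hand-written stand-ins for learned discriminators, and the weights and log-probabilities are made up.

```python
from typing import Callable, List, Tuple

Scorer = Callable[[str, str], float]

def repetition_score(context: str, candidate: str) -> float:
    """Hypothetical stand-in for a learned discriminator: 0 when no word
    repeats, increasingly negative with more repetition (cf. quantity/manner)."""
    words = candidate.lower().split()
    return len(set(words)) / len(words) - 1.0 if words else 0.0

def relevance_score(context: str, candidate: str) -> float:
    """Hypothetical stand-in: share of candidate word types that also occur
    in the context (cf. the maxim of relation)."""
    ctx, cand = set(context.lower().split()), set(candidate.lower().split())
    return len(ctx & cand) / len(cand) if cand else 0.0

def rerank(context: str, candidates: List[Tuple[str, float]],
           scorers: List[Scorer], weights: List[float]) -> List[Tuple[str, float]]:
    """Score each (text, lm_log_prob) as lm_log_prob + sum_k weight_k * scorer_k."""
    rescored = [
        (text, lm_logprob + sum(w * s(context, text) for s, w in zip(scorers, weights)))
        for text, lm_logprob in candidates
    ]
    return sorted(rescored, key=lambda pair: pair[1], reverse=True)

context = "the dog chased the ball in the park"
candidates = [("it was a good day it was a good day", -5.0),   # likelier but repetitive
              ("the dog brought the ball back", -6.0)]         # less likely but on topic
print(rerank(context, candidates, [repetition_score, relevance_score], [2.0, 2.0])[0][0])
```

With both weights at 2.0, the on-topic, less repetitive candidate overtakes the one the language model alone prefers. With weights of 0, the ranking reverts to the language model’s.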

Yejin also sought to explain why there are significant performance gaps between different NLU tasks such as machine translation and dialogue. For Type 1, or shallow, NLU tasks, there is a strong alignment between input and output, and models can often match surface patterns. For Type 2, or deep, NLU tasks, the alignment between input and output is weaker. To perform well, a model needs to be able to abstract and reason, and it requires certain types of knowledge, especially commonsense knowledge. Commonsense knowledge in particular has somewhat fallen out of favour: past approaches, mostly proposed in the 80s, did not have access to much computing power and were mostly developed by non-NLP people. Overall, NLU traditionally focuses on understanding only “natural” language, while NLG also requires understanding machine language, which may be unnatural.

Devi Parikh discussed generalization “opportunities” in visual question answering (VQA) and illustrated successes and failures of VQA models. In particular, VQA models are not very good at generalizing to novel instances. The distance of a test image from its k nearest neighbours seen during training can predict the success or failure of a model with about 67% accuracy.
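The nearest-neighbour idea can be sketched as follows. This is a hypothetical rule for illustration only. The 2-D feature vectors, k, and the distance threshold are made up, and the sketch does not reproduce the 67% figure from the talk.

```python
import math
from typing import List, Sequence

def mean_knn_distance(query: Sequence[float], train: List[Sequence[float]], k: int) -> float:
    """Average Euclidean distance from query to its k nearest training points."""
    distances = sorted(math.dist(query, x) for x in train)
    return sum(distances[:k]) / k

def likely_to_fail(query: Sequence[float], train: List[Sequence[float]],
                   k: int = 3, threshold: float = 1.0) -> bool:
    """Hypothetical rule: flag test items that lie far from the training data."""
    return mean_knn_distance(query, train, k) > threshold

# Toy 2-D "image features"; a real system would use learned image embeddings.
train_features = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (0.3, 0.3)]
print(likely_to_fail((0.15, 0.15), train_features))  # close to the training data
print(likely_to_fail((3.0, 3.0), train_features))    # far from the training data
```

In practice, k and the threshold would be fit on held-out data where the model’s successes and failures are known.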

Devi also showed that in many cases, VQA models do not even consider the entire question: in 50% of cases, only half the question is read. Certain prefixes in particular demonstrate the power of language priors. If the question begins with “Is there a clock…?”, the answer is “yes” 98% of the time; if the question begins with “Is the man wearing glasses…?”, the answer is “yes” 94% of the time. To counteract these biases, Devi and her collaborators introduced a new dataset of complementary scenes, which are very similar but differ in their answers. They also proposed a new setting for VQA in which, for every question type, the train and test sets have different prior distributions of answers.
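A baseline that ignores the image entirely shows how far language priors alone can go. It also shows why a test set with a different answer prior exposes such a baseline. The toy questions and answers below are invented for illustration; only the ideas of prefix priors and shifted priors come from the talk.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

def prefix(question: str, n_words: int = 4) -> str:
    return " ".join(question.lower().rstrip("?").split()[:n_words])

def fit_prior(train: List[Tuple[str, str]], n_words: int = 4) -> Dict[str, str]:
    """Map each question prefix to its most frequent training answer.
    The image is never used: this is a pure language-prior baseline."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for question, answer in train:
        counts[prefix(question, n_words)][answer] += 1
    return {p: c.most_common(1)[0][0] for p, c in counts.items()}

def accuracy(prior: Dict[str, str], data: List[Tuple[str, str]], n_words: int = 4) -> float:
    hits = sum(prior.get(prefix(q, n_words)) == a for q, a in data)
    return hits / len(data)

train_qa = [("Is there a clock on the wall?", "yes"),
            ("Is there a clock in the room?", "yes"),
            ("Is there a clock on the tower?", "no"),
            ("Is the man wearing glasses?", "yes")]
# Test split whose answer prior for the same question type is shifted.
shifted_test = [("Is there a clock on the shelf?", "no"),
                ("Is there a clock near the bed?", "no")]

prior = fit_prior(train_qa)
print(accuracy(prior, train_qa), accuracy(prior, shifted_test))
```

The baseline scores 0.75 on its own training questions but 0.0 once the answer prior shifts.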

The final discussion with senior panelists (seen below) was arguably the highlight of the workshop; a summary can be found here. The main takeaways are that we need to develop models with inductive biases, and that we need to do a better job of educating people on how to design experiments and identify biases in datasets.

Test-of-time awards

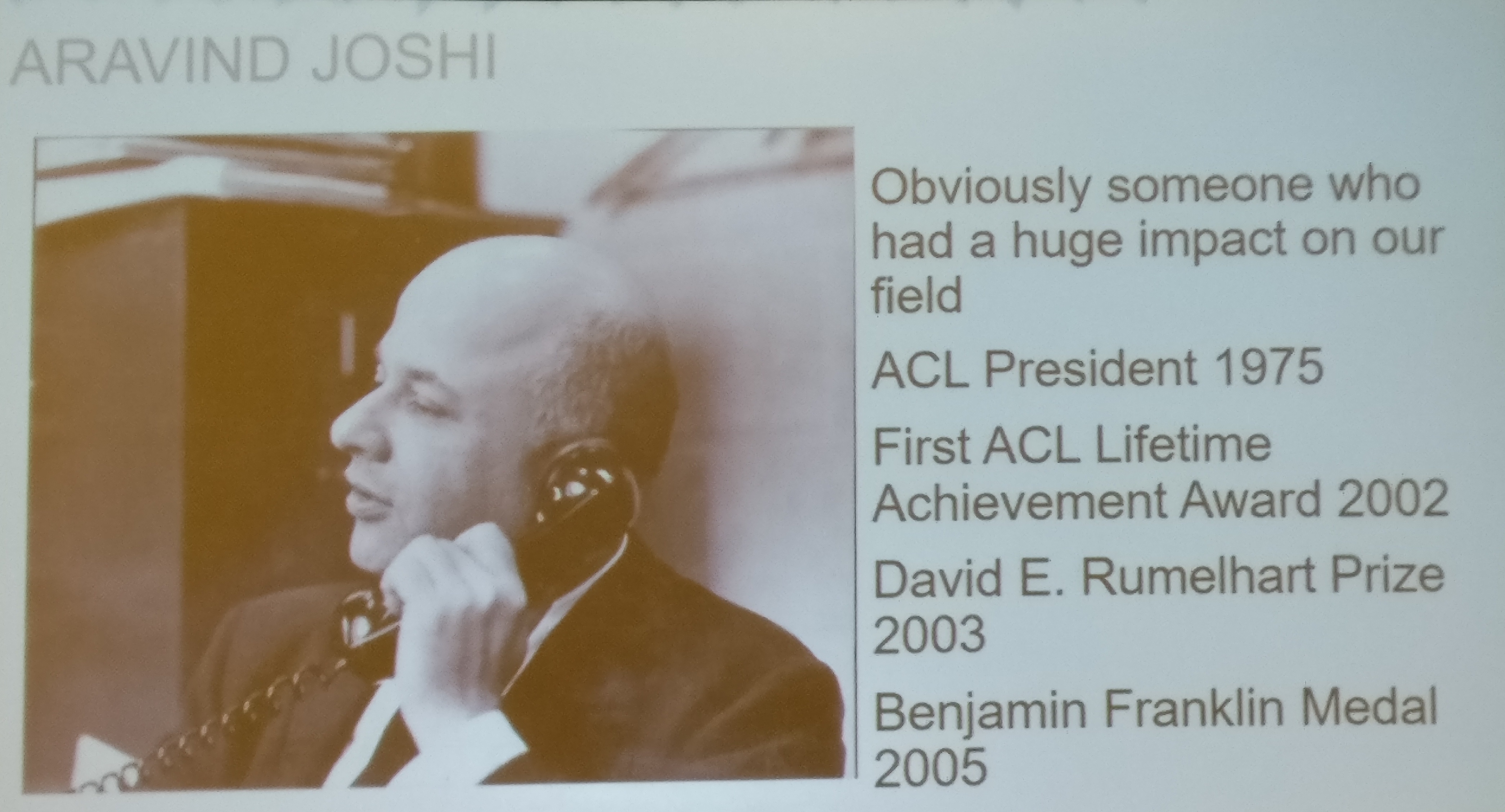

Another highlight of the conference was the test-of-time awards session, which honored people and papers that have had a huge impact on the field. At the beginning of the session, Aravind Joshi (see below), NLP pioneer and professor of Computer Science at the University of Pennsylvania, who passed away on December 31, 2017, was honored in touching tributes by close friends and people who knew him. The commemoration was a powerful reminder that research is not only about the papers we publish, but also about the people we help and the lives we touch.

Afterwards, three papers (all published in 2002) were honored with test-of-time awards. For each paper, one of the original authors presented the paper and reflected on its impact. The first paper presented was BLEU: a Method for Automatic Evaluation of Machine Translation, which introduced the original BLEU metric, now commonplace in machine translation (MT). Kishore Papineni recounted that the name was inspired by Candide, an experimental MT system at IBM in the early 1990s, and by IBM’s nickname, Big Blue, as all authors were at IBM at the time.

Before BLEU, machine translation evaluation was cumbersome, expensive, and thought to be as difficult as training an MT model. Despite BLEU’s eventual huge impact, its creators were apprehensive before its initial publication. Once published, BLEU seemed to split the community into two camps: those who loved it, and those who hated it. The authors hadn’t expected such a strong response.

BLEU is still criticized today. It was meant as a corpus-level metric: individual sentence errors should be averaged out across the entire corpus. Kishore conceded that in hindsight, they made a few mistakes. They should have included smoothing and statistical significance testing, and an initial version was also case-insensitive, which caused confusion.
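To make the corpus-level and smoothing points concrete, here is a minimal BLEU sketch in plain Python. It assumes one reference per sentence and pre-tokenized input whose casing the caller has already decided, so case sensitivity is the caller’s choice. It uses simple add-one smoothing for n ≥ 2, which is only one of several smoothing variants. It is not a substitute for standard implementations such as sacreBLEU.

```python
import math
from collections import Counter
from typing import List, Sequence

def ngrams(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def bleu(candidates: List[List[str]], references: List[List[str]],
         max_n: int = 4, smooth: bool = False) -> float:
    """Corpus-level BLEU with a single reference per sentence.
    Clipped n-gram matches and n-gram totals are summed over the whole corpus
    before taking ratios, so individual sentence errors average out.
    smooth=True adds 1 to numerator and denominator for n >= 2 (add-one smoothing)."""
    matches, totals = [0] * max_n, [0] * max_n
    cand_len = ref_len = 0
    for cand, ref in zip(candidates, references):
        cand_len += len(cand)
        ref_len += len(ref)
        for n in range(1, max_n + 1):
            cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
            # Clip each candidate n-gram count by its count in the reference.
            matches[n - 1] += sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
            totals[n - 1] += max(len(cand) - n + 1, 0)
    log_precision = 0.0
    for n in range(max_n):
        m, t = matches[n], totals[n]
        if smooth and n > 0:
            m, t = m + 1, t + 1
        if m == 0 or t == 0:
            return 0.0  # an unsmoothed zero precision makes the geometric mean zero
        log_precision += math.log(m / t) / max_n
    # Brevity penalty: punish candidates shorter than the references.
    bp = 1.0 if cand_len > ref_len else math.exp(1 - ref_len / cand_len)
    return bp * math.exp(log_precision)

short, short_ref = "the cat sat".split(), "the cat sat down".split()
full = "the cat sat on the mat".split()
print(bleu([short], [short_ref]))               # sentence level, no smoothing
print(bleu([short], [short_ref], smooth=True))  # sentence level, smoothed
print(bleu([short, full], [short_ref, full]))   # corpus level, no smoothing
```

The three-word sentence has no 4-grams, so its unsmoothed sentence-level score is 0. Scored as part of a corpus, however, it gives about 0.89 (exp(−1/9)), with only the brevity penalty remaining. This is the averaging effect the authors intended.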

In summary, BLEU has many known limitations and has inspired many colourful variants. On the whole, however, it is an understudy (as the acronym BiLingual Evaluation Understudy implies): it was never meant to replace human judgement and, notably, was never expected to last this long.

The second honored paper was Discriminative Training Methods for Hidden Markov Models: Theory and Experiments with Perceptron Algorithms by Michael Collins. It introduced the Structured Perceptron, one of the foundational and easiest to understand algorithms for general structured prediction.
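The core of the structured perceptron fits in a few dozen lines. Decode with Viterbi under the current weights and, on a mistake, add the gold sequence’s features and subtract the predicted sequence’s. The sketch below uses a toy first-order tagger with only emission and transition features and a tiny invented training set. The averaged-parameter variant that Collins also describes is omitted for brevity.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

def features(words: Sequence[str], tags: Sequence[str]) -> Dict[Tuple[str, ...], int]:
    """Global feature vector: counts of (word, tag) emissions and (prev_tag, tag) transitions."""
    phi: Dict[Tuple[str, ...], int] = defaultdict(int)
    prev = "<s>"
    for word, tag in zip(words, tags):
        phi[("emit", word, tag)] += 1
        phi[("trans", prev, tag)] += 1
        prev = tag
    return phi

def viterbi(words: Sequence[str], tags: List[str], w: Dict) -> List[str]:
    """Highest-scoring tag sequence under weights w (first-order model)."""
    # best[i][t] = (score of best path ending in tag t at position i, previous tag)
    best = [{t: (w[("trans", "<s>", t)] + w[("emit", words[0], t)], None) for t in tags}]
    for i in range(1, len(words)):
        column = {}
        for t in tags:
            prev, score = max(((p, best[i - 1][p][0] + w[("trans", p, t)]) for p in tags),
                              key=lambda pair: pair[1])
            column[t] = (score + w[("emit", words[i], t)], prev)
        best.append(column)
    tag = max(tags, key=lambda t: best[-1][t][0])
    path = [tag]
    for i in range(len(words) - 1, 0, -1):  # follow backpointers
        tag = best[i][tag][1]
        path.append(tag)
    return path[::-1]

def train_perceptron(data, tags: List[str], epochs: int = 5) -> Dict:
    w: Dict = defaultdict(float)
    for _ in range(epochs):
        for words, gold in data:
            pred = viterbi(words, tags, w)
            if pred != list(gold):  # perceptron update only on mistakes
                for f, v in features(words, gold).items():
                    w[f] += v
                for f, v in features(words, pred).items():
                    w[f] -= v
    return w

TAGS = ["D", "N", "V"]
data = [("the dog barks".split(), ["D", "N", "V"]),
        ("a cat sleeps".split(), ["D", "N", "V"])]
weights = train_perceptron(data, TAGS)
print(viterbi("a dog sleeps".split(), TAGS, weights))
```

Because decoding is the only problem-specific component, the same update rule applies to any structure for which an argmax decoder exists.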

Finally, Bo Pang looked back on her paper Thumbs up? Sentiment Classification using Machine Learning Techniques. It was the first paper of her PhD and one of the first papers on sentiment analysis, now an active research area in the NLP community. Before the paper, people had worked on classifying the subjectivity of sentences and the semantic orientation (polarity) of adjectives, so sentiment classification was a natural progression.

Over the years, the paper has accumulated more than 7,000 citations. One reason why the paper was so impactful was that the authors decided to release a dataset with it. Bo was critical of the sampling choices they made that “messed” with the natural distribution of the data. They capped the number of reviews from prolific authors, which was probably a good idea. However, they also sampled an equal number of positive and negative reviews. This set the standard that many later approaches followed, and it is still the norm for sentiment analysis today. A better idea might have been to stay true to the natural distribution of the data.
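The two sampling choices are easy to contrast in code. In this sketch, the corpus, the per-author cap of 5, and the sample sizes are all hypothetical; the actual cap used for the dataset is not given here.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Review = Tuple[str, str]  # (author, label); review text omitted for brevity

def cap_per_author(reviews: List[Review], max_per_author: int) -> List[Review]:
    """Keep at most max_per_author reviews from each author, in original order."""
    seen: Dict[str, int] = defaultdict(int)
    kept = []
    for author, label in reviews:
        if seen[author] < max_per_author:
            kept.append((author, label))
            seen[author] += 1
    return kept

def balanced_sample(reviews: List[Review], per_label: int, seed: int = 0) -> List[Review]:
    """Equal number of reviews per label, regardless of the natural label distribution."""
    rng = random.Random(seed)
    by_label: Dict[str, List[Review]] = defaultdict(list)
    for review in reviews:
        by_label[review[1]].append(review)
    return [r for label in sorted(by_label) for r in rng.sample(by_label[label], per_label)]

def natural_sample(reviews: List[Review], size: int, seed: int = 0) -> List[Review]:
    """Uniform sample, so label proportions follow the data."""
    return random.Random(seed).sample(reviews, size)

# Invented corpus: one prolific author and a mostly positive label distribution.
reviews = ([("prolific", "pos")] * 30
           + [(f"user{i}", "pos") for i in range(50)]
           + [(f"user{i}", "neg") for i in range(50, 70)])
capped = cap_per_author(reviews, max_per_author=5)
print(Counter(label for _, label in balanced_sample(capped, per_label=20)))
print(Counter(label for _, label in natural_sample(capped, size=40)))
```

The balanced sample is always 50/50. The natural sample reflects the roughly 73% positive share of the capped data, subject to sampling noise.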

I found the test-of-time award session both insightful and humbling. We can derive many insights from traditional approaches, and combining traditional with more recent approaches is often a useful direction. At the same time, even the authors of landmark papers are critical of their own work and aware of its flaws.

Dialogue systems

Another pervasive topic at the conference was dialogue systems. On the first day, researchers from PolyAI gave an excellent tutorial on Conversational AI. On the second day, Mari Ostendorf shared some of the secrets of the socialbot that won the inaugural Alexa Prize competition. She is professor of Electrical Engineering at the University of Washington and faculty advisor to the winning Sounding Board team. A socialbot in this context is a bot you can have a conversation with, in contrast to a personal assistant, which is designed to accomplish user-specified goals.

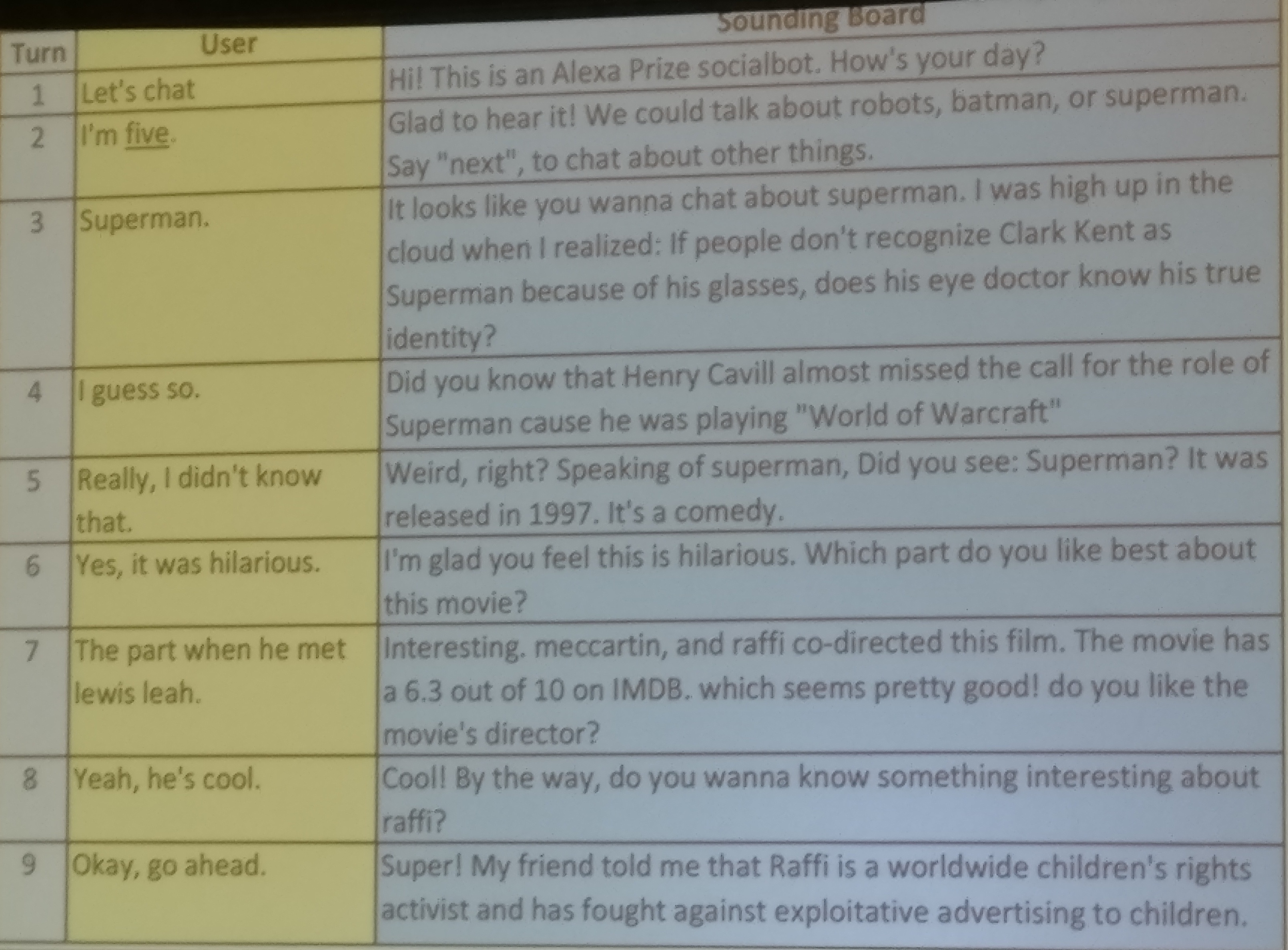

A socialbot should have the same qualities as someone you enjoy talking to at a cocktail party: it should have something interesting to say and show interest in its conversation partner. To illustrate this, an example conversation with the Sounding Board bot can be seen below.

With regard to saying something interesting, the team found that users react positively to learning something new but negatively to old or unpleasant news. A challenge here is filtering what to present to people. In addition, users lose interest when they receive too much content that they don’t care about.

Regarding expressing interest, users appreciate an acknowledgement of their reactions and requests. While some users need encouragement to express opinions, some prompts can be annoying (“The article mentioned Google. Have you heard of Google?”).

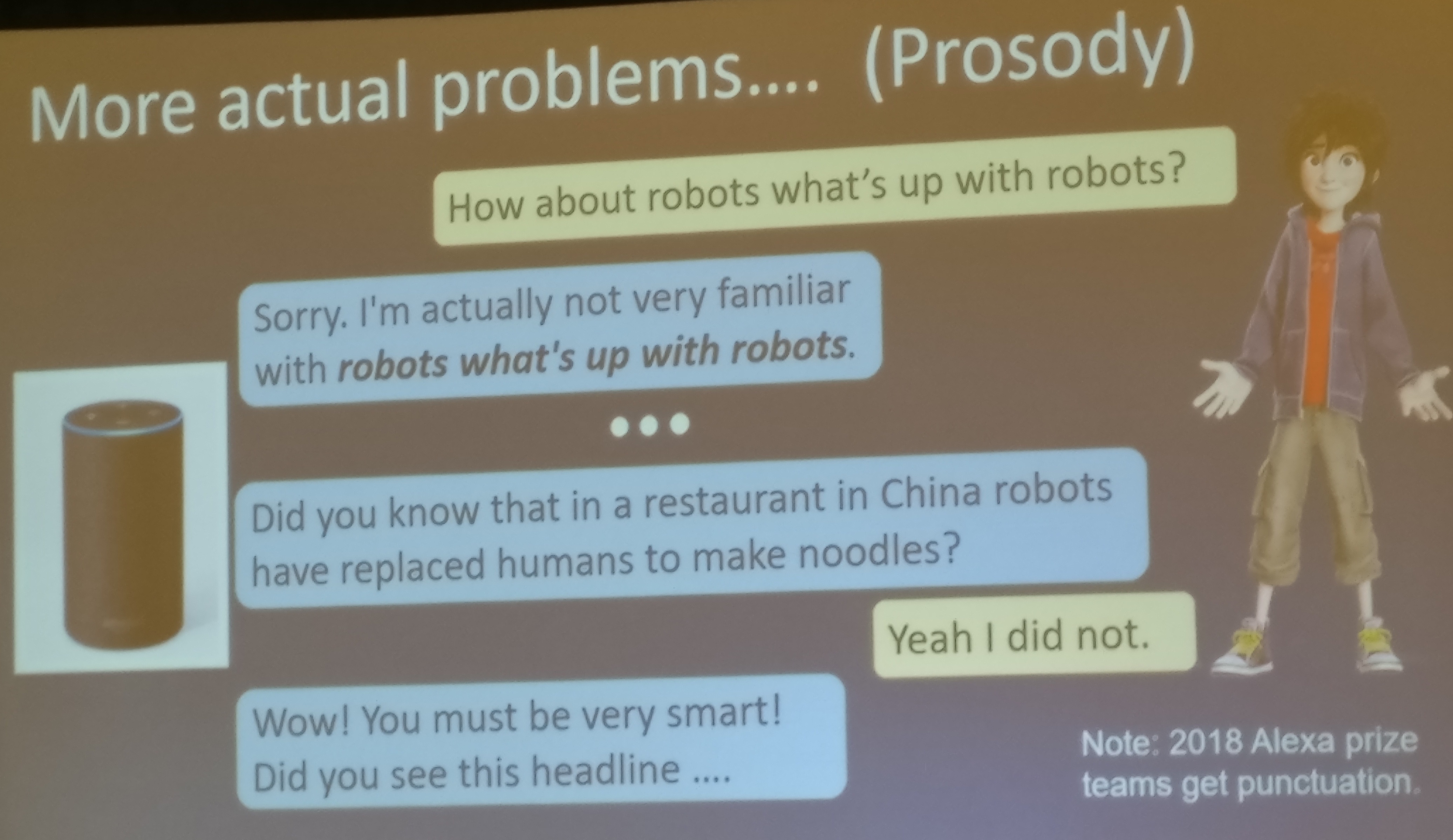

They also found that modeling prosody is important. Prosody refers to the patterns of stress and intonation in speech. Instead of sounding monotonous, a bot that incorporates prosody seems more engaging. It can better articulate intent, communicate sentiment or sarcasm, express empathy or enthusiasm, or signal a change of topic. In some cases, prosody is also essential for avoiding (often hilarious) misunderstandings: for instance, Alexa’s default voice pattern for ‘Sounding Board’ sounds like ‘Sounding Bored’.

Using a knowledge graph, the bot can have deeper conversations by staying “kind of on topic without totally staying on topic”. Mari also shared four key lessons that the team learned from working with 10M conversations:

Lesson #1: ASR is imperfect

While automatic speech recognition (ASR) has reached lower and lower word error rates in recent years, it is far from solved. In particular, ASR in dialogue agents is tuned for short commands, not conversational speech. While it can accurately recognize, for instance, the names of many obscure bands, it struggles with more conversational or informal input. In addition, current development platforms give developers an impoverished representation of speech. This representation contains neither segmentation nor prosody and often misses certain intents and affects. Problems caused by this missing information can be seen below.

In practice, the Sounding Board team found it helpful for the socialbot to behave like attendees at a cocktail party. If the intent of the entire utterance isn’t completely clear, the bot responds to whatever part it understood. For example, to a somewhat unintelligible “cause does that you’re gonna state that’s cool”, it might respond “I’m happy you like that.” They also found that asking the conversation partner to repeat an utterance often doesn’t yield a much better result; instead, it is often better to simply change the topic.
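This strategy can be sketched as a small fallback policy. The sketch assumes per-word ASR confidence scores are available, which, as noted above, development platforms may not expose. The cue lexicon, prompts, and confidence values are all hypothetical; this is not the Sounding Board implementation.

```python
from typing import Dict, List, Tuple

# Hypothetical cue lexicon: fragments the bot can react to even when the
# rest of the utterance is unintelligible.
CUE_RESPONSES: Dict[str, str] = {
    "cool": "I'm happy you like that.",
    "love": "I'm glad you enjoyed it!",
    "boring": "Fair enough, let's try something different.",
}
# Hypothetical topic-change prompts, used instead of "Could you repeat that?".
TOPIC_SWITCHES: List[str] = [
    "By the way, have you seen any good movies lately?",
    "Can I tell you a fun fact I read today?",
]

def respond(asr_words: List[Tuple[str, float]], turn: int, min_conf: float = 0.5) -> str:
    """Answer the confidently recognized part of an utterance if there is one;
    otherwise change the topic rather than asking the user to repeat."""
    for word, confidence in asr_words:
        if confidence >= min_conf and word.lower() in CUE_RESPONSES:
            return CUE_RESPONSES[word.lower()]
    return TOPIC_SWITCHES[turn % len(TOPIC_SWITCHES)]

# ASR hypothesis with made-up per-word confidences.
utterance = [("cause", 0.3), ("does", 0.2), ("that", 0.4), ("you're", 0.3),
             ("gonna", 0.4), ("state", 0.2), ("that's", 0.5), ("cool", 0.9)]
print(respond(utterance, turn=0))
print(respond([("mumble", 0.2), ("words", 0.3)], turn=1))
```
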

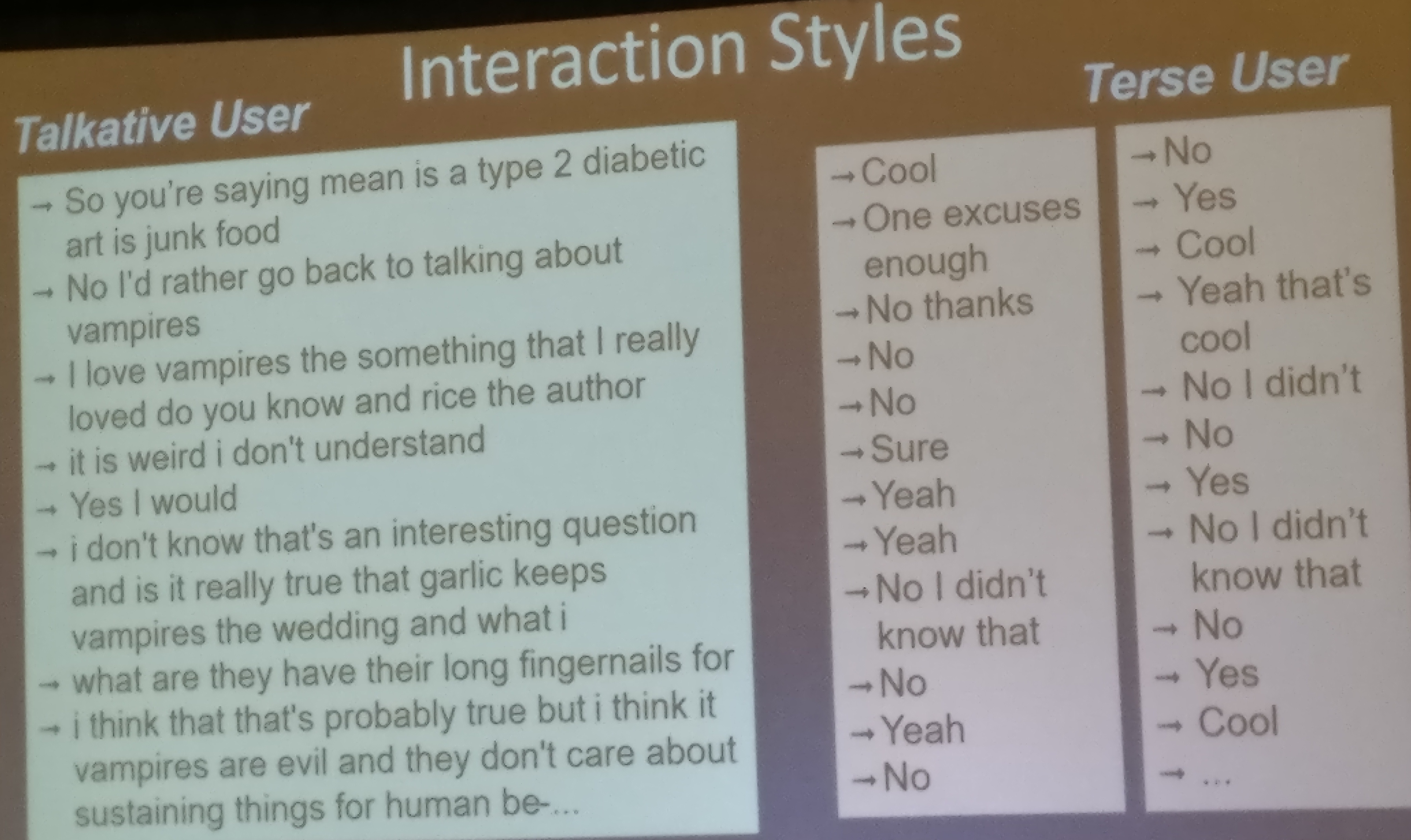

Lesson #2: Users vary

The team discovered something that might seem obvious but has wide-ranging consequences in practice: users vary a lot along many dimensions. Users have different interests, different opinions on issues, and different senses of humour. Interaction styles similarly range from terse to verbose (seen below) and from polite to rude. Users also interact with the bot in pursuit of widely different goals. They may seek information, want to share opinions, try to get to know the bot, or probe its limitations. Modeling the user involves both deciding what to say and listening to what the user says.

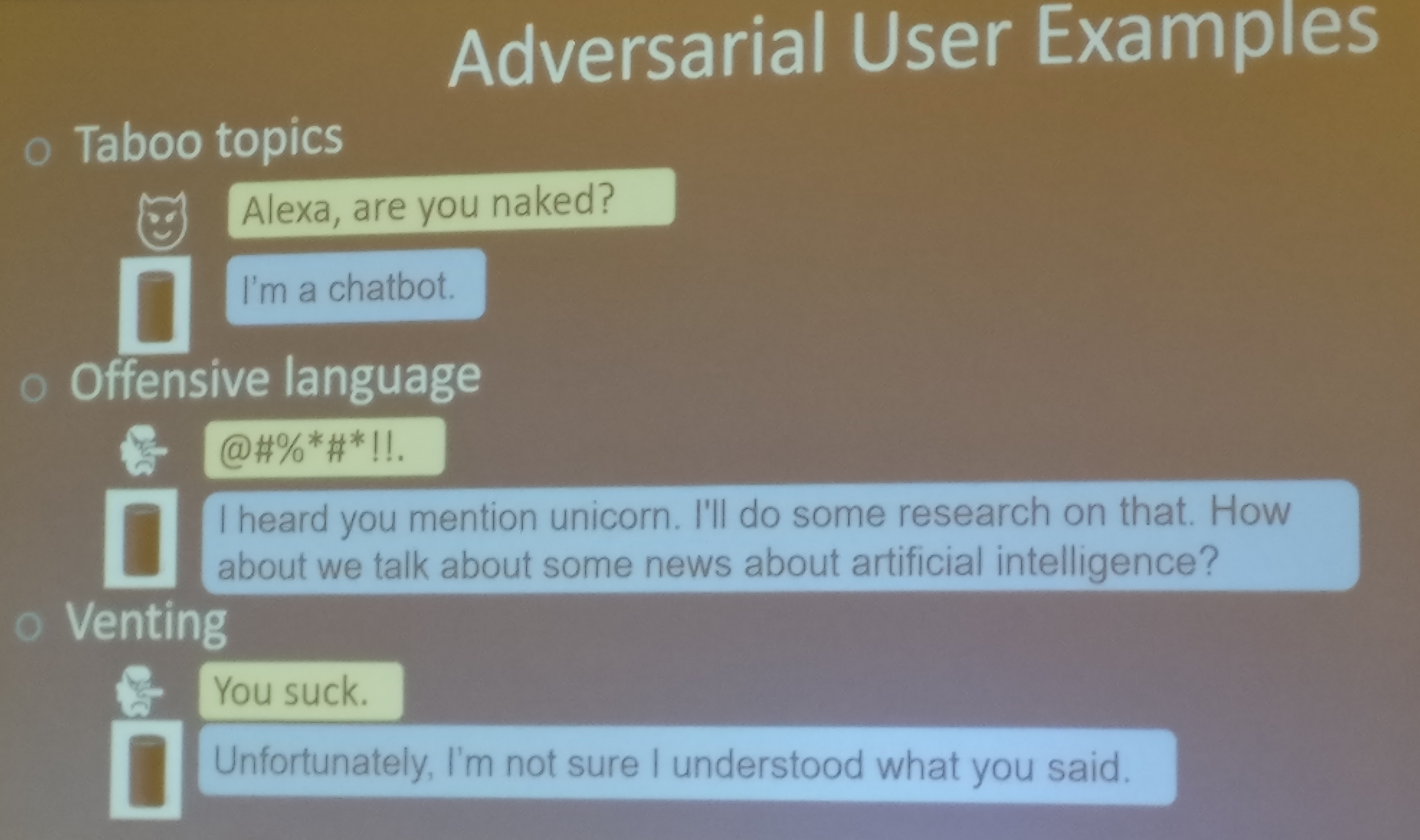

Lesson #3: It’s a wild world

There is a lot of problematic content, and many issues need to be navigated. Problematic content can consist of offensive or controversial material or of sensitive and depressing topics. In addition, users might act adversarially and deliberately try to get the bot to produce such content. Examples of such behaviour can be seen below. Other users (e.g. those suffering from a mental illness) are in turn risky to deal with. Overall, filtering content is a hard problem. As one example, Mari mentioned that early in the project, the bot made the following witty remark / joke: “You know what I realized the other day? Santa Claus is the most elaborate lie ever told”.

Lesson #4: Shallow conversations

The goal of the Alexa Prize was to sustain a 20-minute conversation. Given current limited understanding and generation capabilities, the team focused on a strategy of shallow conversations. Even in shallow conversations, however, switching to related topics can still be fragile due to word sense ambiguities. Notably, among the top 3 Alexa Prize teams, only one team used Deep Learning, and only for reranking.

Overall, a competition that brings academia and industry together, such as the Alexa Prize, is useful because it gives researchers access to data from real users at a large scale. This access shapes the problems they choose to solve and the resulting solutions. It also teaches students about complete problems and real-world challenges, and it provides funding for students. The team found it especially helpful to have someone from industry available to support the partnership, give advice on tools, and provide feedback on progress.

On the other hand, privacy-preserving access to user data, such as prosody data for spoken language and speaker/author demographics for text and speech, still needs work. For spoken dialogue systems, richer speech interfaces are also important. Lastly, while competitions are great kickstarters, they still require a substantial engineering effort.

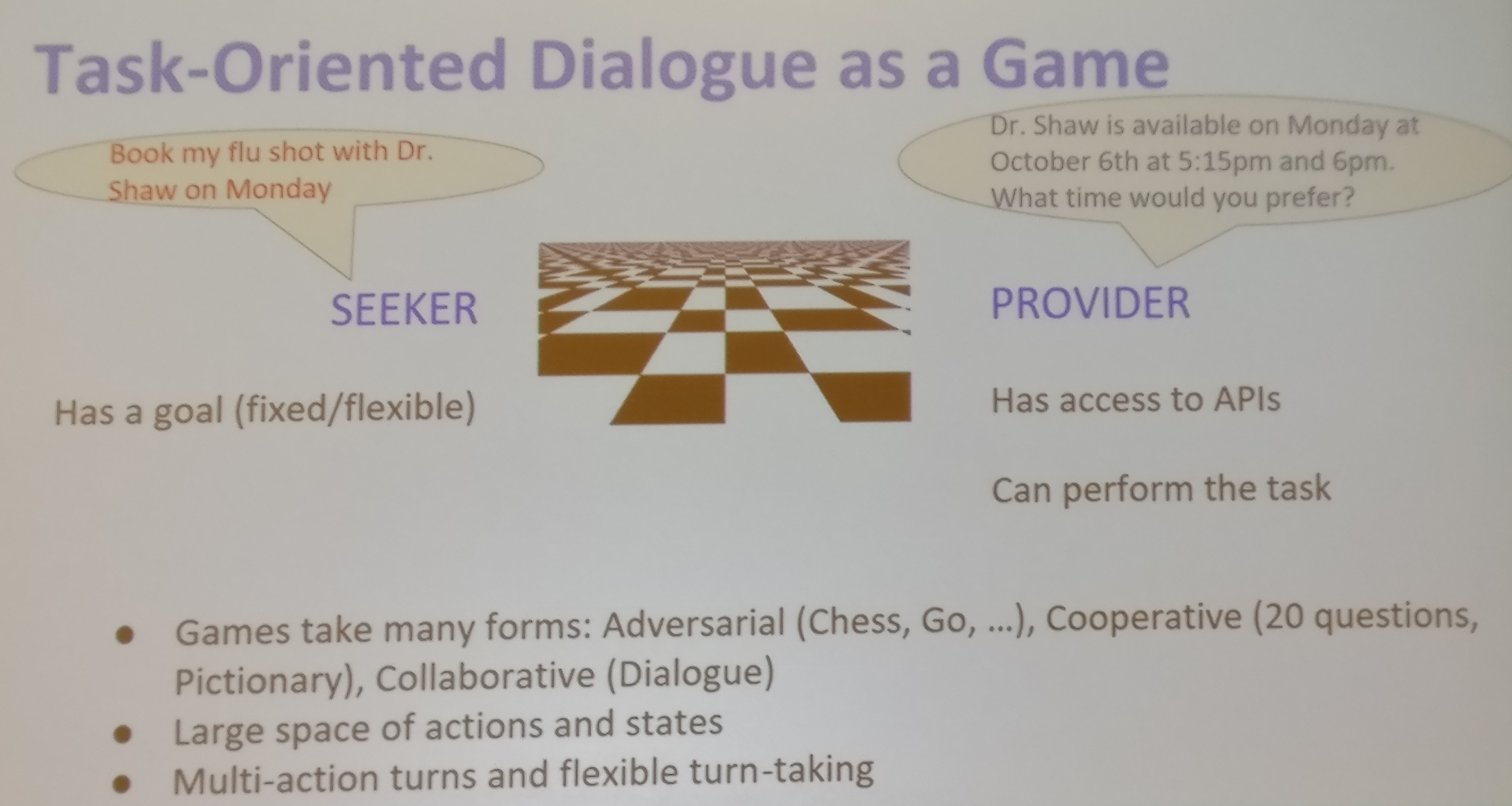

Finally, in her keynote address on dialogue models, Dilek Hakkani-Tür, Research Scientist at Google Research, argued that in recent years, chitchat systems and task-oriented dialogue systems have been converging. However, current production systems are essentially walled gardens of domains that only allow directed dialogue and limited personalization. Models have to be learned from developers using a limited set of APIs and tools, and they are hard to scale. At the same time, conversation is a skill even for humans. It is thus important to learn from real users, not from developers.

To learn about users, we can leverage personal knowledge graphs built from user assertions such as “show me directions to my daughter’s school”. Semantic recall is also important: remembering entities from previous user interactions, e.g. “Do you remember the restaurant we ordered Asian food from?”. Personalized natural language understanding can also leverage data from the user’s device (in a privacy-preserving manner) and employ user modeling for dialogue generation.
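A personal knowledge graph and semantic recall can be sketched with a tiny triple store. The class, relation names, and entities below are hypothetical and chosen only to mirror the two examples in the text. Extracting the triples from user utterances, the hard part, is assumed to have happened already.

```python
from typing import List, Set, Tuple

class PersonalKG:
    """Tiny per-user triple store (a hypothetical design, for illustration)."""
    def __init__(self) -> None:
        self.triples: Set[Tuple[str, str, str]] = set()

    def add(self, subj: str, rel: str, obj: str) -> None:
        self.triples.add((subj, rel, obj))

    def objects(self, subj: str, rel: str) -> List[str]:
        return sorted(o for s, r, o in self.triples if s == subj and r == rel)

    def resolve(self, path: List[str], start: str = "user") -> List[str]:
        """Follow a chain of relations, e.g. ["daughter", "school"] for "my daughter's school"."""
        frontier = [start]
        for rel in path:
            frontier = [o for s in frontier for o in self.objects(s, rel)]
        return frontier

    def recall(self, rel: str, **constraints: str) -> List[str]:
        """Semantic recall: entities linked to the user by rel that satisfy all constraints."""
        return [e for e in self.objects("user", rel)
                if all((e, k, v) in self.triples for k, v in constraints.items())]

kg = PersonalKG()
# Facts assumed to have been extracted from earlier user assertions (names are made up).
kg.add("user", "daughter", "Maya")
kg.add("Maya", "school", "Lincoln Elementary")
kg.add("user", "ordered_from", "Golden Dragon")
kg.add("Golden Dragon", "cuisine", "Asian")
kg.add("user", "ordered_from", "Luigi's")
kg.add("Luigi's", "cuisine", "Italian")

print(kg.resolve(["daughter", "school"]))
print(kg.recall("ordered_from", cuisine="Asian"))
```
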

For learning from users, actions can be learned from user demonstrations and/or explanations, or from experience and feedback (mostly using RL for dialogue systems). In both cases, transcription and annotation are bottlenecks. A user cannot be expected to transcribe or annotate data; on the other hand, it is easier for a user to give feedback after the system repeats an utterance.

In general, task-oriented dialogue can be treated as a game between two parties (see below). The seeker has a goal, which may be fixed or flexible, while the provider has access to APIs to perform the task. The seeker’s dialogue policy decides the next seeker action. This policy is typically modeled using “user simulators”, which are often sequence-to-sequence models. Most recently, hierarchical sequence-to-sequence models have been used, with a focus on following the user goals and generating diverse responses.
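The seeker/provider game can be sketched as two policies exchanging dialogue acts. To keep the example small, the seeker here is a rule-based simulator with a fixed goal rather than a learned sequence-to-sequence model. The act types, slots, and the restaurant “API” are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

Action = Tuple[str, Optional[str], Optional[str]]  # (act type, slot, value)

class Seeker:
    """Rule-based user simulator with a fixed goal: a stand-in for the learned
    sequence-to-sequence user simulators mentioned in the talk."""
    def __init__(self, goal: Dict[str, str]):
        self.goal = goal

    def act(self, provider_action: Optional[Action]) -> Action:
        if provider_action is None:  # opening turn: state one constraint
            slot = next(iter(self.goal))
            return ("inform", slot, self.goal[slot])
        kind, slot, value = provider_action
        if kind == "request" and slot in self.goal:
            return ("inform", slot, self.goal[slot])
        if kind == "offer" and value is not None:
            return ("accept", None, value)
        return ("bye", None, None)

class Provider:
    """Collects required slots, then calls a task API (here a hypothetical lookup)."""
    def __init__(self, required: List[str], api: Callable[[Dict[str, str]], Optional[str]]):
        self.required, self.api = required, api
        self.state: Dict[str, str] = {}

    def act(self, seeker_action: Action) -> Action:
        kind, slot, value = seeker_action
        if kind == "inform" and slot is not None and value is not None:
            self.state[slot] = value
        missing = [s for s in self.required if s not in self.state]
        if missing:
            return ("request", missing[0], None)
        return ("offer", None, self.api(self.state))

def run_dialogue(seeker: Seeker, provider: Provider, max_turns: int = 10) -> List[Action]:
    transcript: List[Action] = []
    provider_action: Optional[Action] = None
    for _ in range(max_turns):
        seeker_action = seeker.act(provider_action)
        transcript.append(seeker_action)
        if seeker_action[0] in ("accept", "bye"):
            break
        provider_action = provider.act(seeker_action)
        transcript.append(provider_action)
    return transcript

# Hypothetical restaurant "API" backed by a dictionary.
RESTAURANTS = {("thai", "north"): "Bangkok House"}
def find_restaurant(state: Dict[str, str]) -> Optional[str]:
    return RESTAURANTS.get((state["cuisine"], state["area"]))

for turn in run_dialogue(Seeker({"cuisine": "thai", "area": "north"}),
                         Provider(["cuisine", "area"], find_restaurant)):
    print(turn)
```

Replacing either rule-based policy with a learned one leaves the game loop unchanged. This is what allows seeker and provider policies to be trained against each other.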

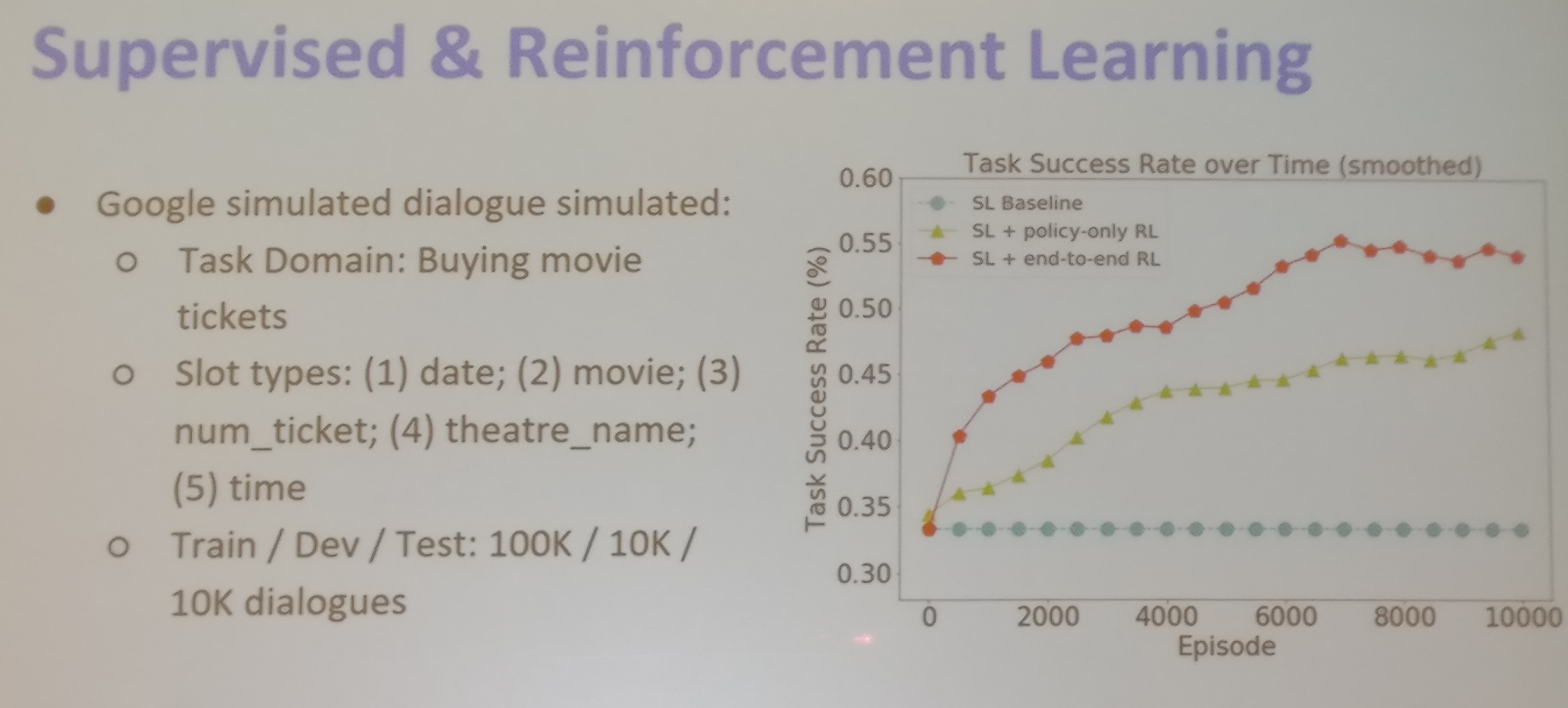

The provider’s dialogue policy similarly determines the next provider action and is learned via either supervised or reinforcement learning (RL). For RL, reward estimation and policy shaping are important. Recent approaches learn seeker and provider policies jointly. End-to-end dialogue models trained with deep RL are critical for learning from user feedback, while component-wise training benefits from additional data for each component.

In practice, a combination of supervised and reinforcement learning works best. It outperforms both purely supervised learning and supervised learning with policy-only RL, as can be seen below.
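The mechanics of combining the two (supervised pretraining on demonstrations, then REINFORCE fine-tuning on user feedback) can be sketched for a single dialogue state with a softmax policy. Everything here is a hypothetical toy: the actions, the reward function standing in for simulated user feedback, and the learning rates. It does not reproduce the comparison reported in the talk.

```python
import math
import random
from typing import Callable, List

ACTIONS = ["request_info", "offer", "confirm"]

def policy(logits: List[float]) -> List[float]:
    """Softmax over action logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]

def grad_log_prob(probs: List[float], action: int) -> List[float]:
    """Gradient of log softmax(logits)[action] w.r.t. the logits: onehot(action) - probs."""
    return [(1.0 if a == action else 0.0) - p for a, p in enumerate(probs)]

def supervised_step(logits: List[float], gold_action: int, lr: float) -> List[float]:
    """One gradient-ascent step on the log-likelihood of a demonstrated action."""
    g = grad_log_prob(policy(logits), gold_action)
    return [x + lr * gi for x, gi in zip(logits, g)]

def reinforce_step(logits: List[float], reward_fn: Callable[[int], float],
                   rng: random.Random, lr: float, baseline: float = 0.0) -> List[float]:
    """REINFORCE: sample an action, observe a reward, scale the log-prob gradient by it."""
    probs = policy(logits)
    action = rng.choices(range(len(ACTIONS)), weights=probs)[0]
    advantage = reward_fn(action) - baseline
    g = grad_log_prob(probs, action)
    return [x + lr * advantage * gi for x, gi in zip(logits, g)]

def simulated_feedback(action: int) -> float:
    """Hypothetical user feedback: only offering a result is rewarded."""
    return 1.0 if ACTIONS[action] == "offer" else 0.0

# Stage 1: demonstrations in this state always show "confirm".
logits = [0.0, 0.0, 0.0]
for _ in range(20):
    logits = supervised_step(logits, gold_action=ACTIONS.index("confirm"), lr=0.5)
after_supervised = policy(logits)

# Stage 2: fine-tune on feedback, starting from the supervised policy.
rng = random.Random(0)
for _ in range(300):
    logits = reinforce_step(logits, simulated_feedback, rng, lr=0.5)
after_rl = policy(logits)
print([round(p, 3) for p in after_supervised], [round(p, 3) for p in after_rl])
```

The supervised stage gives the policy a sensible starting point. The RL stage then shifts probability towards whatever the feedback rewards, which demonstrations alone cannot reveal.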

Overall, the conference was a great opportunity to see incredible research and meet great people. See you all at ACL 2018!