Learn how to build MMMs for different countries the right way

Imagine that you work in a marketing department and want to check whether your advertising investments paid off. People who have read my other articles know a way to do this: marketing mix modeling! This method is especially interesting because it also works in a cookieless world.

I’ve also covered Bayesian marketing mix modeling, a way to get more robust models and uncertainty estimates for everything you forecast.

The Bayesian approach works exceptionally well for homogeneous data, meaning that the effects of your advertising spendings are comparable across your dataset. But what happens when we have a heterogeneous dataset, for example, spendings across several countries? Two obvious ways to deal with it are the following:

- Ignore the fact that there are several countries in the dataset and build a single big model.

- Build one model per country.

Unfortunately, both methods have their disadvantages. Long story short: ignoring the country leaves you with a model that is too coarse and will probably underfit. On the other hand, if you build one model per country, you might end up with too many models to keep track of. Even worse, if some countries don’t have many data points, the models for those countries might overfit.

Usually, it’s more efficient to create a hybrid model that sits somewhere between these two approaches: Bayesian hierarchical modeling! I cover it in more detail in a separate article.

How exactly can we benefit from this in our case, you ask? For example, Bayesian hierarchical modeling could produce a model where the TV carryover values in neighboring countries are not too far apart from each other, which counters overfitting.

However, if the data clearly suggests that the parameters are in fact completely different, the Bayesian hierarchical model will be able to pick this up as well, given enough data.
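A toy calculation can make this trade-off concrete. The sketch below is not a marketing mix model: it assumes a plain normal model with known variances, in which the partially pooled estimate of a country’s parameter is a precision-weighted average of the country’s own estimate and the mean across countries. The function name and all numbers are made up for illustration.

```python
def partial_pooling_mean(group_mean, n_obs, noise_var, pop_mean, pop_var):
    """Posterior mean of a group parameter in a normal-normal model.

    Assumes known noise variance and a known population distribution,
    which is a simplification compared to a full hierarchical model.
    """
    data_precision = n_obs / noise_var   # grows with the number of observations
    prior_precision = 1.0 / pop_var      # strength of the pull toward the group of countries
    return (data_precision * group_mean + prior_precision * pop_mean) / (
        data_precision + prior_precision
    )


# Hypothetical setup: across countries, the parameter is centered at 0.5.
pop_mean, pop_var, noise_var = 0.5, 0.01, 0.25

# Both countries have the same raw estimate of 0.8, but very different sample sizes.
large = partial_pooling_mean(0.8, n_obs=200, noise_var=noise_var,
                             pop_mean=pop_mean, pop_var=pop_var)
small = partial_pooling_mean(0.8, n_obs=5, noise_var=noise_var,
                             pop_mean=pop_mean, pop_var=pop_var)

print(f"200 observations: {large:.3f}")  # stays close to its own estimate
print(f"  5 observations: {small:.3f}")  # pulled strongly toward 0.5
```

With many observations the country keeps an estimate close to its own data, while the country with few observations is pulled toward the shared mean. This is the behavior the hierarchical model is meant to provide.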

In the following, I’ll show you how to combine Bayesian marketing mix modeling (BMMM) with Bayesian hierarchical modeling (BHM) to create, maybe you guessed it, a Bayesian hierarchical marketing mix model (BHMMM) in Python using PyMC.

BHMMM = BMMM + BHM

Researchers from the former Google Inc. have also written a paper about this idea that I encourage you to check out later as well. [1] You should be able to understand this paper quite well after you have understood my articles about BMMM and BHM.

Note that I don’t use PyMC3 anymore but PyMC, which is a facelift of this great library. Fortunately, if you knew PyMC3 before, you will be able to pick up PyMC as well. Let’s get started!

First, we’ll load a synthetic dataset that I made up myself, which is fine for training purposes.

    import pandas as pd

    dataset_link = "https://raw.githubusercontent.com/Garve/datasets/fdb81840fb96faeda5a874efa1b9bbfb83ce1929/bhmmm.csv"
    data = pd.read_csv(dataset_link)

    # Features: everything except the target and the date column
    X = data.drop(columns=["Sales", "Date"])
    # Target: the sales we want to explain
    y = data["Sales"]

Now, let me copy over some functions from my other article, one for computing exponential saturation and one for dealing with carryovers. I adjusted them, i.e. changed theano.tensor to aesara.tensor, and tt to at, to work with the new PyMC.

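    import aesara.tensor as at

    def saturate(x, a):
        # Exponential saturation: maps non-negative spendings into [0, 1)
        return 1 - at.exp(-a*x)

    def carryover(x, strength, length=21):
        # Geometric weights: strength^0, strength^1, ..., strength^(length-1)
        w = at.as_tensor_variable(
            [at.power(strength, i) for i in range(length)]
        )

        # Row i holds x shifted by i time steps, padded with zeros at the start
        x_lags = at.stack(
            [at.concatenate([
                at.zeros(i),
                x[:x.shape[0]-i]
            ]) for i in range(length)]
        )

        # Weighted sum over all lags for each time step
        return at.dot(w, x_lags)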
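To see what these two transformations do to actual numbers, here is a minimal plain-Python sketch of the same formulas. It is only meant for building intuition and is not a replacement for the tensor versions used inside the model. The spend values and the parameter values `strength=0.5` and `a=0.01` are made up.

```python
import math


def saturate(x, a):
    """Exponential saturation, applied element-wise to a list of values."""
    return [1 - math.exp(-a * value) for value in x]


def carryover(x, strength, length=21):
    """Geometric carryover: each day also receives strength**lag times the spend from `lag` days ago."""
    result = []
    for t in range(len(x)):
        total = 0.0
        # Only look back as far as data exists, and at most length - 1 days.
        for lag in range(min(length, t + 1)):
            total += strength ** lag * x[t - lag]
        result.append(total)
    return result


# A single burst of spending on day 0, nothing afterwards.
spend = [100.0, 0.0, 0.0, 0.0]

adstocked = carryover(spend, strength=0.5)
print(adstocked)  # [100.0, 50.0, 25.0, 12.5]

saturated = saturate(adstocked, a=0.01)
print([round(value, 3) for value in saturated])
```

The spend on day 0 keeps having an effect on the following days, halving each day, and the saturation then squeezes the result into the range between 0 and 1, so doubling the spend less than doubles the effect.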

We can start modeling now.

Before we start with the full model, we could start by building separate models, just to see what happens and to have a kind of baseline.

Separate Models

If we follow the methodology from my earlier article on Bayesian marketing mix modeling, we get for Germany:

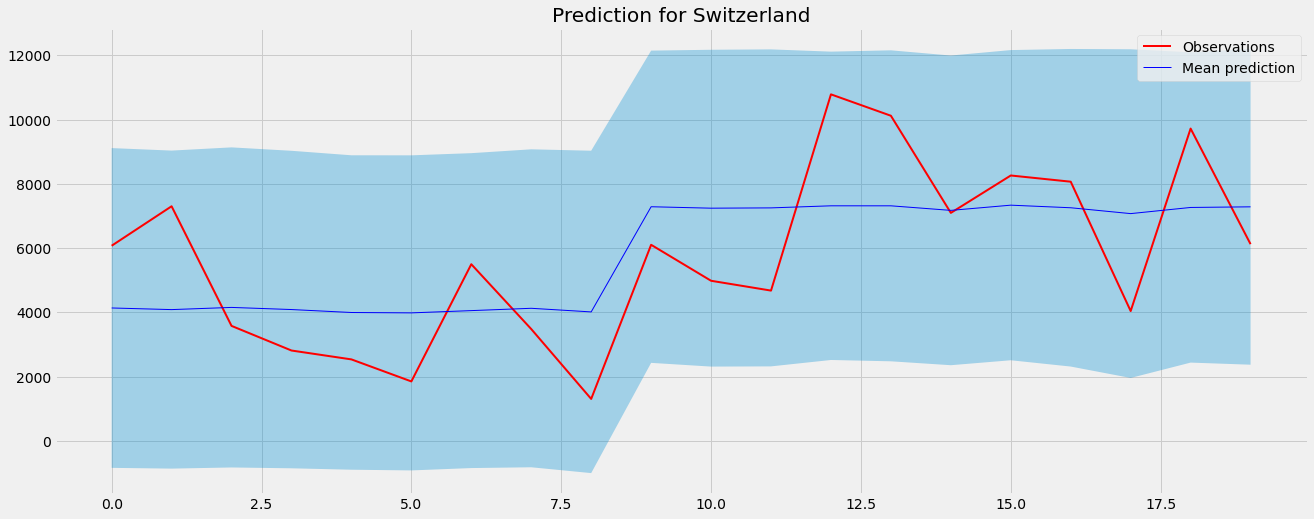

A pretty good fit. However, for Switzerland we only have 20 observations, so the predictions are not too great:

This is exactly the reason why the separate models approach is sometimes problematic. There is reason to believe that the people in Switzerland are not completely different from the people in Germany when it comes to the influence of media on them, and a model should be able to capture this.

We can also see what the Switzerland model has learned about the parameters:

The posteriors are still quite wide due to the lack of Switzerland data points. You can see this from the car_ parameters on the right: the 94% HDI of the carryovers nearly spans the entire possible range between 0 and 1.
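If the term is unfamiliar: the 94% HDI (highest density interval) is the narrowest interval that contains 94% of the posterior mass. The following sketch computes it from samples in plain Python, assuming a unimodal posterior. The helper `hdi` and the two sets of draws are hypothetical stand-ins, not output of the models in this article.

```python
import math
import random


def hdi(samples, mass=0.94):
    """Return the narrowest interval that contains `mass` of the samples."""
    ordered = sorted(samples)
    n = len(ordered)
    k = min(n, math.ceil(mass * n))  # number of samples inside the interval
    # Slide a window of k consecutive sorted samples and keep the narrowest one.
    start = min(range(n - k + 1), key=lambda i: ordered[i + k - 1] - ordered[i])
    return ordered[start], ordered[start + k - 1]


rng = random.Random(42)

# Made-up "posterior draws" of a carryover parameter between 0 and 1:
vague = [rng.betavariate(1, 1) for _ in range(5000)]       # data says almost nothing
informed = [rng.betavariate(20, 20) for _ in range(5000)]  # data constrains the value

for label, draws in [("vague", vague), ("informed", informed)]:
    low, high = hdi(draws)
    print(f"{label}: 94% HDI = [{low:.2f}, {high:.2f}]")
```

A vague posterior yields an interval that covers almost all of the range from 0 to 1, which is what the Switzerland model shows, while a well-informed posterior yields a much narrower one.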

Let us build a proper BHMMM now, so that Switzerland in particular can benefit from the larger amount of data that we have from Germany and Austria.

PyMC Implementation

We introduce some hyperpriors that shape the underlying distribution over all countries. For example, the carryover is modeled using a Beta distribution. This distribution has two parameters α and β, and we reserve two hyperpriors car_alpha and car_beta to model these.

In line 15, you can see how the hyperpriors are then used to define the carryover per country and channel. Furthermore, I use more tuning steps than usual, 3000 instead of 1000, because the model is quite complex. More tuning steps give the sampler more time to adapt before the actual draws are kept, which makes inference easier.
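The two-level structure described here can be made tangible without PyMC by simulating it. The following is only a sketch of the prior structure, not the model from this article: the Gamma distributions used for the hyperpriors, the function name, and the channel names are assumptions chosen for illustration. In a PyMC model, the inner draw would correspond to a `pm.Beta` variable that receives `alpha=car_alpha` and `beta=car_beta`.

```python
import random


def simulate_carryover_priors(countries, channels, seed=0):
    """Draw one set of carryover values from a two-level (hierarchical) prior."""
    rng = random.Random(seed)

    # Level 1: hyperpriors shared by all countries and channels.
    # Assumption: Gamma(shape=2, scale=2) is an arbitrary illustrative choice.
    car_alpha = rng.gammavariate(2.0, 2.0)
    car_beta = rng.gammavariate(2.0, 2.0)

    # Level 2: one carryover per country and channel, all drawn from the
    # same Beta(car_alpha, car_beta), which ties the countries together.
    carryovers = {
        f"car_{channel}_{country}": rng.betavariate(car_alpha, car_beta)
        for country in countries
        for channel in channels
    }
    return car_alpha, car_beta, carryovers


countries = ["Germany", "Austria", "Switzerland"]
channels = ["TV", "Banners"]  # hypothetical channel names

car_alpha, car_beta, carryovers = simulate_carryover_priors(countries, channels)
print(f"car_alpha = {car_alpha:.2f}, car_beta = {car_beta:.2f}")
for name, value in carryovers.items():
    print(f"{name}: {value:.2f}")
```

Because every country-specific carryover comes from the same Beta distribution, learning about car_alpha and car_beta from Germany and Austria also narrows down what is plausible for Switzerland.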

And that’s it!

Checking the Output

Let us only take a look at how well the model captures the data.

I will not conduct any real checks with metrics now, but from the plots, we can see that the fit for Germany and Austria looks quite good.

If we compare Switzerland from the BHMMM to the version from the BMMM from before, we can also see that it looks much better now.

This is only possible because we have given Switzerland some context using the data of other, similar countries.

We can also see how the posteriors of the carryovers of Switzerland narrowed down:

Some distributions are still a bit wild, and we would have to take a deeper look into how to fix this. There might be sampling issues or the priors might be bad, among other things. However, we will not do this here.

In this article, we have taken a quick look at two different Bayesian concepts:

- Bayesian marketing mix modeling to analyze marketing spendings, as well as

- Bayesian hierarchical modeling.

We then forged an even better Bayesian marketing mix model by combining both approaches. This is especially useful if you deal with some hierarchy, for example, when building a marketing mix model for several related countries.

This method works so well because it gives the model context: if you tell the model to produce a forecast for one country, it can take the information about other countries into account. This is crucial if the model has to operate on a dataset that is otherwise too small.

Another thought that I want to give you on your way is the following: In this article, we used a country hierarchy. However, you can think of other hierarchies as well, for example, a channel hierarchy. A channel hierarchy can arise if you say that different channels should not behave too differently, for example if your model does not just take banner spendings but banner spendings on website A and banner spendings on website B, where the user behavior on websites A and B is not too different.

[1] Y. Sun, Y. Wang, Y. Jin, D. Chan, J. Koehler, Geo-level Bayesian Hierarchical Media Mix Modeling (2017)

I hope that you learned something new, interesting, and useful today. Thanks for reading!


If you have any questions, write me on LinkedIn!