Intuition is very important to understanding a concept. An intuitive grasp of a tool or concept means you can zoom out to the level of abstraction where you get the whole picture in view. I’ve spent the last four years building and deploying machine learning tools at AI startups. In that time, the technology has exploded in popularity, particularly in my area of specialization, natural language processing (NLP).

At a startup, I don’t often have the luxury of spending months on research and testing—if I do, it’s a bet that makes or breaks the product.

A sharp intuition for how a model will perform—where it will excel and where it will fall down—is essential for thinking through how it can be integrated into a successful product. With the right UX around it, even an imperfect model feels magical. Built wrong, the rare miss produced by even the most rock-solid system looks like a disaster.

A lot of my sense for this comes from the thousands of hours I’ve spent working with these models, seeing where they fall short and where they surprise me with their successes. But if there’s one concept that most informs my intuitions, it’s text embeddings. The ability to take a chunk of text and turn it into a vector, subject to the laws of mathematics, is fundamental to natural language processing. A good grasp of text embeddings will greatly improve your capacity to reason intuitively about how NLP (and a lot of other ML models) should best fit into your product.

So let’s stop for a moment to appreciate text embeddings.

What’s an embedding?

A text embedding is a piece of text projected into a high-dimensional latent space. The position of our text in this space is a vector, a long sequence of numbers. Think of the two-dimensional Cartesian coordinates from algebra class, but with more dimensions, often 768 or 1536.

For example, here’s what the OpenAI text-embedding-ada-002 model does with the paragraph above. Each vertical band in this plot represents a value in one of the embedding space’s 1536 dimensions.

Mathematically, an embedding space, or latent space, is defined as a manifold in which similar items are positioned closer to one another than less similar items. In this case, sentences that are semantically similar should have similar embedded vectors and thus be closer together in the space.

We can frame a lot of useful tasks in terms of text similarity.

- Search: How similar is a query to a document in your database?

- Spam filtering: How close is an email to examples of spam?

- Content moderation: How close is a social media message to known examples of abuse?

- Conversational agent: Which examples of known intents are closest to the user’s message?

In these cases, you can pre-calculate the embeddings for your targets (i.e. the documents you want to search or the examples for classification) and store them in an indexed database. This lets you capture the powerful natural language understanding of deep neural models as text embeddings as you add new items to your database, then run your search or classifier without expensive GPU compute.
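As a rough sketch of that pattern, the snippet below ranks a few stored vectors against a query vector. The three-dimensional vectors, the document titles, and the `search` helper are all made up for illustration; a real system would get its vectors from an embedding model and would usually use a vector index rather than a linear scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical, hand-made "embeddings" that were computed ahead of time.
document_index = {
    "How to train a puppy": [0.9, 0.1, 0.0],
    "Caring for senior cats": [0.1, 0.9, 0.1],
    "Quarterly tax checklist": [0.0, 0.1, 0.9],
}


def search(query_vector, index, top_k=2):
    """Rank stored documents by cosine similarity to the query vector."""
    scored = [
        (cosine_similarity(query_vector, vector), title)
        for title, vector in index.items()
    ]
    scored.sort(reverse=True)
    return [title for _, title in scored[:top_k]]


# Pretend this is the embedding of a query about dog obedience.
query = [0.8, 0.2, 0.1]
results = search(query, document_index)
print(results)
```

Only the query needs to be embedded at request time; everything else is arithmetic over stored numbers.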

This direct comparison of text similarity is just one application for text embeddings. Often, embeddings have a place in ML algorithms or neural architectures with further task-specific components built on top. I’ve largely elided these details in the discussion below.

Distance

I mentioned above that a key feature of an embedding space is that it preserves distance. The high-dimensional vectors used in text embeddings and LLMs aren’t immediately intuitive. But the basic spatial intuition stays (mostly) the same as we scale things down.

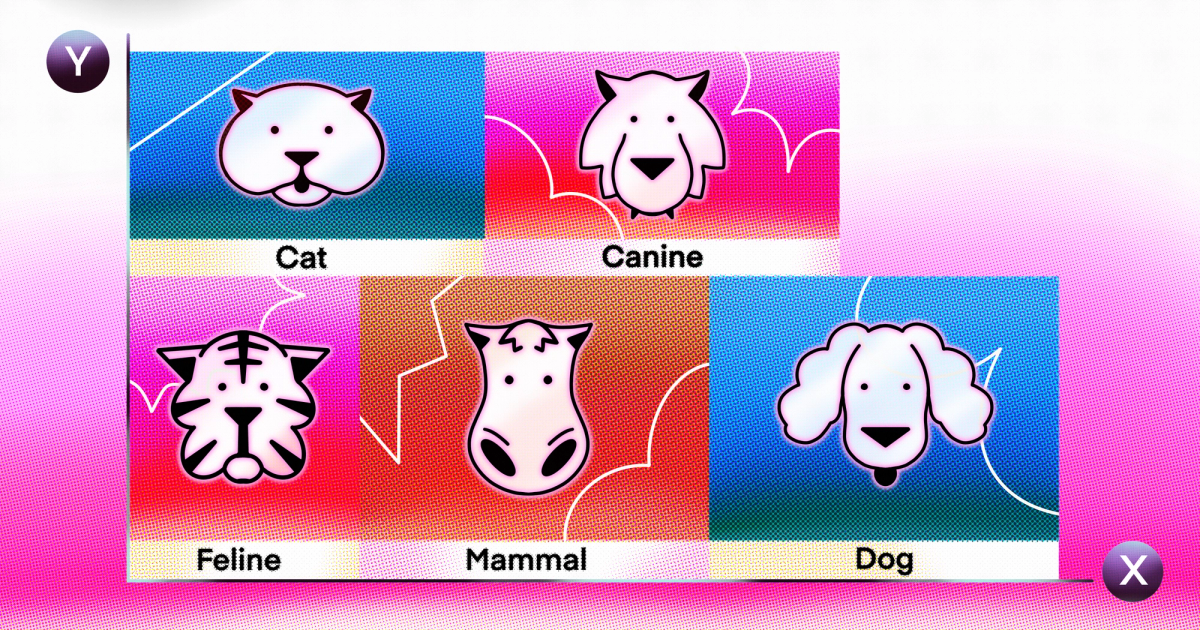

Imagine a two-dimensional floor plan of a single-story library. Our library-goers are all cat lovers, dog lovers, or somewhere in between. We want to shelve cat books near other cat books and dog books near other dog books.

The simplest approach is called a bag-of-words model. We put a dog-axis along one wall and a cat-axis perpendicular to it. Then we count up the instances of the words “cat” and “dog” in each book and shelve it at its point in the (dog_x, cat_y) coordinate system.
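Here is a minimal sketch of that counting step. The `shelf_position` helper and the sample sentence are my own illustration; it matches exact lowercase words only, so “dogs” would not count as “dog”.

```python
import re
from collections import Counter


def shelf_position(text, axes=("dog", "cat")):
    """Count each axis word in the text and return the counts as coordinates."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(words)
    return tuple(counts[word] for word in axes)


book = "The dog chased the cat. The dog barked, and the other dog joined in."
position = shelf_position(book)
print(position)  # (3, 1)
```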

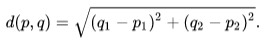

Now let’s think about a simple recommender system. Given a previous book selection, what might we suggest next? With the (overly simplifying!) assumption that our dog and cat dimensions adequately capture the reader’s preferences, we just look for whatever book is closest. In this case, the intuitive sense of closeness is Euclidean distance, the shortest path between two books.

You might notice, however, that this puts the book (dog: 10, cat: 1) much closer to (dog: 1, cat: 10) than to, say, (dog: 200, cat: 1). If we’re more concerned about relative weights than magnitudes for these features, we can normalize each vector by dividing its dog and cat counts by the vector’s length (the square root of the sum of the squared counts) and then compare directions, which gives us the cosine distance. This amounts to projecting our points onto a unit circle and comparing how far apart they sit along the arc: the smaller the angle between two books, the smaller their cosine distance.

There’s a whole zoo of different distance metrics out there, but these two, Euclidean distance and cosine distance, are the ones you’ll run into most often, and they will serve well enough for developing your intuition.
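To make the difference concrete, here is a small sketch that computes both distances for the three books in the example. I’m assuming the common definition of cosine distance as one minus the cosine of the angle between the vectors; the variable names are mine.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_distance(a, b):
    """One minus the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# (dog mentions, cat mentions) for three books
mostly_dog = (10, 1)
mostly_cat = (1, 10)
very_dog = (200, 1)

for name, other in (("mostly_cat", mostly_cat), ("very_dog", very_dog)):
    print(
        name,
        round(euclidean_distance(mostly_dog, other), 2),
        round(cosine_distance(mostly_dog, other), 4),
    )
```

By Euclidean distance the cat-heavy book is the nearer neighbor of the (10, 1) book; by cosine distance the much longer dog book is.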

Latent information

Books that talk about dogs likely use words other than “dog.” Should we consider words like “canine” or “feline” in our shelving scheme? Fitting in “canine” is pretty easy: we’ll just make the shelves really tall and make our canine-axis vertical so it’s perpendicular to the existing two. Now we can shelve our books according to the vector (dog_x, cat_y, canine_z).

It’s easy enough to write down one more term, giving a vector like (dog_x, cat_y, canine_z, feline_i). This next term, though, will break our spatial locality metaphor. We have to build a series of new libraries down the street. And if you’re looking for books with just one more or one fewer “feline” mention, they’re not right there on the shelf anymore; you have to walk down the block to the next library.

In English, a vocabulary of something like 30,000 words works pretty well for this kind of bag-of-words model. In a computational world, we can scale these dimensions up more smoothly than we could in the case of brick-and-mortar libraries, but the problem is similar in principle. Things just get unwieldy at these high dimensions. Algorithms grind to a halt as the combinatorics explode, and the sparsity (most documents will have a count of 0 for most terms) is problematic for statistics and machine learning.

What if we can identify some common semantic sense in words like “cat” and “feline”? We could spare our dimensionality budget and make our shelving scheme more intuitive.

And what about words like “pet” or “mammal”? We can let these contribute to both the cat-axis and the dog-axis of a book they appear in. And if we lost something in collapsing the distinction between “cat” and “feline,” perhaps letting the latter contribute to a “scientific” latent term would recover it.

All we need, then, to project a book into our latent space is a big matrix that defines how much each of the observed terms in our vocabulary contributes to each of our latent terms.
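A toy version of that matrix might look like the sketch below. The vocabulary, the three latent terms, and every weight are hand-picked for illustration; in practice the weights are inferred from a corpus, not written by hand.

```python
vocabulary = ["dog", "canine", "cat", "feline", "pet"]
latent_terms = ["dogness", "catness", "scientific"]

# Hypothetical weights: one row per vocabulary word, one column per latent term.
projection = [
    [1.0, 0.0, 0.0],   # dog
    [0.75, 0.0, 0.5],  # canine
    [0.0, 1.0, 0.0],   # cat
    [0.0, 0.75, 0.5],  # feline
    [0.5, 0.5, 0.0],   # pet
]


def project(word_counts, matrix):
    """Multiply a row vector of word counts by the projection matrix."""
    n_latent = len(matrix[0])
    return [
        sum(count * row[j] for count, row in zip(word_counts, matrix))
        for j in range(n_latent)
    ]


# A book with 4 "dog", 2 "canine", and 2 "pet" mentions.
book_counts = [4, 2, 0, 0, 2]
latent = project(book_counts, projection)
print(dict(zip(latent_terms, latent)))
```

Five observed dimensions collapse to three latent ones, and “pet” nudges the book along both animal axes.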

Latent semantic analysis and Latent Dirichlet allocation

I won’t go into the details here, but there are a couple of different algorithms you can use to infer this matrix from a large enough collection of documents: latent semantic analysis (LSA), which uses the singular value decomposition of the term-document matrix (fancy linear algebra, basically), and latent Dirichlet allocation (LDA), a generative statistical model that places Dirichlet distributions over the topics in each document and the words in each topic.

LDA and LSA are still widely used for topic modeling. You can often find them behind the “read next” links in an article’s footer. But they’re limited to capturing a broad sense of topicality in a document. The models rely on document inputs being long enough to have a representative sample of words. And with the unordered bag-of-words input, there’s no way to capture proximity of words, let alone complex syntax and semantics.

Neural methods

In the examples above, we were using word counts as a proxy for some more nebulous idea of topicality. By projecting these word counts down into an embedding space, we can both reduce the dimensionality and infer latent variables that indicate topicality better than the raw word counts. To do this, though, we need a well-defined algorithm like LSA that can process a corpus of documents to find a good mapping between our bag-of-words input and vectors in our embedding space.

Methods based on neural networks let us generalize this process and break the limitations of LSA. To get embeddings, we just need to:

- Encode an input as a vector.

- Measure the distance between two vectors.

- Provide a ton of training data where we know which inputs should be closer and which should be farther.

The simplest way to do the encoding is to build a map from unique input values to randomly initialized vectors, then adjust the values of these vectors during training.

The neural network training process runs over the training data a bunch of times. A common approach for embeddings is called triplet loss. At each training step, compare a reference input (the anchor) to a positive input (something that should be close to the anchor in our latent space) and a negative input (one we know should be far away). The training objective is to minimize the distance between the anchor and the positive in our embedding space while maximizing the distance to the negative.

An advantage of this approach is that we don’t need to know exact distances in our training data; some kind of binary proxy works well. Going back to our library, for example, we might pick our anchor/positive pairs from sets of books that were checked out together. We throw in a negative example drawn at random from the books outside that set. There’s certainly noise in this training set: library-goers often pick books on various subjects, and our random negatives aren’t guaranteed to be irrelevant. The idea is that with a large enough data set the noise washes out and your embeddings capture some kind of useful signal.
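One common way to write this objective down is shown in the sketch below. It uses Euclidean distance and a `margin` parameter, neither of which is specified in the description above; treat both as assumptions. The loss falls to zero once the negative is farther from the anchor than the positive by at least the margin.

```python
import math


def distance(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Penalize triplets where the negative is not at least `margin`
    farther from the anchor than the positive is."""
    return max(0.0, distance(anchor, positive) - distance(anchor, negative) + margin)


anchor = [0.0, 0.0]
positive = [0.0, 1.0]       # e.g. a book checked out together with the anchor
near_negative = [1.0, 0.0]  # a random book that happens to sit just as close
far_negative = [3.0, 4.0]   # a random book that is already far away

hard = triplet_loss(anchor, positive, near_negative)
easy = triplet_loss(anchor, positive, far_negative)
print(hard, easy)  # 1.0 0.0
```

During training, the gradient of this loss is what nudges the vectors; a triplet that already satisfies the margin contributes nothing.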

Word2vec

The big example here is Word2vec, which uses windowed text sampling to create embeddings for individual words. A sliding window moves through text in the training data, one word at a time. For each position of the window, Word2vec creates a context set. For example, with a window size of three in the sentence “the cat sat on the mat”, (‘the’, ‘cat’, ‘sat’) are grouped together, just like a set of library books a reader had checked out in the example above. During training, this pushes the vectors for ‘the’, ‘cat’, and ‘sat’ all a little closer in the latent space.
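The windowing step on its own is simple enough to sketch. This shows only how the context sets are sampled, with naive whitespace tokenization; it is not Word2vec’s training procedure, and `context_windows` is a name I made up.

```python
def context_windows(text, window_size=3):
    """Slide a fixed-size window over whitespace-separated tokens."""
    tokens = text.split()
    return [
        tuple(tokens[i:i + window_size])
        for i in range(len(tokens) - window_size + 1)
    ]


windows = context_windows("the cat sat on the mat")
for window in windows:
    print(window)
```

The first window is (‘the’, ‘cat’, ‘sat’), matching the example above, and each later window shifts one word to the right.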

A key point here is that we don’t need to spend much time on training data for this model: it uses a large corpus of raw text as-is, and it can extract some surprisingly detailed insights about language.

These word embeddings show the power of vector arithmetic. The famous example is the equation king – man + woman ≈ queen. The vector for ‘king’, minus the vector for ‘man’ and plus the vector for ‘woman’, is very close to the vector for ‘queen’. A relatively simple model, given a large enough training corpus, can give us a surprisingly rich latent space.
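The arithmetic itself is easy to demonstrate with toy numbers. The three-dimensional vectors below are hand-built so that the dimensions read roughly as (royalty, male, female); they are not real Word2vec output, where the relationship holds only approximately and the dimensions have no such tidy labels.

```python
import math

# Hypothetical hand-made vectors: (royalty, male, female)
toy_vectors = {
    "king": [1.0, 1.0, 0.0],
    "queen": [1.0, 0.0, 1.0],
    "man": [0.0, 1.0, 0.0],
    "woman": [0.0, 0.0, 1.0],
    "child": [0.0, 0.5, 0.5],
}


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_word(target, vectors, exclude=()):
    """Return the word whose vector is closest to the target vector."""
    candidates = [word for word in vectors if word not in exclude]
    return min(candidates, key=lambda word: euclidean(target, vectors[word]))


# king - man + woman, computed one dimension at a time
target = [
    k - m + w
    for k, m, w in zip(toy_vectors["king"], toy_vectors["man"], toy_vectors["woman"])
]
answer = nearest_word(target, toy_vectors, exclude=("king", "man", "woman"))
print(answer)  # queen
```

Excluding the input words from the search is the usual convention for this kind of analogy query.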

Dealing with sequences

The inputs I’ve talked about so far have either been one word, as in Word2vec, or a sparse vector of all the words, as in the bag-of-words models behind LSA and LDA. If we can’t capture the sequential nature of a text, we’re not going to get very far in capturing its meaning. “Dog bites man” and “Man bites dog” are two very different headlines!

There’s a family of increasingly sophisticated sequential models that puts us on a steady climb to the attention model and transformers, the core of today’s LLMs.

Fully-recurrent neural network

The basic concept of a recurrent neural network (RNN) is that each token (usually a word or word piece) in our sequence feeds forward into the representation of the next one. We start with the embedding for our first token, t0. For the next token, t1, we take some function (defined by the weights our neural network learns) of the embeddings for t0 and t1, like f(t0, t1). Each new token combines with the representation carried forward from the tokens before it until we reach the final token, whose embedding is used to represent the whole sequence. The simplest version of this architecture is a fully-recurrent neural network (FRNN).
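Here is a minimal sketch of that recurrence. The choice of f (a tanh over two small weight matrices), the two-dimensional token embeddings, and the weight values are all assumptions for illustration; a trained network would learn them. The point is only that the final state depends on token order.

```python
import math

# Hypothetical 2-dimensional token embeddings and untrained, hand-picked weights.
token_embeddings = {"dog": [1.0, 0.0], "bites": [0.0, 1.0], "man": [1.0, 1.0]}
W_state = [[0.5, -0.3], [0.2, 0.4]]  # applied to the running representation
W_token = [[0.7, 0.1], [-0.2, 0.6]]  # applied to the incoming token


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def step(state, token_vector):
    """One recurrence step: combine the running state with the next token."""
    mixed = [a + b for a, b in zip(matvec(W_state, state), matvec(W_token, token_vector))]
    return [math.tanh(value) for value in mixed]


def encode(tokens):
    """Feed tokens through the recurrence; the final state represents the sequence."""
    state = [0.0, 0.0]
    for token in tokens:
        state = step(state, token_embeddings[token])
    return state


dog_bites_man = encode(["dog", "bites", "man"])
man_bites_dog = encode(["man", "bites", "dog"])
print(dog_bites_man)
print(man_bites_dog)
```

A bag-of-words count would give both headlines the same vector; the recurrent encoding does not.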

This architecture has issues with vanishing gradients that limit the neural network training process. Remember, training a neural network works by making small updates to model parameters based on a loss function that expresses how close the model’s prediction for a training item is to the true value. If an early parameter is buried under a series of fractional weights (values between 0 and 1) later in the model, its contribution is multiplied by each of them in turn and quickly approaches zero. Its impact on the loss function becomes negligible, as do any updates to its value.
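A back-of-the-envelope illustration: if we pretend every step scales the signal by the same fractional weight, the effect of an early token shrinks geometrically with sequence length. This is a deliberate simplification (real gradients involve products of matrices and activation derivatives), and `signal_scale` is a made-up helper.

```python
def signal_scale(weight, steps):
    """Factor applied to a signal after passing through `steps` identical weights."""
    return weight ** steps


for steps in (1, 10, 50):
    print(steps, signal_scale(0.5, steps))
```

With a weight of 0.5, ten steps already shrink the signal to under a thousandth of its original size.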

This is a big problem for the long-distance relationships common in text. Consider the sentence “The dog that I adopted from the pound five years ago won the local pet competition.” It’s important to understand that it’s the dog that won the competition, despite the fact that none of these words are adjacent in the sequence.

Long short-term memory

The long short-term memory (LSTM) architecture addresses this vanishing gradient problem. The LSTM uses a long-term memory cell that stably passes information forward in parallel to the RNN, while a set of gates passes information in and out of the memory cell.

Remember, though, that in the machine learning world a larger training set is almost always better. The fact that the LSTM has to calculate a value for each token sequentially before it can start on the next is a big bottleneck: it’s impossible to parallelize these operations across the sequence.

Transformer

The transformer architecture, which is at the heart of the current generation of LLMs, is an evolution of the LSTM concept. Not only does it better capture the context and dependencies between words in a sequence, but it can run in parallel on the GPU with highly optimized tensor operations.

The transformer uses an attention mechanism to weigh the influence of each token in the sequence on every other token. Along with an embedding value for each token, the attention mechanism learns two more vectors for each token: a query vector and a key vector. How close a token’s query vector is to another token’s key vector determines how much of the second token’s value gets added to the first.
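A stripped-down sketch of that weighting step follows, using the widely used scaled dot-product form with a softmax. The toy query, key, and value vectors are invented; in a real transformer all three come from learned projections of the token embeddings, and the mixed result is added back to the token’s existing representation.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def attend(queries, keys, values):
    """For each token, mix all tokens' values, weighted by query-key closeness."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for query in queries:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
        weights = softmax(scores)
        mixed = [
            sum(weight * value[j] for weight, value in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


# Two tokens with hypothetical query, key, and value vectors.
queries = [[2.0, 0.0], [0.0, 2.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]

outputs = attend(queries, keys, values)
print(outputs)
```

Every token’s output can be computed independently of the others, which is what lets the whole sequence run in parallel.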

Because we’ve loosened up the sequence bottleneck, we can afford to stack up many layers of attention. At each layer, the attention contributes a little meaning to each token from the others in the sequence before moving on to the next layer with the updated values.

If you’ve followed enough so far that we can cobble together a spatial intuition for this attention mechanism, I’ll consider this article a success. Let’s give it a try.

A token’s value vector captures its semantic meaning in a high-dimensional embedding space, much like in our library analogy from earlier. The attention mechanism uses another embedding space for the key and query vectors, a sort of semantic plumbing in the floor between each level of the library. The key vector positions the output end of a pipe that pulls some semantic value from the token and pumps it out into the embedding space. The query vector places the input end of a pipe that sucks up the semantic value that other tokens’ key vectors pump into the embedding space nearby and funnels all this into the token’s new representation on the floor above.

To capture an embedding for a full sequence, we just pick one of these tokens to grab a value vector from and use it in the downstream tasks. (Exactly which token this is depends on the specific model. Masked models like BERT use a special [CLS] or [MASK] token, while the autoregressive GPT models use the last token in the sequence.)

So the transformer architecture can encode sequences really well, but if we want it to understand language well, how do we train it? Remember, when we start training, all these vectors are randomly initialized. Our tokens’ value vectors are distributed at random in their semantic embedding space, as are our key and query vectors in theirs. We ask the model to predict a token given the rest of the encoded sequence. The great thing about this task is that we can gather as much text as we can find and turn it into training data. All we have to do is hide one of the tokens in a chunk of text from the model and encode what’s left. We already know what the missing token should be, so we can build a loss function based on how close the prediction is to this known value.
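Turning raw text into this kind of training data can be sketched in a few lines. This hides each token in turn behind a placeholder; the `[MASK]` string, the whitespace tokenization, and the `masked_examples` helper are illustrative choices, not a description of how any particular model prepares its data.

```python
def masked_examples(text, mask_token="[MASK]"):
    """Build (masked_tokens, hidden_token) training pairs from raw text."""
    tokens = text.split()
    examples = []
    for i, target in enumerate(tokens):
        masked = tokens[:i] + [mask_token] + tokens[i + 1:]
        examples.append((masked, target))
    return examples


examples = masked_examples("dog bites man")
for masked, target in examples:
    print(" ".join(masked), "->", target)
```

No human labeling is involved: the text supplies both the question and the answer.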

The other beautiful thing is that the difficulty of predicting the right word scales up smoothly. It goes from a general sense of topicality and word order (something even a simple predictive text model on your phone can do pretty well) up through complex syntax and semantics.

The incredible thing here is that as we scale up the number of parameters in these models (things like the size of the embeddings and the number of transformer layers) and scale up the size of the training data, the models just keep getting better and smarter.

Multi-modal models and beyond

Effective and fast text embedding methods transform textual input into a numeric form, which allows models such as GPT-4 to process immense volumes of data and show a remarkable level of natural language understanding.

A deep, intuitive understanding of text embeddings can help you follow the advances of these models, letting you effectively incorporate them into your own systems without combing through the technical specifications of each new improvement as it emerges.

It’s becoming clear that the benefits of text embedding models can apply to other domains. Tools like Midjourney and DALL-E interpret text instructions by learning to embed images and prompts into a shared embedding space. And a similar approach has been used for natural language instructions in robotics.

A new class of large multi-modal models like Microsoft’s GPT-Vision and Google’s RT-X are jointly trained on text data along with audiovisual inputs and robotics data, thanks, largely, to the ability to effectively map all these disparate forms of data into a shared embedding space.