What Are Adversarial Attacks and How to Protect Your Embedded Devices from Them

A Quick Intro

With very limited options for adversarially robust Deep Neural Networks (DNN) for embedded systems, this article attempts to provide a primer on the field and explores some ready-to-use frameworks.

What is an adversarially robust DNN?

Deep Neural Networks have democratized machine learning and inference. There are two main reasons for that. Firstly, we do not need to find and engineer features from the target dataset; a DNN does that automatically. Secondly, the availability of pre-trained models helps us quickly create task-specific, fine-tuned or transfer-learned models.

Despite their widespread popularity, DNNs have a serious vulnerability, which prevents these networks from being used in real-life safety-critical systems. Although a DNN model is shown to be fairly robust against random noise, it fails against well-designed adversarial perturbations to the input.

In computer vision, adversarial attacks alter an input image with small changes in the pixels, such that these changes remain visually imperceptible to humans, but a DNN fails to infer the image correctly. The consequences can be very serious. The traffic sign recognition module of an autonomous vehicle might interpret a left-turn road sign as a right-turn sign and fall into a trench! An optical character recognizer (OCR) might read numbers wrongly, resulting in financial skulduggery.

Fortunately, many dedicated researchers are working hard to create adversarially robust DNN models that cannot be fooled easily by adversarial perturbations.

Is adversarial robustness important for Embedded Vision?

Absolutely. Cisco predicts that the number of machine-to-machine (M2M) connections will reach 14.7 billion by 2023 [8]; a report [9] from The Linux Foundation expects Edge Computing to have a power footprint of 102 thousand megawatts (MW) by 2028; and Statista predicts 7,702 million Edge-enabled IoT devices by 2030 [10]. Many Edge Computing applications are embedded-vision related and are often deployed in safety-critical systems. However, there are not many options for adversarial robustness on embedded systems. We are actively researching in this area, and I will refer to one of our recent works here.

But before delving deep into adversarial robustness for embedded systems, I will give a brief background on the topic in the next section. Readers already familiar with the subject can skip to the section after it.

Adversarial Robustness Primer

Adversarial robustness is a highly popular and fast-advancing research area. One can get a glimpse of the large body of research articles dedicated to this area in this blog [1].

The biggest concern about an adversarial image is that it is almost impossible to spot that the image has been tampered with.

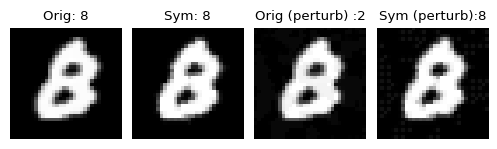

For example, the above image of a handwritten digit 8 from the MNIST dataset [15] is misclassified as a digit 2 by a standard Convolutional Neural Network (CNN) inference. Can you identify any significant difference between the images in Column 1 and Column 3? I could not.

Well, the CNN did. The adversary made some minute changes, which are not significant to the human eye, but they wreak havoc with the CNN decision-making process. However, when we use a robust inference mechanism (denoted by Sym), we see that the attacked image is also correctly classified (Column 4).

Let us think from the other side. Does the image in Column 3 look like a digit 2 from any angle? Unfortunately, the CNN thinks so. This is a bigger problem. An adversary can tweak any image and coax out a decision favorable to it to fulfill its malicious goals.

In fact, this was how adversarial examples were discovered. As recounted by Ian Goodfellow in this lecture (at around 7 minutes), they were exploring the explanations of why a CNN works so well. In that process, they wanted to make small changes to images of different classes, such that these turn into an image of the Airplane class. While they expected some sort of airplane-specific features (say, wings) to manifest in a Ship image, nothing of that sort happened. After some changes to the image, their CNN started to confidently infer an image that completely looked like a Ship as an Airplane.

Now, with that basic overview, let me quickly summarize the key points one needs to know to get started with Adversarial Robustness:

- Adversarial attacks are optimization algorithms to find example inputs for which a DNN makes an incorrect decision. Intriguingly, such examples are not at all difficult to find; rather, they exist almost everywhere in the input manifold. However, a standard, empirical risk minimization-based training method may not include such examples while training a DNN model.

- A good adversarial attack quickly finds the most effective adversarial examples. An effective adversarial image is visually similar (in terms of a distance metric) to the original image, but forces the model to place it on the other side of a decision boundary from the original. The distance metric is either Manhattan distance (L1 norm), Euclidean distance (L2 norm), or Chebyshev distance (L∞ norm).

- If the goal of the adversary is to force the DNN to map the decision to any wrong class, it is called an untargeted attack. In contrast, in a targeted attack, the adversary forces the DNN to map to a particular class desired by the adversary.

- Perturbations are measured as change per pixel, and the maximum allowed change is often denoted by ϵ. For example, an ϵ of 0.3 would mean that each pixel of the clean example (original image) undergoes a maximum change of 0.3 in the corresponding adversarial example.

- The accuracy of a DNN measured with clean images and adversarial images is often called clean accuracy and robust accuracy, respectively.

- An adversary can attack a DNN inference without access to the model or training details, and with only a limited estimate of the test data distribution. This threat model is called a complete blackbox setting [2]. Such an attack can be as simple as attaching a small physical sticker to a real object that the DNN is trying to infer. At the other extreme, a complete whitebox setting refers to an attack model where the model, training details, and even the defense mechanism are known to the adversary. This is considered the acid test for an adversarial defense. Different attacks and defenses are evaluated under intermediate levels between these two settings.

- An adversarial defense aims to thwart adversarial attacks. Adversarial robustness can be achieved by empirical defenses, e.g., adversarial training using adversarial examples, or heuristic defenses, e.g., pre-processing attacked images to remove perturbations. However, these approaches are often validated only through experimental results, using the attacks known at the time. There is no reason why a new, stronger adversary cannot break these defenses later. This is exactly what is happening in adversarial robustness research and is often called a cat-and-mouse game.
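To make the terms in the list above concrete, here is a minimal sketch that is not taken from any of the cited works. It assumes a toy two-class linear softmax classifier instead of a real DNN, and it uses the single-step Fast Gradient Sign Method (FGSM), a standard attack that this article does not otherwise cover. The helper names `perturbation_norms` and `fgsm_linear` are hypothetical. The sketch shows an ϵ-bounded perturbation, the three distance metrics, and the difference between an untargeted and a targeted attack.

```python
import numpy as np

def perturbation_norms(clean, adversarial):
    """Size of the perturbation under the L1, L2 and L-infinity norms."""
    delta = (adversarial - clean).ravel()
    return {
        "l1": float(np.abs(delta).sum()),          # Manhattan distance
        "l2": float(np.sqrt((delta ** 2).sum())),  # Euclidean distance
        "linf": float(np.abs(delta).max()),        # Chebyshev distance
    }

def fgsm_linear(x, label, weights, bias, eps, target=None):
    """One signed-gradient step against a toy linear softmax classifier.

    Untargeted (target=None): increase the loss of the true label.
    Targeted: decrease the loss of the class chosen by the adversary.
    """
    logits = weights @ x + bias
    probs = np.exp(logits - logits.max())
    probs /= probs.sum()
    cls = label if target is None else target
    one_hot = np.zeros_like(probs)
    one_hot[cls] = 1.0
    # Gradient of the cross-entropy loss for class `cls` w.r.t. the input.
    grad = weights.T @ (probs - one_hot)
    step = eps * np.sign(grad)  # every pixel moves by at most eps
    x_adv = x + step if target is None else x - step
    return np.clip(x_adv, 0.0, 1.0)  # stay inside the valid pixel range

# Toy example: two "pixels", two classes (all values are made up).
W = np.array([[1.0, -1.0], [-1.0, 1.0]])
b = np.zeros(2)
x_clean = np.array([0.6, 0.4])
x_adv = fgsm_linear(x_clean, label=0, weights=W, bias=b, eps=0.3)

print("clean prediction:", int(np.argmax(W @ x_clean + b)))      # 0
print("adversarial prediction:", int(np.argmax(W @ x_adv + b)))  # 1
print(perturbation_norms(x_clean, x_adv))
```

On a real DNN the gradient would come from backpropagation rather than a closed-form expression, and stronger attacks iterate this step many times, but the ϵ budget and the norms are measured in the same way.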

The adversarial research community aims to resolve the catching-up game described in the last point above. The current research focus in that field is creating certified and provable defenses. These defenses modify the model operations such that they are provably correct for a range of input values, or perform randomized smoothing of a classifier using Gaussian noise, etc.
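As a rough illustration of the randomized smoothing idea mentioned above, the sketch below classifies many Gaussian-noised copies of an input and returns the majority vote. It is a simplified sketch under my own assumptions: the function name `smoothed_predict`, its parameters, and the toy base classifier are hypothetical, and the statistical test that turns the vote counts into a certified radius is omitted.

```python
import numpy as np

def smoothed_predict(classify, x, sigma=0.25, n_samples=200, n_classes=10, seed=0):
    """Majority vote of `classify` over Gaussian-noised copies of `x`.

    `classify` is any function mapping an image array to an integer label.
    """
    rng = np.random.default_rng(seed)
    votes = np.zeros(n_classes, dtype=int)
    for _ in range(n_samples):
        noisy = x + rng.normal(0.0, sigma, size=x.shape)
        votes[classify(noisy)] += 1
    return int(votes.argmax()), votes

# Toy base classifier (an assumption): "bright" images are class 1.
def toy_classifier(image):
    return int(image.mean() > 0.5)

image = np.full((4, 4), 0.9)
label, votes = smoothed_predict(toy_classifier, image, sigma=0.1, n_classes=2)
print(label, votes)
```

The larger the margin between the top vote count and the runner-up, the larger the perturbation the smoothed classifier can tolerate, which is what certified variants of this method quantify.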

Adversarial Robustness For Embedded Vision Systems?

The adversarial research community is not focusing on the adversarial robustness of embedded systems as of now. This is perfectly normal, as the field itself is still shaping up.

We have seen the same trend with Convolutional Neural Networks. After AlexNet [12] won the ILSVRC challenge in 2012 [14], it took four years before Deep Compression [11] pioneered the production of small pruned models. However, at that time there was no way to fully exploit these pruned models, as there was no established hardware support for exploiting sparsity during CNN inference. Recently, such support is being developed, and researchers are running CNNs even on micro-controllers.

An adversarial defense for an embedded system must have very low overhead in terms of size, runtime memory, CPU cycles, and energy consumption. This immediately disqualifies the best available option, namely, adversarially trained models. These models are huge, ~500 MB for CIFAR-10 (see here). As aptly explained in this paper [3], adversarially trained models tend to learn much more complex decision regions compared to standard models. The provably robust defenses are yet to scale to real-life networks. The heuristic defenses that use a generative adversarial network (GAN) or autoencoders to purify attacked images are too resource-heavy for embedded devices.

That leaves simple heuristic input transformation-based defenses as the only options for resource-limited devices. However, as shown in the seminal works [4–5], all such defenses rely on some form of gradient obfuscation, and can eventually be broken by a strong adaptive attack. The only way such defenses can bring some benefit is by making the transformation very strong. This cannot stop a strong adaptive attack outright, but the time to break the transform would depend on the computing resources available to the adversary.

One such successful work [6] uses a series of simple transforms, e.g., reducing the bit-resolution of color, JPEG compression, noise injection, etc., which together defend against the Backward Pass Differentiable Approximation (BPDA) attack introduced in [4]. Unfortunately, there is no public implementation of this paper to try out.
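Since no public implementation is available, the following is only an illustrative sketch of the general idea of chaining simple input transforms in a random order. It is not the method of [6]: the function names and parameter values are hypothetical, only bit-depth reduction and noise injection are included, and JPEG compression is left out to keep the example dependency-free. Images are assumed to be float arrays scaled to [0, 1].

```python
import numpy as np

def reduce_bit_depth(image, bits=3):
    """Quantize pixel values in [0, 1] to 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return np.round(image * levels) / levels

def inject_noise(image, rng, sigma=0.05):
    """Add Gaussian noise and clip back to the valid pixel range."""
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)

def random_transform_chain(image, rng, bits=3, sigma=0.05):
    """Apply the transforms in a random order drawn from `rng`."""
    transforms = [
        lambda im: reduce_bit_depth(im, bits),
        lambda im: inject_noise(im, rng, sigma),
    ]
    for i in rng.permutation(len(transforms)):
        image = transforms[i](image)
    return image

rng = np.random.default_rng(0)
attacked = rng.random((8, 8))  # stand-in for an attacked image
defended = random_transform_chain(attacked, rng)
print(defended.shape, float(defended.min()), float(defended.max()))
```

The randomness matters: an adversary who cannot predict which transforms run, or in which order, has to average over many possibilities, which raises the cost of an adaptive attack.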

Another recent work [7] uses input discretization to purify adversarial images before DNN inference. In the purification process, the pixel values of an image, lying in an unrestricted real number domain, are transformed into a restricted set of discrete values. As these discrete values are learned from large clean image datasets, the transform is hard to break, and the replacement removes some adversarial perturbations.
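The core of input discretization can be sketched in a few lines. This is a simplified illustration under my own assumptions, not the SymDNN implementation: it uses non-overlapping patches on a single-channel image, a brute-force nearest-centroid search, and a hand-written two-entry codebook, whereas in practice the codebook would be learned (for example by clustering patches of clean images). The function name `purify_by_discretization` is hypothetical.

```python
import numpy as np

def purify_by_discretization(image, centroids, patch_size=2):
    """Replace every patch of `image` by its nearest codebook entry.

    image:     (H, W) float array, H and W divisible by patch_size
    centroids: (K, patch_size * patch_size) codebook of clean patches
    Returns the rebuilt image and the chosen codebook index per patch.
    """
    h, w = image.shape
    p = patch_size
    # Cut the image into non-overlapping p x p patches, one flattened patch per row.
    patches = (image.reshape(h // p, p, w // p, p)
                    .transpose(0, 2, 1, 3)
                    .reshape(-1, p * p))
    # Squared Euclidean distance from every patch to every centroid.
    dists = ((patches[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    symbols = dists.argmin(axis=1)
    # Reassemble the image from the selected centroids.
    rebuilt = (centroids[symbols]
               .reshape(h // p, w // p, p, p)
               .transpose(0, 2, 1, 3)
               .reshape(h, w))
    return rebuilt, symbols

# Hypothetical codebook: an all-dark and an all-bright 2x2 patch.
codebook = np.array([[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]])
clean = np.zeros((4, 4))
clean[:, 2:] = 1.0
attacked = clean.copy()
attacked[0, 0] += 0.2  # small adversarial-style perturbations
attacked[3, 3] -= 0.2
purified, symbols = purify_by_discretization(attacked, codebook)
print(np.array_equal(purified, clean), symbols)
```

Because every output patch comes from the codebook, a perturbation that is too small to push a patch toward a different centroid is removed entirely.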

Some hands-on…

First, let us see a few sample attacks to create some adversarial images.

We can use any existing library, e.g., Foolbox, adversarial-attacks-pytorch, ART, and so on.

Here is an example of attacking and creating adversarial examples with adversarial-attacks-pytorch using the implementation of AutoAttack [13]:

    from torchattacks import AutoAttack

    # Standard AutoAttack ensemble with a perturbation budget of 8/255
    attack = AutoAttack(your_dnn_model, eps=8/255, n_classes=10, version='standard')

    for images, labels in your_testloader:
        attacked_images = attack(images, labels)

Here is another example of attacking and creating adversarial examples with Foolbox using the Projected Gradient Descent attack [3]:

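    import foolbox

    # This follows the Foolbox 2.x call style, where the attack wraps the model;
    # Foolbox 3 instead passes the model when the attack is called.
    attack = foolbox.attacks.PGD(your_dnn_model)

    for images, labels in your_testloader:
        attacked_images = attack(images, labels)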

These attacked_images can be used to perform inference with a DNN.
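Once attacked images are available, the usual next step is to compare clean accuracy with robust accuracy. The sketch below is library-agnostic and rests on my own assumptions: `predict` is any function returning integer labels for a batch, `attack` is any function returning attacked images for a batch, and the toy classifier and toy attack are made up purely to exercise the helpers.

```python
import numpy as np

def accuracy(predict, images, labels):
    """Fraction of images whose predicted label matches the true label."""
    return float((predict(images) == labels).mean())

def clean_and_robust_accuracy(predict, attack, images, labels):
    """Accuracy on the original images and on their attacked versions."""
    clean_acc = accuracy(predict, images, labels)
    robust_acc = accuracy(predict, attack(images, labels), labels)
    return clean_acc, robust_acc

# Toy stand-ins (assumptions): a brightness-threshold classifier and an
# attack that shifts every pixel by 0.1 toward the wrong class.
def toy_predict(batch):
    return (batch.mean(axis=1) > 0.5).astype(int)

def toy_attack(batch, labels):
    direction = np.where(labels[:, None] == 1, -1.0, 1.0)
    return np.clip(batch + 0.1 * direction, 0.0, 1.0)

images = np.array([[0.9, 0.9], [0.55, 0.55], [0.1, 0.1], [0.45, 0.45]])
labels = np.array([1, 1, 0, 0])
print(clean_and_robust_accuracy(toy_predict, toy_attack, images, labels))
# (1.0, 0.5): the two images close to the threshold flip under attack
```

With a real model, `predict` would wrap the DNN forward pass and `attack` would wrap one of the library attacks shown above.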

Next, let us try defending against the attacks with SymDNN. The first step in using this library is to clone or download the repository from this link.

The next step is installing the dependencies for SymDNN. The steps for this and the optional Python virtual environment setup are described in the README file of the repository.

Next, we need to set up some default parameters for discretization. The default values provided work fine for several datasets and attacks. We can tweak these later for the inference robustness-latency-memory tradeoff.

    # For similarity search
    import faiss
    import sys

    sys.path.insert(1, './core')

    # Function for purification of adversarial perturbation
    from patchutils import symdnn_purify

    # Set up the robustness-latency-memory tradeoff parameters (the defaults
    # are good enough), then call the purification function on the attacked image
    purified_image = symdnn_purify(attacked_image, n_clusters, index, centroid_lut, patch_size, stride, channel_count)

These purified images can be used for further inference using the DNN. This purification function is extremely low-overhead in terms of processing and memory, making it suitable for embedded systems.

The SymDNN repository contains many examples on CIFAR-10, ImageNet, and MNIST under several attacks and different threat models.

Here is an example of attacking and creating an adversarial example with the BPDA attack [4] implementation in SymDNN, taken from here:

    bpda_adversary16 = BPDAattack(your_dnn_model, None, None, epsilon=16/255, learning_rate=0.5, max_iterations=100)

    for images, labels in your_testloader:
        attacked_images = bpda_adversary16.generate(images, labels)

The attack strength, iterations, and other hyperparameters are explained in the original paper [4] and are generally dependent on the application scenario.
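To show what these hyperparameters control, here is a minimal sketch of the BPDA idea from [4] on a toy problem. It is not the SymDNN `BPDAattack` code: the classifier is a two-class linear softmax model, the defense is a simple pixel quantizer, and the function names and default values are hypothetical. The forward pass goes through the real, non-differentiable defense, while the backward pass treats the defense as the identity function.

```python
import numpy as np

def quantize(x, levels=10):
    """Toy non-differentiable defense: snap each pixel to a coarse grid."""
    return np.round(x * levels) / levels

def bpda_attack(x, label, weights, bias, defense,
                epsilon=0.3, learning_rate=0.1, max_iterations=100):
    """Iterative untargeted attack through `defense`, BPDA style."""
    x_adv = x.copy()
    for _ in range(max_iterations):
        z = defense(x_adv)                   # forward: real defense
        logits = weights @ z + bias
        if int(np.argmax(logits)) != label:  # defended model is fooled
            break
        probs = np.exp(logits - logits.max())
        probs /= probs.sum()
        one_hot = np.zeros_like(probs)
        one_hot[label] = 1.0
        # Loss gradient w.r.t. the defended input z.
        grad_z = weights.T @ (probs - one_hot)
        # BPDA: approximate the defense by the identity in the backward
        # pass, so grad_z is used as the gradient w.r.t. x_adv.
        x_adv = x_adv + learning_rate * np.sign(grad_z)
        x_adv = np.clip(x_adv, x - epsilon, x + epsilon)  # epsilon budget
        x_adv = np.clip(x_adv, 0.0, 1.0)                  # valid pixels
    return x_adv

W = np.array([[1.0, -1.0], [-1.0, 1.0]])
b = np.zeros(2)
x = np.array([0.6, 0.4])
x_adv = bpda_attack(x, label=0, weights=W, bias=b, defense=quantize)
print(int(np.argmax(W @ quantize(x_adv) + b)))  # 1: the defense is bypassed
```

Here `epsilon` caps the total change per pixel, `learning_rate` sets the step taken in each iteration, and `max_iterations` bounds the adversary's effort, which is why suitable values depend on the application scenario.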

Conclusions

Although Adversarial Robustness is a well-investigated area, we believe that there are several dots to connect before it can be successfully deployed in the field, especially in Edge Computing scenarios. The SymDNN defense we presented is not capable of undoing all attacks. However, it is something that can be readily used to make embedded vision systems robust against some adversarial attacks. With embedded AI (Artificial Intelligence) poised to grow rapidly in the future, having something at hand to protect these systems is better than nothing.

References

[1] Nicholas Carlini, A Complete List of All (arXiv) Adversarial Example Papers (2019), https://nicholas.carlini.com/writing/2019/all-adversarial-example-papers.html

[2] Nicolas Papernot, Patrick McDaniel, Ian Goodfellow, Somesh Jha, Z. Berkay Celik, and Ananthram Swami, Practical black-box attacks against machine learning (2017), In ACM Asia Conference on Computer and Communications Security 2017

[3] Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu, Towards deep learning models resistant to adversarial attacks (2018), In ICLR 2018

[4] Anish Athalye, Nicholas Carlini, and David A. Wagner, Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples (2018), In ICML 2018

[5] Florian Tramèr, Nicholas Carlini, Wieland Brendel, and Aleksander Madry, On adaptive attacks to adversarial example defenses (2020), In NeurIPS 2020

[6] Edward Raff, Jared Sylvester, Steven Forsyth, and Mark McLean, Barrage of random transforms for adversarially robust defense (2019), In CVPR 2019

[7] Swarnava Dey, Pallab Dasgupta, and Partha P Chakrabarti, SymDNN: Simple & Effective Adversarial Robustness for Embedded Systems (2022), In CVPR Embedded Vision Workshop 2022

[8] Cisco, Cisco annual internet report (2018–2023), https://www.cisco.com/c/en/us/solutions/collateral/executive-perspectives/annual-internet-report/white-paper-c11-741490.html

[9] The Linux Foundation, State of the edge 2021 (2021), https://stateoftheedge.com/reports/state-of-the-edge-report-2021/

[10] Statista, Number of edge enabled internet of things (IoT) devices worldwide from 2020 to 2030, by market (2022), https://www.statista.com/statistics/1259878/edge-enabled-iot-device-market-worldwide/

[11] Song Han, Huizi Mao, and William J. Dally, Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding (2016), In ICLR 2016

[12] A. Krizhevsky, I. Sutskever, and G. E. Hinton, ImageNet classification with deep convolutional neural networks (2012), In NeurIPS 2012

[13] Francesco Croce and Matthias Hein, Reliable evaluation of adversarial robustness with an ensemble of diverse parameter-free attacks (2020), In ICML 2020

[14] Olga Russakovsky*, Jia Deng*, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei (* = equal contribution), ImageNet Large Scale Visual Recognition Challenge (2015), IJCV 2015

[15] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, Gradient-based learning applied to document recognition (1998), Proceedings of the IEEE, 86(11):2278–2324, November 1998