This put up discusses 4 main open issues in NLP primarily based on an skilled survey and a panel dialogue on the Deep Studying Indaba.

That is the second weblog put up in a two-part sequence. The sequence expands on the Frontiers of Pure Language Processing session organized by Herman Kamper, Stephan Gouws, and me on the Deep Studying Indaba 2018. Slides of your entire session could be discovered right here. The first put up mentioned main latest advances in NLP specializing in neural network-based strategies. This put up discusses main open issues in NLP. You could find a recording of the panel dialogue this put up is predicated on right here.

Within the weeks main as much as the Indaba, we requested NLP specialists various easy however huge questions. Primarily based on the responses, we recognized the 4 issues that have been talked about most frequently:

- Pure language understanding

- NLP for low-resource situations

- Reasoning about massive or a number of paperwork

- Datasets, issues, and analysis

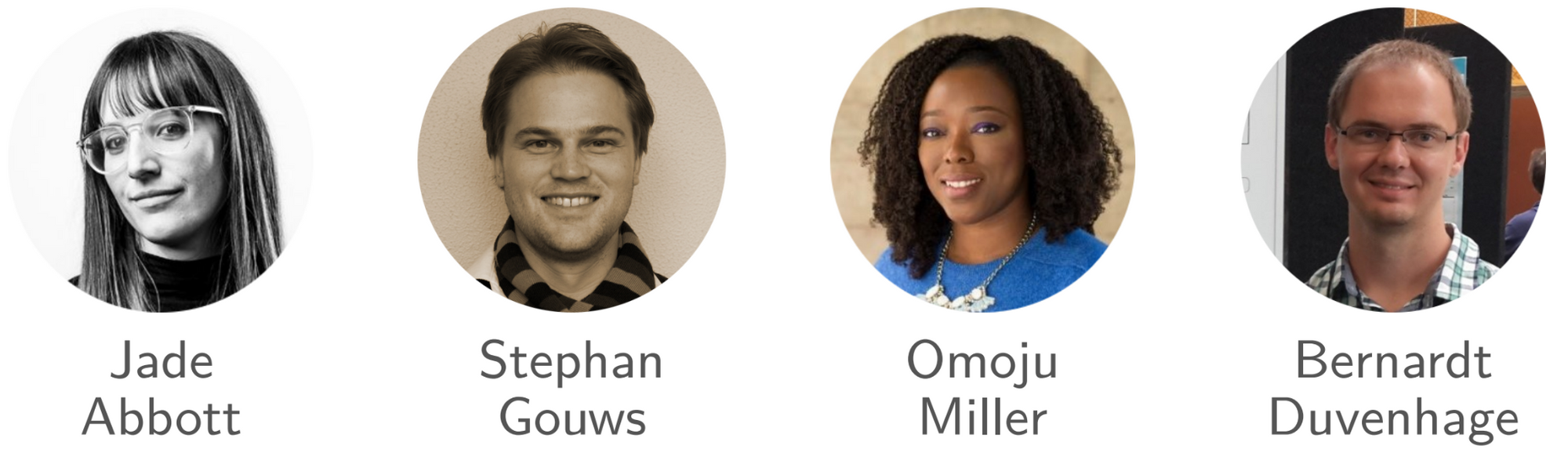

We mentioned these issues throughout a panel dialogue. This text is generally primarily based on the responses from our specialists (that are effectively value studying) and ideas of my fellow panel members Jade Abbott, Stephan Gouws, Omoju Miller, and Bernardt Duvenhage. I’ll goal to supply context round among the arguments, for anybody interested by studying extra.

I believe the largest open issues are all associated to pure language

understanding. […] we should always develop methods that learn and

perceive textual content the way in which an individual does, by forming a illustration of

the world of the textual content, with the brokers, objects, settings, and the

relationships, objectives, wishes, and beliefs of the brokers, and all the things else

that people create to know a chunk of textual content. Till we are able to try this, all of our progress is in bettering our methods’ means to do sample matching.

– Kevin Gimpel

Many specialists in our survey argued that the issue of pure language understanding (NLU) is central as it’s a prerequisite for a lot of duties equivalent to pure language technology (NLG). The consensus was that none of our present fashions exhibit ‘actual’ understanding of pure language.

Innate biases vs. studying from scratch A key query is what biases and construction ought to we construct explicitly into our fashions to get nearer to NLU. Related concepts have been mentioned on the Generalization workshop at NAACL 2018, which Ana Marasovic reviewed for The Gradient and I reviewed right here. Many responses in our survey talked about that fashions ought to incorporate frequent sense. As well as, dialogue methods (and chat bots) have been talked about a number of instances.

However, for reinforcement studying, David Silver argued that you’d in the end need the mannequin to be taught all the things by itself, together with the algorithm, options, and predictions. A lot of our specialists took the other view, arguing that it is best to truly construct in some understanding in your mannequin. What ought to be realized and what ought to be hard-wired into the mannequin was additionally explored within the debate between Yann LeCun and Christopher Manning in February 2018.

Program synthesis Omoju argued that incorporating understanding is troublesome so long as we don’t perceive the mechanisms that really underly NLU and tips on how to consider them. She argued that we would need to take concepts from program synthesis and mechanically be taught packages primarily based on high-level specs as an alternative. Concepts like this are associated to neural module networks and neural programmer-interpreters.

She additionally steered we should always look again to approaches and frameworks that have been initially developed within the 80s and 90s, equivalent to FrameNet and merge these with statistical approaches. This could assist us infer frequent sense-properties of objects, equivalent to whether or not a automobile is a car, has handles, and so on. Inferring such frequent sense information has additionally been a spotlight of latest datasets in NLP.

Embodied studying Stephan argued that we should always use the data in out there structured sources and information bases equivalent to Wikidata. He famous that people be taught language by expertise and interplay, by being embodied in an setting. One may argue that there exists a single studying algorithm that if used with an agent embedded in a sufficiently wealthy setting, with an applicable reward construction, may be taught NLU from the bottom up. Nonetheless, the compute for such an setting can be great. For comparability, AlphaGo required an enormous infrastructure to resolve a well-defined board sport. The creation of a general-purpose algorithm that may proceed to be taught is expounded to lifelong studying and to common downside solvers.

Whereas many individuals suppose that we’re headed within the course of embodied studying, we should always thus not underestimate the infrastructure and compute that may be required for a full embodied agent. In mild of this, ready for a full-fledged embodied agent to be taught language appears ill-advised. Nonetheless, we are able to take steps that may convey us nearer to this excessive, equivalent to grounded language studying in simulated environments, incorporating interplay, or leveraging multimodal knowledge.

Emotion In direction of the tip of the session, Omoju argued that will probably be very troublesome to include a human component regarding emotion into embodied brokers. Emotion, nonetheless, may be very related to a deeper understanding of language. However, we would not want brokers that really possess human feelings. Stephan acknowledged that the Turing check, in spite of everything, is outlined as mimicry and sociopaths—whereas having no feelings—can idiot individuals into considering they do. We should always thus have the ability to discover options that don’t have to be embodied and should not have feelings, however perceive the feelings of individuals and assist us resolve our issues. Certainly, sensor-based emotion recognition methods have constantly improved—and we now have additionally seen enhancements in textual emotion detection methods.

Cognitive and neuroscience An viewers member requested how a lot information of neuroscience and cognitive science are we leveraging and constructing into our fashions. Data of neuroscience and cognitive science could be nice for inspiration and used as a tenet to form your considering. For instance, a number of fashions have sought to mimic people’ means to suppose quick and gradual. AI and neuroscience are complementary in lots of instructions, as Surya Ganguli illustrates in this put up.

Omoju beneficial to take inspiration from theories of cognitive science, such because the cognitive improvement theories by Piaget and Vygotsky. She additionally urged everybody to pursue interdisciplinary work. This sentiment was echoed by different specialists. As an illustration, Felix Hill beneficial to go to cognitive science conferences.

Coping with low-data settings (low-resource languages, dialects (together with social media textual content “dialects”), domains, and so on.). This isn’t a totally “open” downside in that there are already a whole lot of promising concepts on the market; however we nonetheless do not have a common answer to this common downside.

– Karen Livescu

The second subject we explored was generalisation past the coaching knowledge in low-resource situations. Given the setting of the Indaba, a pure focus was low-resource languages. The primary query targeted on whether or not it’s essential to develop specialised NLP instruments for particular languages, or it is sufficient to work on common NLP.

Common language mannequin Bernardt argued that there are common commonalities between languages that may very well be exploited by a common language mannequin. The problem then is to acquire sufficient knowledge and compute to coach such a language mannequin. That is carefully associated to latest efforts to coach a cross-lingual Transformer language mannequin and cross-lingual sentence embeddings.

Cross-lingual representations Stephan remarked that not sufficient persons are engaged on low-resource languages. There are 1,250-2,100 languages in Africa alone, most of which have obtained scarce consideration from the NLP neighborhood. The query of specialised instruments additionally relies on the NLP job that’s being tackled. The principle situation with present fashions is pattern effectivity. Cross-lingual phrase embeddings are sample-efficient as they solely require phrase translation pairs and even solely monolingual knowledge. They align phrase embedding areas sufficiently effectively to do coarse-grained duties like subject classification, however do not enable for extra fine-grained duties equivalent to machine translation. Current efforts however present that these embeddings kind an vital constructing lock for unsupervised machine translation.

Extra advanced fashions for higher-level duties equivalent to query answering alternatively require 1000’s of coaching examples for studying. Transferring duties that require precise pure language understanding from high-resource to low-resource languages remains to be very difficult. With the event of cross-lingual datasets for such duties, equivalent to XNLI, the event of sturdy cross-lingual fashions for extra reasoning duties ought to hopefully change into simpler.

Advantages and impression One other query enquired—given that there’s inherently solely small quantities of textual content out there for under-resourced languages—whether or not the advantages of NLP in such settings may even be restricted. Stephan vehemently disagreed, reminding us that as ML and NLP practitioners, we usually are likely to view issues in an data theoretic manner, e.g. as maximizing the chance of our knowledge or bettering a benchmark. Taking a step again, the precise motive we work on NLP issues is to construct methods that break down limitations. We need to construct fashions that allow individuals to learn information that was not written of their language, ask questions on their well being once they do not have entry to a physician, and so on.

Given the potential impression, constructing methods for low-resource languages is actually some of the vital areas to work on. Whereas one low-resource language could not have a whole lot of knowledge, there’s a lengthy tail of low-resource languages; most individuals on this planet actually converse a language that’s within the low-resource regime. We thus actually need to discover a solution to get our methods to work on this setting.

Jade opined that it’s virtually ironic that as a neighborhood we now have been specializing in languages with a whole lot of knowledge as these are the languages which can be effectively taught all over the world. The languages we should always actually concentrate on are the low-resource languages the place not a lot knowledge is offered. The wonderful thing about the Indaba is that persons are working and making progress on such low-resource languages. Given the shortage of information, even easy methods equivalent to bag-of-words could have a big real-world impression. Etienne Barnard, one of many viewers members, famous that he noticed a distinct impact in real-world speech processing: Customers have been typically extra motivated to make use of a system in English if it really works for his or her dialect in comparison with utilizing a system in their very own language.

Incentives and expertise One other viewers member remarked that persons are incentivized to work on extremely seen benchmarks, equivalent to English-to-German machine translation, however incentives are lacking for engaged on low-resource languages. Stephan steered that incentives exist within the type of unsolved issues. Nonetheless, expertise will not be out there in the best demographics to deal with these issues. What we should always concentrate on is to show expertise like machine translation in an effort to empower individuals to resolve these issues. Tutorial progress sadly would not essentially relate to low-resource languages. Nonetheless, if cross-lingual benchmarks change into extra pervasive, then this must also result in extra progress on low-resource languages.

Information availability Jade lastly argued {that a} huge situation is that there aren’t any datasets out there for low-resource languages, equivalent to languages spoken in Africa. If we create datasets and make them simply out there, equivalent to internet hosting them on openAFRICA, that may incentivize individuals and decrease the barrier to entry. It’s typically adequate to make out there check knowledge in a number of languages, as this can enable us to guage cross-lingual fashions and monitor progress. One other knowledge supply is the South African Centre for Digital Language Sources (SADiLaR), which gives sources for most of the languages spoken in South Africa.

Representing massive contexts effectively. Our present fashions are principally primarily based on recurrent neural networks, which can not characterize longer contexts effectively. […] The stream of labor on graph-inspired RNNs is probably promising, although has solely seen modest enhancements and has not been extensively adopted as a result of them being a lot much less straight-forward to coach than a vanilla RNN.

– Isabelle Augenstein

One other huge open downside is reasoning about massive or a number of paperwork. The latest NarrativeQA dataset is an efficient instance of a benchmark for this setting. Reasoning with massive contexts is carefully associated to NLU and requires scaling up our present methods dramatically, till they will learn complete books and film scripts. A key query right here—that we didn’t have time to debate throughout the session—is whether or not we’d like higher fashions or simply practice on extra knowledge.

Endeavours equivalent to OpenAI 5 present that present fashions can do quite a bit if they’re scaled as much as work with much more knowledge and much more compute. With adequate quantities of information, our present fashions may equally do higher with bigger contexts. The issue is that supervision with massive paperwork is scarce and costly to acquire. Much like language modelling and skip-thoughts, we may think about a document-level unsupervised job that requires predicting the subsequent paragraph or chapter of a e-book or deciding which chapter comes subsequent. Nonetheless, this goal is probably going too sample-inefficient to allow studying of helpful representations.

A extra helpful course thus appears to be to develop strategies that may characterize context extra successfully and are higher in a position to preserve monitor of related data whereas studying a doc. Multi-document summarization and multi-document query answering are steps on this course. Equally, we are able to construct on language fashions with improved reminiscence and lifelong studying capabilities.

Maybe the largest downside is to correctly outline the issues themselves. And by correctly defining an issue, I imply constructing datasets and analysis procedures which can be applicable to measure our progress in direction of concrete objectives. Issues can be simpler if we may cut back all the things to Kaggle model competitions!

– Mikel Artetxe

We didn’t have a lot time to debate issues with our present benchmarks and analysis settings however you will see that many related responses in our survey. The ultimate query requested what crucial NLP issues are that ought to be tackled for societies in Africa. Jade replied that crucial situation is to resolve the low-resource downside. Notably with the ability to use translation in schooling to allow individuals to entry no matter they need to know in their very own language is tremendously vital.

The session concluded with common recommendation from our specialists on different questions that we had requested them, equivalent to “What, if something, has led the sector within the incorrect course?” and “What recommendation would you give a postgraduate pupil in NLP beginning their mission now?” You could find responses to all questions in the survey.

Deep Studying Indaba 2019

In case you are interested by engaged on low-resource languages, think about attending the Deep Studying Indaba 2019, which takes place in Nairobi, Kenya from 25-31 August 2019.

Credit score: Title picture textual content is from the NarrativeQA dataset. The picture is from the slides of the NLP session.