I’ve written before about how much I loved Andrew Ng’s Coursera Machine Learning course. However, I also mentioned that I thought the course was lacking a bit in the area of recommender systems. After learning basic models for regression and classification, recommender systems likely complete the triumvirate of machine learning pillars for data science.

Working at an ecommerce company, I think a lot about recommender systems and would like to provide an introduction to basic recommendation models. The goal of a recommendation model is to present a ranked list of objects given an input object. Typically, this ranking is based on the similarity between the input object and the listed objects. To be less vague, one often wants to either present similar products to a given product or present products that are personally recommended for a given user.

The astounding thing is that if one has enough user-to-product data (ratings, purchases, etc.), then no other information is necessary to make decent recommendations. This is quite different from regression and classification problems, where one must explore various features in order to boost a model’s predictive power.

For this introduction, I’ll use the MovieLens dataset, a classic dataset for training recommendation models. It can be obtained from the GroupLens website. There are various datasets, but the one that I will use below consists of 100,000 movie ratings by users (on a 1-5 scale). The main data file consists of a tab-separated list with user-id (starting at 1), item-id (starting at 1), rating, and timestamp as the four fields. We can use bash commands in the Jupyter notebook to download the file and then read it in with pandas.

import numpy as np

import pandas as pd

# !curl -O http://files.grouplens.org/datasets/movielens/ml-100k.zip
# !unzip ml-100k.zip

allbut.pl*        u1.base  u3.base  u5.base  ub.base  u.info
grid_search.cpkl  u1.test  u3.test  u5.test  ub.test  u.item
mku.sh*           u2.base  u4.base  ua.base  u.data   u.occupation
README            u2.test  u4.test  ua.test  u.genre  u.user

!head u.data

!echo # line break

!wc -l u.data

196 242 3 881250949

186 302 3 891717742

22 377 1 878887116

244 51 2 880606923

166 346 1 886397596

298 474 4 884182806

115 265 2 881171488

253 465 5 891628467

305 451 3 886324817

6 86 3 883603013

100000 u.data

names = ['user_id', 'item_id', 'rating', 'timestamp']

df = pd.read_csv('u.data', sep='\t', names=names)

df.head()

| | user_id | item_id | rating | timestamp |
|---|---|---|---|---|
| 0 | 196 | 242 | 3 | 881250949 |
| 1 | 186 | 302 | 3 | 891717742 |
| 2 | 22 | 377 | 1 | 878887116 |
| 3 | 244 | 51 | 2 | 880606923 |
| 4 | 166 | 346 | 1 | 886397596 |

n_users = df.user_id.unique().shape[0]
n_items = df.item_id.unique().shape[0]
print(str(n_users) + ' users')
print(str(n_items) + ' items')

943 users
1682 items

Most recommendation models consist of building a user-by-item matrix with some sort of “interaction” number in each cell. If one includes the numerical ratings that users give items, then this is called an explicit feedback model. Alternatively, one may include implicit feedback: actions by a user that signify a positive or negative preference for a given item (such as viewing the item online). These two scenarios often must be treated differently.

In the case of the MovieLens dataset, we have ratings, so we will focus on explicit feedback models. First, we must construct our user-item matrix. We can easily map user/item IDs to user/item indices by removing the “Python starts at 0” offset between them.

ratings = np.zeros((n_users, n_items))
for row in df.itertuples():
    # row[0] is the DataFrame index; IDs are 1-based, array indices 0-based
    ratings[row[1]-1, row[2]-1] = row[3]
ratings

array([[ 5., 3., 4., ..., 0., 0., 0.],

[ 4., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

...,

[ 5., 0., 0., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 0., 0., 0.],

[ 0., 5., 0., ..., 0., 0., 0.]])
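As a rough sketch of the same ID-to-index mapping without a Python loop, the three columns can be pulled out as arrays and assigned in one fancy-indexing step. The array names and the three toy ratings below are made up for illustration, and the sketch assumes each (user, item) pair appears at most once.

```python
import numpy as np

# Toy stand-in for the user_id, item_id and rating columns (1-based IDs).
user_ids = np.array([1, 2, 2])
item_ids = np.array([1, 1, 3])
values = np.array([5., 4., 2.])

# Subtract 1 to move from 1-based IDs to 0-based array indices,
# then write every rating in a single assignment.
toy_ratings = np.zeros((user_ids.max(), item_ids.max()))
toy_ratings[user_ids - 1, item_ids - 1] = values

print(toy_ratings)
```

On the real data the same pattern would use the columns of `df` in place of the toy arrays.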

sparsity = float(len(ratings.nonzero()[0]))
sparsity /= (ratings.shape[0] * ratings.shape[1])
sparsity *= 100
print('Sparsity: {:4.2f}%'.format(sparsity))

Sparsity: 6.30%

In this dataset, every user has rated at least 20 movies, which results in a reasonable sparsity of 6.3%. This means that 6.3% of the user-item ratings have a value. Note that, although we filled in missing ratings as 0, we should not assume these values to truly be zero. More appropriately, they are just empty entries. We will split our data into training and test sets by removing 10 ratings per user from the training set and placing them in the test set.

def train_test_split(ratings):
    test = np.zeros(ratings.shape)
    train = ratings.copy()
    for user in range(ratings.shape[0]):
        # Pick 10 of this user's rated items to hold out
        test_ratings = np.random.choice(ratings[user, :].nonzero()[0],
                                        size=10,
                                        replace=False)
        train[user, test_ratings] = 0.
        test[user, test_ratings] = ratings[user, test_ratings]

    # Test and training are truly disjoint
    assert(np.all((train * test) == 0))
    return train, test

train, test = train_test_split(ratings)
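Because the split is random, the MSE values reported later will shift a little from run to run. A minimal sketch of a seeded variant, checked on a toy matrix, is below; `split_per_user`, its `n_test` and `seed` parameters, and the toy data are hypothetical names for illustration, not part of the original notebook.

```python
import numpy as np

def split_per_user(ratings, n_test=10, seed=0):
    """Hold out n_test rated entries per user (hypothetical helper)."""
    rng = np.random.RandomState(seed)
    train = ratings.copy()
    test = np.zeros(ratings.shape)
    for user in range(ratings.shape[0]):
        rated = ratings[user, :].nonzero()[0]
        held_out = rng.choice(rated, size=n_test, replace=False)
        train[user, held_out] = 0.
        test[user, held_out] = ratings[user, held_out]
    return train, test

# Toy matrix: 4 users x 6 items, every entry rated between 1 and 5.
toy = np.arange(24).reshape(4, 6) % 5 + 1.0
toy_train, toy_test = split_per_user(toy, n_test=2)

# Each user contributes exactly n_test held-out ratings ...
print((toy_test != 0).sum(axis=1))
# ... and train plus test together reproduce the original matrix.
print(np.array_equal(toy_train + toy_test, toy))
```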

Collaborative filtering

We will focus on collaborative filtering models today, which can be generally split into two classes: user- and item-based collaborative filtering. In either scenario, one builds a similarity matrix. For user-based collaborative filtering, the user-similarity matrix will consist of some distance metric that measures the similarity between any pair of users. Likewise, the item-similarity matrix will measure the similarity between any pair of items.

A common distance metric is cosine similarity. The metric can be thought of geometrically if one treats a given user’s (item’s) row (column) of the ratings matrix as a vector. For user-based collaborative filtering, two users’ similarity is measured as the cosine of the angle between the two users’ vectors. For users ${u}$ and ${u^{\prime}}$, the cosine similarity is

$$
sim(u, u^{\prime}) =
\cos(\theta) =
\frac{\textbf{r}_{u} \cdot \textbf{r}_{u^{\prime}}}{\| \textbf{r}_{u} \| \| \textbf{r}_{u^{\prime}} \|} =
\sum_{i} \frac{r_{ui}r_{u^{\prime}i}}{\sqrt{\sum\limits_{i} r_{ui}^2} \sqrt{\sum\limits_{i} r_{u^{\prime}i}^2} }
$$

This can be written as a for-loop in code, but the Python code will run quite slowly; instead, one should try to express any equation in terms of NumPy functions. I’ve included a slow and a fast version of the cosine similarity function below. The slow function took so long that I eventually canceled it because I got tired of waiting. The fast function, on the other hand, takes around 200 ms.

The cosine similarity will range from 0 to 1 in our case (because there are no negative ratings). Notice that it is symmetric and has ones along the diagonal.

def slow_similarity(ratings, kind='user'):
    # Rows of `vectors` are the objects being compared
    if kind == 'user':
        vectors = ratings
    elif kind == 'item':
        vectors = ratings.T
    n, m = vectors.shape
    sim = np.zeros((n, n))
    for u in range(n):
        for uprime in range(n):
            rui_sqrd = 0.
            ruprimei_sqrd = 0.
            for i in range(m):
                # Accumulate the dot product and both squared norms
                sim[u, uprime] += vectors[u, i] * vectors[uprime, i]
                rui_sqrd += vectors[u, i] ** 2
                ruprimei_sqrd += vectors[uprime, i] ** 2
            sim[u, uprime] /= np.sqrt(rui_sqrd * ruprimei_sqrd)
    return sim

def fast_similarity(ratings, kind='user', epsilon=1e-9):
    # epsilon -> small number for handling divide-by-zero errors
    if kind == 'user':
        sim = ratings.dot(ratings.T) + epsilon
    elif kind == 'item':
        sim = ratings.T.dot(ratings) + epsilon
    norms = np.array([np.sqrt(np.diagonal(sim))])
    return (sim / norms / norms.T)

#%timeit slow_similarity(train, kind='user')

%timeit fast_similarity(train, kind='user')

1 loop, best of 3: 206 ms per loop

user_similarity = fast_similarity(train, kind='user')
item_similarity = fast_similarity(train, kind='item')
print(item_similarity[:4, :4])

[[ 1. 0.4142469 0.33022352 0.44198521]

[ 0.4142469 1. 0.26600176 0.48216178]

[ 0.33022352 0.26600176 1. 0.3011288 ]

[ 0.44198521 0.48216178 0.3011288 1. ]]
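To make the formula concrete, here is a small worked check on a made-up 3-user matrix. The helper `cosine_sim_rows` is an illustrative restatement of the fast approach, not a library function; the toy numbers are assumptions chosen so that one pair can be verified by hand.

```python
import numpy as np

def cosine_sim_rows(mat, epsilon=1e-9):
    """Cosine similarity between every pair of rows of mat."""
    sim = mat.dot(mat.T) + epsilon
    norms = np.sqrt(np.diagonal(sim))
    return sim / norms[:, np.newaxis] / norms[np.newaxis, :]

toy = np.array([[5., 3., 0.],
                [4., 0., 0.],
                [0., 0., 2.]])
toy_sim = cosine_sim_rows(toy)

# Users 0 and 1 by hand: (5*4) / (sqrt(5^2 + 3^2) * sqrt(4^2))
by_hand = 20. / (np.sqrt(34.) * 4.)
print(toy_sim[0, 1], by_hand)

# Symmetric, ones on the diagonal, and users with no items in common score ~0.
print(np.allclose(toy_sim, toy_sim.T), np.allclose(np.diagonal(toy_sim), 1.0))
```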

With our similarity matrix in hand, we can now predict the ratings that were not included with the data. We can then compare these predictions with the test data to attempt to validate the quality of our recommender model.

For user-based collaborative filtering, we predict that user $u$’s rating for item $i$ is given by the weighted sum of all other users’ ratings for item $i$, where the weighting is the cosine similarity between each user and the input user $u$.

$$\hat{r}_{ui} = \sum\limits_{u^{\prime}} sim(u, u^{\prime}) r_{u^{\prime}i}$$

We must also normalize, here by the total similarity weight, so that the prediction is a weighted average of the ${r_{u^{\prime}i}}$ ratings:

$$\hat{r}_{ui} = \frac{\sum\limits_{u^{\prime}} sim(u, u^{\prime}) r_{u^{\prime}i}}{\sum\limits_{u^{\prime}}|sim(u, u^{\prime})|}$$

As before, our computational speed will benefit greatly from favoring NumPy functions over for-loops. With our slow function below, even though I use NumPy methods, the presence of the for-loops still slows the algorithm.

def predict_slow_simple(ratings, similarity, kind='user'):
    pred = np.zeros(ratings.shape)
    if kind == 'user':
        for i in range(ratings.shape[0]):
            for j in range(ratings.shape[1]):
                pred[i, j] = similarity[i, :].dot(ratings[:, j])\
                             /np.sum(np.abs(similarity[i, :]))
        return pred
    elif kind == 'item':
        for i in range(ratings.shape[0]):
            for j in range(ratings.shape[1]):
                pred[i, j] = similarity[j, :].dot(ratings[i, :].T)\
                             /np.sum(np.abs(similarity[j, :]))
        return pred

def predict_fast_simple(ratings, similarity, kind='user'):
    if kind == 'user':
        return similarity.dot(ratings) / np.array([np.abs(similarity).sum(axis=1)]).T
    elif kind == 'item':
        return ratings.dot(similarity) / np.array([np.abs(similarity).sum(axis=1)])

%timeit predict_slow_simple(train, user_similarity, kind='user')

1 loop, best of 3: 33.7 s per loop

%timeit predict_fast_simple(train, user_similarity, kind='user')

1 loop, best of 3: 188 ms per loop
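The normalized prediction formula can be checked by hand on a tiny example. The similarity and rating values below are invented for illustration; the computation follows the user-based weighted-average formula above.

```python
import numpy as np

# Hypothetical similarities between 3 users (symmetric, ones on the diagonal).
toy_sim = np.array([[1.0, 0.5, 0.0],
                    [0.5, 1.0, 0.5],
                    [0.0, 0.5, 1.0]])
# Ratings of those 3 users for 2 items (0 means "not rated").
toy_ratings = np.array([[4., 0.],
                        [2., 5.],
                        [0., 3.]])

# Numerator: similarity-weighted sum of ratings; denominator: total weight per user.
weights = np.abs(toy_sim).sum(axis=1)[:, np.newaxis]
toy_pred = toy_sim.dot(toy_ratings) / weights

# User 0, item 0 by hand: (1.0*4 + 0.5*2 + 0.0*0) / (1.0 + 0.5 + 0.0)
print(toy_pred[0, 0], 5.0 / 1.5)
```

Note that unrated entries enter the sum as zeros, which pulls predictions toward zero; this is one plausible reason the MSE values for this simple model are large on a 1-5 scale.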

We’ll use scikit-learn’s mean squared error function as our validation metric. Comparing user- and item-based collaborative filtering, it looks like user-based collaborative filtering gives us a better result.

from sklearn.metrics import mean_squared_error

def get_mse(pred, actual):
    # Only score entries where an actual rating exists (nonzero).
    pred = pred[actual.nonzero()].flatten()
    actual = actual[actual.nonzero()].flatten()
    return mean_squared_error(pred, actual)

item_prediction = predict_fast_simple(train, item_similarity, kind='item')
user_prediction = predict_fast_simple(train, user_similarity, kind='user')

print('User-based CF MSE: ' + str(get_mse(user_prediction, test)))
print('Item-based CF MSE: ' + str(get_mse(item_prediction, test)))

User-based CF MSE: 8.39140463389
Item-based CF MSE: 11.5469590109
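The key detail of the metric is that only cells with an actual rating are scored. A NumPy-only sketch of that masking, with a hypothetical helper name `masked_mse` and made-up numbers, looks like this:

```python
import numpy as np

def masked_mse(pred, actual):
    """Mean squared error over the entries where actual is nonzero."""
    mask = actual != 0
    return np.mean((pred[mask] - actual[mask]) ** 2)

toy_pred = np.array([[3.0, 1.0],
                     [2.0, 4.0]])
toy_actual = np.array([[5.0, 0.0],
                       [0.0, 4.0]])

# Only the two rated cells count: ((3 - 5)^2 + (4 - 4)^2) / 2
print(masked_mse(toy_pred, toy_actual))
```

The predictions in the unrated cells (1.0 and 2.0 here) have no effect on the score.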

Top-$k$ Collaborative Filtering

We can attempt to improve our prediction MSE by only considering the top $k$ users who are most similar to the input user (or, similarly, the top $k$ items). That is, when we calculate the sums over $u^{\prime}$

$$\hat{r}_{ui} = \frac{\sum\limits_{u^{\prime}} sim(u, u^{\prime}) r_{u^{\prime}i}}{\sum\limits_{u^{\prime}}|sim(u, u^{\prime})|}$$

we only sum over the top $k$ most similar users. A slow implementation of this algorithm is shown below. While I am sure that there is a way to use NumPy sorting to get rid of the double for-loops, I got pretty frustrated deciphering the 2D argsort output and just settled for the slow loop.

As is shown below, employing this method noticeably reduces our error, from about 8.4 to 6.5 for user-based and from about 11.5 to 7.8 for item-based filtering.

def predict_topk(ratings, similarity, kind='user', k=40):
    pred = np.zeros(ratings.shape)
    if kind == 'user':
        for i in range(ratings.shape[0]):
            # Indices of the k users most similar to user i
            top_k_users = np.argsort(similarity[:,i])[:-k-1:-1]
            for j in range(ratings.shape[1]):
                pred[i, j] = similarity[i, :][top_k_users].dot(ratings[:, j][top_k_users])
                pred[i, j] /= np.sum(np.abs(similarity[i, :][top_k_users]))
    if kind == 'item':
        for j in range(ratings.shape[1]):
            # Indices of the k items most similar to item j
            top_k_items = np.argsort(similarity[:,j])[:-k-1:-1]
            for i in range(ratings.shape[0]):
                pred[i, j] = similarity[j, :][top_k_items].dot(ratings[i, :][top_k_items].T)
                pred[i, j] /= np.sum(np.abs(similarity[j, :][top_k_items]))

    return pred

pred = predict_topk(train, user_similarity, kind='user', k=40)
print('Top-k User-based CF MSE: ' + str(get_mse(pred, test)))

pred = predict_topk(train, item_similarity, kind='item', k=40)
print('Top-k Item-based CF MSE: ' + str(get_mse(pred, test)))

Top-k User-based CF MSE: 6.47059807493
Top-k Item-based CF MSE: 7.75559095568
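One possible way to drop the double loop, offered as a sketch rather than the notebook's method, is to zero out all but the $k$ largest similarities in each row and then reuse the matrix product from the simple model. The function name `predict_topk_vectorized` and the random toy data are assumptions; with tied similarities the chosen neighbors may differ from the `argsort` version.

```python
import numpy as np

def predict_topk_vectorized(ratings, similarity, k=40):
    """User-based top-k prediction without explicit Python loops (sketch)."""
    n = similarity.shape[0]
    # Column indices of the k largest similarities in each row
    # (the order within those k is arbitrary, which is fine for a sum).
    top_k = np.argpartition(similarity, n - k, axis=1)[:, n - k:]
    # Keep only those k weights per row; every other weight becomes zero.
    masked = np.zeros_like(similarity)
    rows = np.arange(n)[:, np.newaxis]
    masked[rows, top_k] = similarity[rows, top_k]
    return masked.dot(ratings) / np.abs(masked).sum(axis=1)[:, np.newaxis]

# Toy data: 6 users, 4 items, and a random symmetric similarity matrix.
rng = np.random.RandomState(0)
toy_ratings = rng.randint(0, 6, size=(6, 4)).astype(float)
toy_sim = rng.rand(6, 6)
toy_sim = (toy_sim + toy_sim.T) / 2
np.fill_diagonal(toy_sim, 1.0)

toy_pred = predict_topk_vectorized(toy_ratings, toy_sim, k=3)
print(toy_pred.shape)
```

Under the same assumptions, the item-based case could be obtained by passing the transposed ratings with the item similarity matrix and transposing the result.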

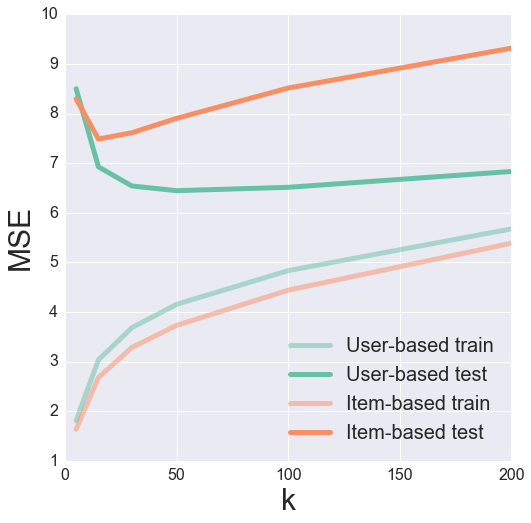

We can try tuning the parameter $k$ to find the optimal value for minimizing our testing MSE. Here, it often helps to visualize the results to get a feeling for what’s going on.

k_array = [5, 15, 30, 50, 100, 200]
user_train_mse = []
user_test_mse = []
item_test_mse = []
item_train_mse = []

for k in k_array:
    user_pred = predict_topk(train, user_similarity, kind='user', k=k)
    item_pred = predict_topk(train, item_similarity, kind='item', k=k)

    user_train_mse += [get_mse(user_pred, train)]
    user_test_mse += [get_mse(user_pred, test)]

    item_train_mse += [get_mse(item_pred, train)]
    item_test_mse += [get_mse(item_pred, test)]

%matplotlib inline
import matplotlib.pyplot as plt
import seaborn as sns
sns.set()

pal = sns.color_palette("Set2", 2)

plt.figure(figsize=(8, 8))
plt.plot(k_array, user_train_mse, c=pal[0], label='User-based train', alpha=0.5, linewidth=5)
plt.plot(k_array, user_test_mse, c=pal[0], label='User-based test', linewidth=5)
plt.plot(k_array, item_train_mse, c=pal[1], label='Item-based train', alpha=0.5, linewidth=5)
plt.plot(k_array, item_test_mse, c=pal[1], label='Item-based test', linewidth=5)
plt.legend(loc='best', fontsize=20)
plt.xticks(fontsize=16);
plt.yticks(fontsize=16);
plt.xlabel('k', fontsize=30);
plt.ylabel('MSE', fontsize=30);

It looks like a $k$ of 50 and 15 produces a nice minimum in the test error for user- and item-based collaborative filtering, respectively.

Bias-subtracted Collaborative Filtering

For our last method of improving recommendations, we will try removing biases associated with either the user or the item. The idea here is that certain users may tend to always give high or low ratings to all movies. One could imagine that the relative difference in the ratings that these users give is more important than the absolute rating values.

Let us try subtracting each user’s average rating when summing over similar users’ ratings and then add that average back in at the end. Mathematically, this looks like

$$\hat{r}_{ui} = \bar{r_{u}} + \frac{\sum\limits_{u^{\prime}} sim(u, u^{\prime}) (r_{u^{\prime}i} - \bar{r_{u^{\prime}}})}{\sum\limits_{u^{\prime}}|sim(u, u^{\prime})|}$$

where $\bar{r_{u}}$ is user $u$’s average rating.

def predict_nobias(ratings, similarity, kind='user'):
    if kind == 'user':
        user_bias = ratings.mean(axis=1)
        ratings = (ratings - user_bias[:, np.newaxis]).copy()
        pred = similarity.dot(ratings) / np.array([np.abs(similarity).sum(axis=1)]).T
        pred += user_bias[:, np.newaxis]
    elif kind == 'item':
        item_bias = ratings.mean(axis=0)
        ratings = (ratings - item_bias[np.newaxis, :]).copy()
        pred = ratings.dot(similarity) / np.array([np.abs(similarity).sum(axis=1)])
        pred += item_bias[np.newaxis, :]

    return pred

user_pred = predict_nobias(train, user_similarity, kind='user')
print('Bias-subtracted User-based CF MSE: ' + str(get_mse(user_pred, test)))

item_pred = predict_nobias(train, item_similarity, kind='item')
print('Bias-subtracted Item-based CF MSE: ' + str(get_mse(item_pred, test)))

Bias-subtracted User-based CF MSE: 8.67647634245
Bias-subtracted Item-based CF MSE: 9.71148412222
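A two-user worked example may help in following the bias-subtracted formula term by term. The numbers are invented; the per-user average is taken over the whole row, mirroring the row-wise mean used in `predict_nobias`, so unrated zeros are included in the average.

```python
import numpy as np

toy_sim = np.array([[1.0, 0.5],
                    [0.5, 1.0]])
toy_ratings = np.array([[5., 3.],
                        [2., 0.]])

# Per-user average over the whole row (zeros included): [4., 1.]
user_bias = toy_ratings.mean(axis=1)
centered = toy_ratings - user_bias[:, np.newaxis]

# Weighted average of the centered ratings, then add each user's bias back.
weights = np.abs(toy_sim).sum(axis=1)[:, np.newaxis]
toy_pred = toy_sim.dot(centered) / weights + user_bias[:, np.newaxis]

# User 0, item 0 by hand: 4 + (1.0*(5 - 4) + 0.5*(2 - 1)) / 1.5
print(toy_pred[0, 0], 4. + 1.5 / 1.5)
```

Averaging over rated entries only would be a different design choice and is not what the function above does.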

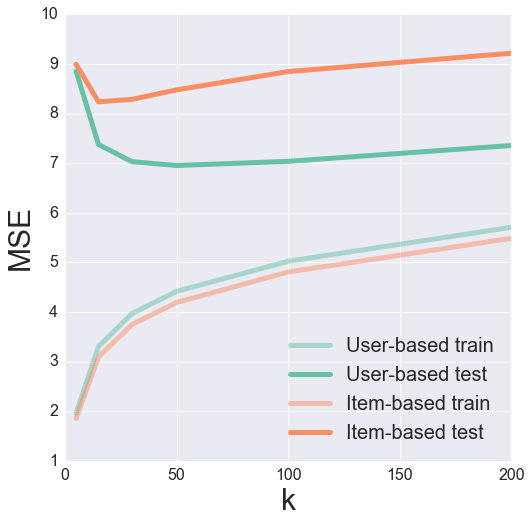

All collectively now

Finally, we can try combining both the Top-$k$ and the Bias-subtracted algorithms. Strangely enough, this actually seems to perform worse than the original Top-$k$ algorithm. Go figure.

def predict_topk_nobias(ratings, similarity, kind='user', k=40):
    pred = np.zeros(ratings.shape)
    if kind == 'user':
        user_bias = ratings.mean(axis=1)
        ratings = (ratings - user_bias[:, np.newaxis]).copy()
        for i in range(ratings.shape[0]):
            # Indices of the k users most similar to user i
            top_k_users = np.argsort(similarity[:,i])[:-k-1:-1]
            for j in range(ratings.shape[1]):
                pred[i, j] = similarity[i, :][top_k_users].dot(ratings[:, j][top_k_users])
                pred[i, j] /= np.sum(np.abs(similarity[i, :][top_k_users]))
        pred += user_bias[:, np.newaxis]
    if kind == 'item':
        item_bias = ratings.mean(axis=0)
        ratings = (ratings - item_bias[np.newaxis, :]).copy()
        for j in range(ratings.shape[1]):
            # Indices of the k items most similar to item j
            top_k_items = np.argsort(similarity[:,j])[:-k-1:-1]
            for i in range(ratings.shape[0]):
                pred[i, j] = similarity[j, :][top_k_items].dot(ratings[i, :][top_k_items].T)
                pred[i, j] /= np.sum(np.abs(similarity[j, :][top_k_items]))
        pred += item_bias[np.newaxis, :]

    return pred

k_array = [5, 15, 30, 50, 100, 200]
user_train_mse = []
user_test_mse = []
item_test_mse = []
item_train_mse = []

for k in k_array:
    user_pred = predict_topk_nobias(train, user_similarity, kind='user', k=k)
    item_pred = predict_topk_nobias(train, item_similarity, kind='item', k=k)

    user_train_mse += [get_mse(user_pred, train)]
    user_test_mse += [get_mse(user_pred, test)]

    item_train_mse += [get_mse(item_pred, train)]
    item_test_mse += [get_mse(item_pred, test)]

pal = sns.color_palette("Set2", 2)

plt.figure(figsize=(8, 8))
plt.plot(k_array, user_train_mse, c=pal[0], label='User-based train', alpha=0.5, linewidth=5)
plt.plot(k_array, user_test_mse, c=pal[0], label='User-based test', linewidth=5)
plt.plot(k_array, item_train_mse, c=pal[1], label='Item-based train', alpha=0.5, linewidth=5)
plt.plot(k_array, item_test_mse, c=pal[1], label='Item-based test', linewidth=5)
plt.legend(loc='best', fontsize=20)
plt.xticks(fontsize=16);
plt.yticks(fontsize=16);
plt.xlabel('k', fontsize=30);
plt.ylabel('MSE', fontsize=30);

Validation

Having expanded upon the basic collaborative filtering algorithm, I have shown how we can reduce our mean squared error with increasing model complexity. However, how do we really know if we are making good recommendations? One thing that I glossed over was our choice of similarity metric. How do we know that cosine similarity was a good metric to use? Because we are dealing with a domain where many of us have intuition (movies), we can look at our item similarity matrix and see if similar items “make sense”.

And just for fun, let us really look at the items. The MovieLens dataset contains a file with information about each movie. It turns out that there is a website called themoviedb.org which has a free API. If we have the IMDB “movie id” for a movie, then we can use this API to return the posters of movies. Looking at the movie data file below, it seems that we at least have the IMDB url for each movie.

1|Toy Story (1995)|01-Jan-1995||http://us.imdb.com/M/title-exact?Toy%20Story%20(1995)|0|0|0|1|1|1|0|0|0|0|0|0|0|0|0|0|0|0|0
2|GoldenEye (1995)|01-Jan-1995||http://us.imdb.com/M/title-exact?GoldenEye%20(1995)|0|1|1|0|0|0|0|0|0|0|0|0|0|0|0|0|1|0|0
3|Four Rooms (1995)|01-Jan-1995||http://us.imdb.com/M/title-exact?Four%20Rooms%20(1995)|0|0|0|0|0|0|0|0|0|0|0|0|0|0|0|0|1|0|0
4|Get Shorty (1995)|01-Jan-1995||http://us.imdb.com/M/title-exact?Get%20Shorty%20(1995)|0|1|0|0|0|1|0|0|1|0|0|0|0|0|0|0|0|0|0
5|Copycat (1995)|01-Jan-1995||http://us.imdb.com/M/title-exact?Copycat%20(1995)|0|0|0|0|0|0|1|0|1|0|0|0|0|0|0|0|1|0|0

If you follow one of the links in this dataset, then your url gets redirected. The resulting url contains the IMDB movie ID as the last piece of information in the url, starting with “tt”. For example, the redirected url for Toy Story is http://www.imdb.com/title/tt0114709/, and the IMDB movie ID is tt0114709.

Using the Python requests library, we can automatically extract this movie ID. The Toy Story example is shown below.

import requests

import json

response = requests.get('http://us.imdb.com/M/title-exact?Toy%20Story%20(1995)')

print(response.url.split('/')[-2])

tt0114709
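Taking the second-to-last piece of the split url relies on the redirected url ending in a slash. A slightly more defensive sketch, which needs no network access, scans the path for the segment that looks like an ID instead; the helper name `imdb_id_from_url` is hypothetical.

```python
def imdb_id_from_url(url):
    """Return the path segment that looks like 'tt' + digits, or None if absent."""
    for part in url.split('/'):
        if part.startswith('tt') and part[2:].isdigit():
            return part
    return None

# The redirected Toy Story url quoted in the text.
print(imdb_id_from_url('http://www.imdb.com/title/tt0114709/'))  # → tt0114709
```

The same call also handles a url without the trailing slash, and returns `None` when no such segment exists.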

I requested a free API key from themoviedb.org. The key is necessary for querying the API. I’ve omitted it below, so be aware that you will need your own key if you would like to reproduce this. We can search for movie posters by movie id and then grab links to the image files. The links are relative paths, so we need the base_url query at the top of the next cell to get the full path. Also, some of the links don’t work, so we can instead search for the movie by title and grab the first result.

# Get base url filepath structure. w185 corresponds to size of movie poster.
headers = {'Accept': 'application/json'}
payload = {'api_key': 'INSERT API KEY HERE'}
response = requests.get("http://api.themoviedb.org/3/configuration", params=payload, headers=headers)
response = json.loads(response.text)
base_url = response['images']['base_url'] + 'w185'

def get_poster(imdb_url, base_url):
    # Get IMDB movie ID
    response = requests.get(imdb_url)
    movie_id = response.url.split('/')[-2]

    # Query themoviedb.org API for movie poster path.
    movie_url = 'http://api.themoviedb.org/3/movie/{:}/images'.format(movie_id)
    headers = {'Accept': 'application/json'}
    payload = {'api_key': 'INSERT API KEY HERE'}
    response = requests.get(movie_url, params=payload, headers=headers)
    try:
        file_path = json.loads(response.text)['posters'][0]['file_path']
    except (KeyError, IndexError, ValueError):
        # IMDB movie ID is sometimes no good. Need to get correct one.
        movie_title = imdb_url.split('?')[-1].split('(')[0]
        payload['query'] = movie_title
        response = requests.get('http://api.themoviedb.org/3/search/movie', params=payload, headers=headers)
        movie_id = json.loads(response.text)['results'][0]['id']
        payload.pop('query', None)
        movie_url = 'http://api.themoviedb.org/3/movie/{:}/images'.format(movie_id)
        response = requests.get(movie_url, params=payload, headers=headers)
        file_path = json.loads(response.text)['posters'][0]['file_path']

    return base_url + file_path

from IPython.display import Image
from IPython.display import display

toy_story = 'http://us.imdb.com/M/title-exact?Toy%20Story%20(1995)'
Image(url=get_poster(toy_story, base_url))

Ta-da! Now we have a pipeline to go directly from the IMDB url in the data file to displaying the movie poster. With this machinery in hand, let us investigate our movie similarity matrix.

We can build a dictionary to map the movie indices from our similarity matrix to the urls of the movies. We’ll also create a helper function to return the top-$k$ most similar movies given some input movie. With this function, the first movie returned will be the input movie (because of course it is the most similar to itself).

# Load in movie data
idx_to_movie = {}
with open('u.item', 'r') as f:
    for line in f.readlines():
        info = line.split('|')
        # Field 0 is the 1-based movie id, field 4 is the IMDB url
        idx_to_movie[int(info[0])-1] = info[4]

def top_k_movies(similarity, mapper, movie_idx, k=6):
    return [mapper[x] for x in np.argsort(similarity[movie_idx,:])[:-k-1:-1]]

idx = 0 # Toy Story
movies = top_k_movies(item_similarity, idx_to_movie, idx)
posters = tuple(Image(url=get_poster(movie, base_url)) for movie in movies)
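The slicing used to pick the most similar movies is easy to misread, so here is a small sketch on a made-up 4-item similarity matrix. The helper name `top_k_indices` and the single-letter titles are placeholders, not part of the dataset.

```python
import numpy as np

def top_k_indices(similarity, idx, k=3):
    """Indices of the k most similar entries to row idx, most similar first."""
    # argsort is ascending, so take the last k entries in reverse order.
    return np.argsort(similarity[idx, :])[:-k-1:-1]

toy_sim = np.array([[1.0, 0.2, 0.7, 0.4],
                    [0.2, 1.0, 0.1, 0.3],
                    [0.7, 0.1, 1.0, 0.5],
                    [0.4, 0.3, 0.5, 1.0]])
toy_titles = {0: 'A', 1: 'B', 2: 'C', 3: 'D'}  # hypothetical titles

print([toy_titles[x] for x in top_k_indices(toy_sim, 0)])  # → ['A', 'C', 'D']
```

As the text notes, the query item itself comes back first because its self-similarity is 1.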

Hmmm, those recommendations do not seem too good! Let’s look at a couple more.

As you can see, maybe we were not using such a good similarity matrix all along. Some of these recommendations are pretty bad. Star Wars is the most similar movie to Toy Story? No other James Bond movie in the top-5 most similar movies to GoldenEye?

One thing that could be the issue is that very popular movies like Star Wars are being favored. We can remove some of this bias by considering a different similarity metric: the Pearson correlation. I’ll just grab the built-in scikit-learn function for computing this.

from sklearn.metrics import pairwise_distances

# Convert from distance to similarity

item_correlation = 1 - pairwise_distances(train.T, metric='correlation')

item_correlation[np.isnan(item_correlation)] = 0.
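One way to see how this metric relates to the earlier one: Pearson correlation is the cosine similarity of vectors after each has had its own mean subtracted. The NumPy-only sketch below checks that on a made-up matrix against `np.corrcoef`; the helper `pearson_sim_rows` is illustrative and assumes no row is constant.

```python
import numpy as np

def pearson_sim_rows(mat):
    """Pearson correlation between every pair of rows, via centered cosine."""
    centered = mat - mat.mean(axis=1)[:, np.newaxis]
    sim = centered.dot(centered.T)
    norms = np.sqrt(np.diagonal(sim))
    return sim / norms[:, np.newaxis] / norms[np.newaxis, :]

toy = np.array([[5., 3., 0., 1.],
                [4., 0., 0., 1.],
                [1., 1., 0., 5.]])

# np.corrcoef treats each row as one variable, matching the helper above.
print(np.allclose(pearson_sim_rows(toy), np.corrcoef(toy)))
```

Unlike the cosine similarity of raw ratings, these values can be negative.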

Let’s look at these movies again.

While the ordering changed some, we largely returned the same movies. Now you can see why recommender systems are such a tough beast! Next time we’ll explore more advanced models and see how they affect the recommendations.

The original IPython Notebook used to generate this post is also available.